Roman Empire

Member

It is all about dem big numbers!

I thought we played games for fun, or have I been doing it wrong all along?

So I should wait for the multiplatform games to release before I comment, but you won’t wait for anything before claiming that the divide is negligible. Got you.

The truth is Sony could have easily mentioned the performance of the games they demoed yesterday and didn’t, not for a single one. For all the flak that the MS 3rd party showing got, everyone knew at the end what performance features to expect from each of the games demoed (a table was released with all the info, so we don’t have to speculate). Digital Foundry won’t be as secretive when the games release, and this thread is a good preview of what will happen once the secrecy is broken.

This is BS. VRS can be used during pixel shader workloads. Mesh shaders and primitive shaders deal with geometry workloads.

VRS will be used in unoptimized engines for PS5 that still use an ancient LOD system. Plus it's important for PSVR2, as it'll most likely be 4K@120Hz for each eye (240Hz total).

Right, right, we want full shading in shadows with MAX shading precision to get the color, hmmm, black. Let's be less efficient, which means we are more efficient.

VRS sits closer to the end of the rendering pipeline, at the screen-space level (with some per-primitive control in Tier 2).

For example, regions of the screen behind HUD elements, textures in shadow, or regions behind motion blur can have reduced, faster versions of shaders executed against them.

In other words, the developer has greater control over where they want the GPU to spend its pixel shading power.

It's a win.

That's not a correct assumption. MS has already talked about features that they have that others don't.

They both use RDNA2, which means they both have it.

It is stupid to assume they don't.

At 15% it would be great, yeah. But the reality is, if you don't want your game to be visibly blurry, you're getting around 5% more performance. Not terrible by any means, but also not groundbreaking.
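For a sense of how a saving in pixel shading might turn into frame rate, here is a back-of-the-envelope sketch. It assumes a frame's time splits into a pixel shading share and everything else, and that VRS only shrinks the first part. The function name `fps_with_vrs` and the example numbers are hypothetical, not measurements from any game or console.

```python
def fps_with_vrs(base_fps, shading_share, shading_reduction):
    """Estimate frame rate after cutting pixel shading work.

    base_fps          -- frame rate without VRS (assumed input)
    shading_share     -- fraction of frame time spent on pixel shading (0..1, assumed)
    shading_reduction -- fraction of that shading work VRS removes (0..1, assumed)
    """
    if base_fps <= 0:
        raise ValueError("base_fps must be positive")
    if not (0.0 <= shading_share <= 1.0 and 0.0 <= shading_reduction <= 1.0):
        raise ValueError("shares must be between 0 and 1")
    saved = shading_share * shading_reduction
    if saved >= 1.0:
        raise ValueError("cannot remove the entire frame time")
    # Only the shading part of the frame gets cheaper; the rest is unchanged.
    new_frame_time = (1.0 / base_fps) * (1.0 - saved)
    return 1.0 / new_frame_time


if __name__ == "__main__":
    # Made-up example: 40% of the frame is pixel shading, VRS trims 12% of it.
    print(fps_with_vrs(30.0, 0.40, 0.12))
```

With these made-up inputs, the overall gain works out to roughly 5%, which is in the range the post above describes. A bigger gain needs either a more shading-bound frame or more aggressive (and more visible) rate reduction.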

But AC Valhalla runs at 4k 30 fps on the Series X.

The difference between 30fps PS5 and 60fps Xbox Series X

They both use a custom RDNA2 based GPU that is only similar but not equal.

That's the takeaway here: instead of taking it to the platform holder and holding them responsible, people would rather go at each other.

Fingers should only be pointed at the one(s) who didn't send a clear message, or who muddied theirs by communicating poorly.

Nope. VRS blurs the image. Unreal Engine 5 has resolution scaling and temporal upsampling good enough that the DF guys cannot tell how many pixels there are. Nvidia's AI upscaling also doesn't blur the image. Checkerboard rendering and VRS have compromises. There are better solutions out there to extract more performance.

Due to performance constraints, graphics renderers cannot always afford to deliver the same quality level on every part of their output image. Variable rate shading, or coarse pixel shading, is a mechanism to enable allocation of rendering performance/power at varying rates across the rendered image.

Visually, there are cases where shading rate can be reduced with little or no reduction in perceptible output quality, leading to “free” performance.
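As a rough illustration of the idea quoted above, here is a minimal Python sketch that counts pixel shader invocations for a frame split into tiles, each with its own shading rate. The tile size, the rate names, and the helpers `shader_invocations` and `savings` are assumptions made up for this example; they are not any console's or graphics API's actual interface.

```python
from math import ceil

# Hypothetical shading rates: each maps to the (width, height) in pixels
# that a single pixel shader invocation covers.
RATES = {
    "1x1": (1, 1),  # full rate: one invocation per pixel
    "2x1": (2, 1),
    "2x2": (2, 2),
    "4x4": (4, 4),  # coarsest: one invocation per 16 pixels
}


def shader_invocations(tile_rates, tile_size=8):
    """Count pixel shader invocations for a grid of square tiles."""
    total = 0
    for row in tile_rates:
        for rate in row:
            block_w, block_h = RATES[rate]
            total += ceil(tile_size / block_w) * ceil(tile_size / block_h)
    return total


def savings(tile_rates, tile_size=8):
    """Fraction of shading work saved compared with shading every pixel."""
    tile_count = sum(len(row) for row in tile_rates)
    full_rate = tile_count * tile_size * tile_size
    return 1.0 - shader_invocations(tile_rates, tile_size) / full_rate


if __name__ == "__main__":
    # Made-up 2x2 tile frame: detailed area at full rate, the rest coarser.
    frame = [
        ["1x1", "2x2"],
        ["2x2", "4x4"],
    ]
    print(shader_invocations(frame), savings(frame))
```

The point of the sketch is only that the saving depends on how much of the frame can tolerate a coarser rate; it says nothing about how visible the reduction is.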

Sorry, with the FUD so thiccc here I'm just trying to be different.

Please stop making too much sense!!

Alex is a senior member of the XboxEra Discord FUD cult. The rest try to be neutral and hide their bias, although funded by MS.

If you're arguing about whether VRS is good or bad, your statement above would still make it good, wouldn't it?

What were the benefits of VRS then? Wasn't it running at 24fps/3840x1608? You're saying it would be sub-20fps without it?

No, you are confusing things that the engine is doing with hardware features.

Sony has that already via the Horizon ZD technique. They've been rendering only what the player sees for quite some time.

Because it's potential free performance.

It's not a bad thing, but it's a bloody compromise like checkerboarding. Would we be asking why our games don't have checkerboarding? VRS is like checkerboard rendering: it reduces image quality/resolution, but only on parts of the screen. It's a good technique, but why are we trying to push the narrative that because the games didn't use it, it must not have hardware support?

You're either clueless or trolling, which is it?

VRS will be used in unoptimized engines for PS5 that still use an ancient LOD system. Plus it's important for PSVR2, as it'll most likely be 4K@120Hz for each eye (240Hz total).

At what cost the

VRS is still within the render camera's viewport. Variable Rate Shading changes shading resolution, which conserves GPU resources.

Conceptually it's a win.

Actually it sharpens detail where it matters most, while blurring things that are less noticed/if noticed at all in the background.

Audio and SSD are part of Hardware, so saying that Xbox has better hardware is simply wrong. Xbox has some advantages while PS5 has others, simple as that.

The scenario is somewhat similar to N64 vs PS1 (though not to that extreme): the N64 had the better GPU but lost games (FF7, Metal Gear, RE, etc.) due to its limited cartridge space, 32MB vs 700MB.

At what cost the

Seems to be very important then. Let's judge the outcomes based on the next 5 years of games and their individual graphics. What do you say?

Because anything else is smoke and mirrors. Lots of talking; let the games do the talking, right?

Geometry Engine is more Mesh Shading.

I would have thought Sony would have mentioned VRS by now if they had it, but until Sony gives out some more info, hopefully in the teardown reveal, we can't really judge much.

VRS would have been available to them via RDNA 2 if they wanted to option it, so maybe they have their own solution, or didn't value it.

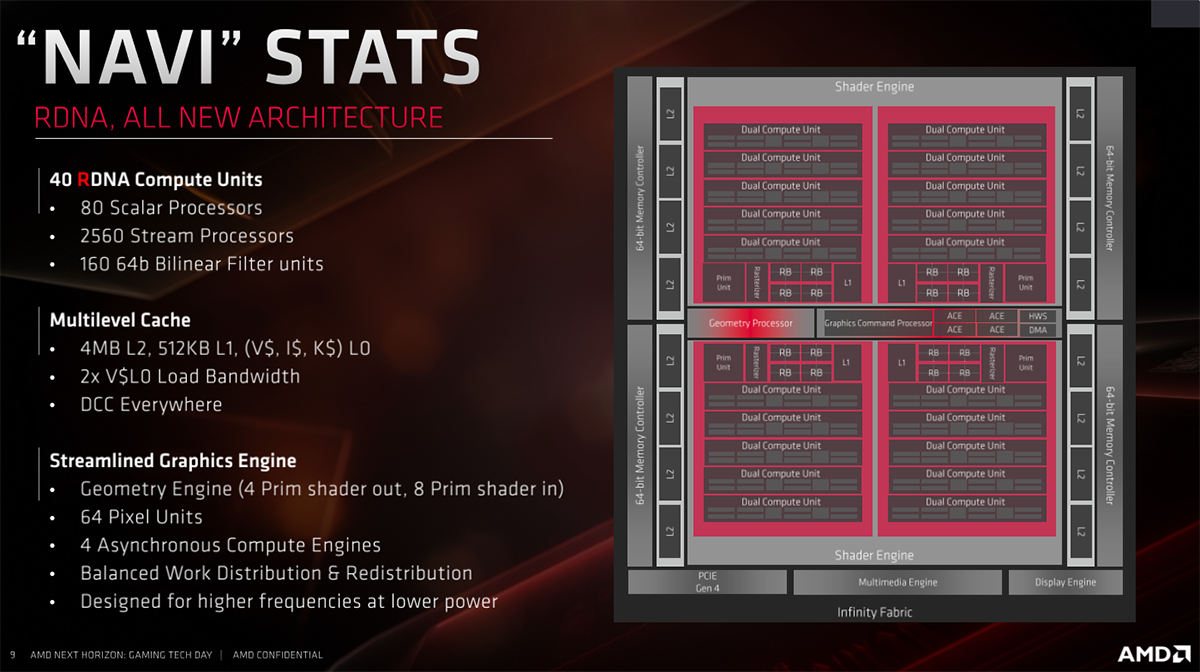

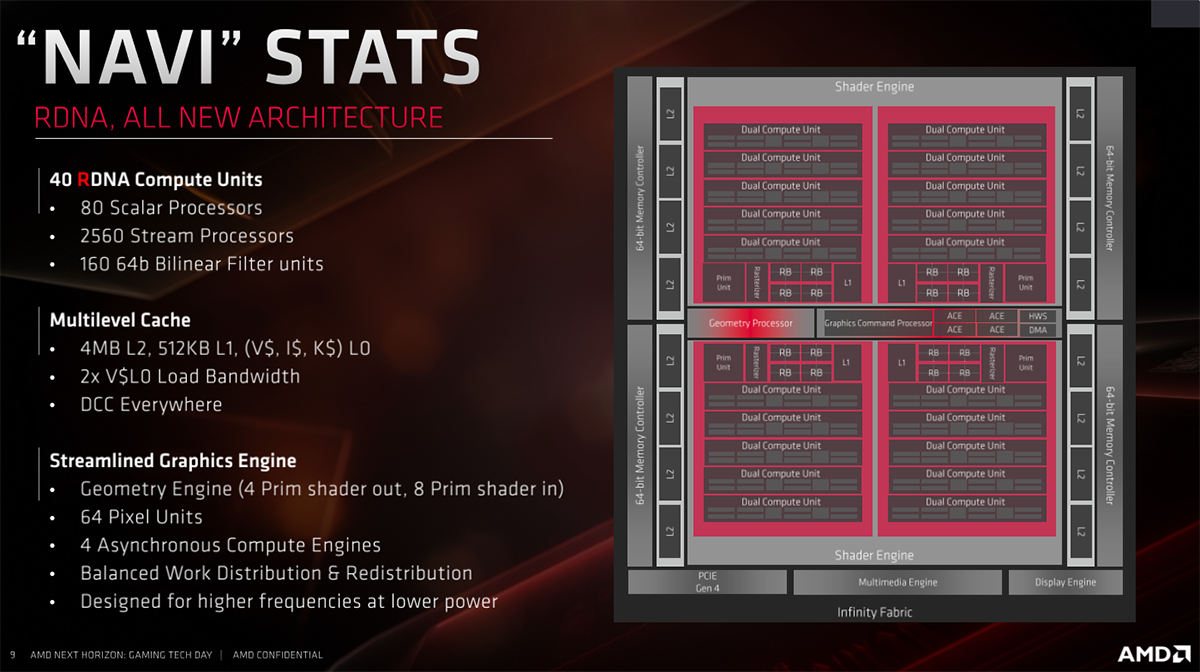

On a features level, Cerny reveals features that suggest parity with other upcoming AMD and AMD-derived products based on the RDNA 2 technology. A new block known as the Geometry Engine offers developers unparalleled control over triangles and other primitives, and easy optimisation for geometry culling. Functionality extends to the creation of 'primitive shaders' which sounds very similar to the mesh shaders found in Nvidia Turing and upcoming RDNA 2 GPUs.

VRS is also "lossy", in that it results in lower image quality for certain portions of the screen. It may or may not be visible to players.

Anyway, some people should reconsider whether PS5 has VRS (which is a STANDARD feature of RDNA 2), given that a former PS5 software engineer said this:

No way, does AC have raytracing with that 4k 30, like those 4k games seen yesterday?

No.

The tweet says the opposite of what you are trying to say.

VRS = GOOD THING

Whether the PS5 has it or not is a different argument; I actually think it does have it.

IIRC isn't the GE based mainly around Primitive Shading? Here's a quote from the Eurogamer article:

So you might be right in that it's an equivalent to mesh shading, but the question is how much of it is actually based on mesh shading versus primitive shading, which was introduced with the first RDNA iteration. The mention of primitive shaders right in the article itself would suggest the GE is built more around AMD's Primitive Shaders, which are compiler-controlled.

It might be possible that Sony has taken a few features from mesh shaders into the GE, however.

Do you have the specs on the XSX audio HW?

GE isn't anything new. It has existed since Vega. The difference in RDNA 1 is that it was improved. But it's still doing fundamentally the same thing: it's improving the current pipeline.

So primitive shaders just cater to the existing pipeline and accelerate it for culling and such, but mesh shaders reinvent the entire pipeline and give you low-level access and control over threading, model selection, topology customization, etc.

So primitive shaders and the Geometry Engine are NOT new. What's actually new in RDNA 2 is support for mesh shaders.

Here's RDNA 1

No, but I'm 100% sure it isn't nearly as capable as the one inside PS5, otherwise MS would be touting it as the best thing ever.

XSX audio chip is similar to the one in Xone and PS4. Nothing fancy.

Thanks for some of the clarifications; so if Sony is essentially using primitive shaders (albeit improved over the Vega versions, and maybe bringing in a few bits of mesh shaders if that's possible) and MS is using mesh shaders, I would assume that the latter is going to offer a good deal more efficiency and an overall performance boost.

This is a bit why I didn't want to tread on the topic because it kind of supports the idea that while PS5 is certainly custom RDNA2, the range of what RDNA2 features it (or any custom GPU) can have can vary wildly. Even among things we might assume are standard RDNA2!

Also in that graphic I notice they literally call it the Geometry Engine themselves, and this is on AMD's end for RDNA1. Road to PS5 kind of made it sound like it was Sony's own nomenclature. I still think Sony's done some customizations here, FWIW.

*looks at avatar and post history*

The desperation is seeping through your post. Yikes. Buckle up, because we're gonna get hundreds of DF comparison videos this gen. Might wanna make sure you're stocked up on blood pressure medication.

well they said they are trying to get it to 60fps... and I hope they will because there's honestly no excuse for a cross gen game to be 30fps this time around.

it made sense on PS4 and Xbox One due to their god awful CPUs, but now? nah

No matter how many times you post it Bo, it will be ignored, because many here know more than Matt. RDNA 2 VRS is going to change the game, not the VRS with advanced customizations on PS5 though, the normal one you get from the AMD's GPU hardware and software stack.

What's crazier is that so many are now latching onto VRS, but pretty much all modern GPUs landing in 2020 will support VRS; it's like your 2020 AF. Everything from the Intel Xe parts to the Ampere GPUs to the RDNA 2 GPUs... Current NV GPUs even support VRS already; it's not proprietary at all. People should be clued in already, based on all the custom work on the SSD, that Sony never goes for the standard: they put in their own spin and improvements to the standard VRS that comes with RDNA 2.

"Minimum"

Meaning there's going to be a 60fps option as well.

You need to chill out, man. They aren't spreading FUD, they simply didn't see any evidence of it. If you can, you're more than welcome to point it out and correct them.

They like spreading FUD, just like DF and other suspect websites. As if we do care what's happening inside PS5 to produce this insane CGI movie level of gameplay!

No, because there is precedent, at least for Dirt. It runs at pretty high frame rates on all GPUs; it's a game made for high frame rates. Remember when Vega GPUs used to dominate even the 1080 Ti in prior Dirt games? It's also cross-gen: Dirt will run at high frame rates on pretty much all mid-gen GPUs, with a guaranteed 60fps on lower-end cards.

Also, you have The Ascent, which is an over-the-top shooter; these games will run at very high frame rates on anything. According to Steam, the PC requirements are an i3 with a GTX 660. As for Scorn, that game has been in development for a while, so it's not so much a ground-up next-gen game; there are gameplay videos if you care to watch. I can easily see a 120fps mode, even at lowered resolutions. However, it's not really a fast-paced game like Quake, where 120fps would be more noticeable. Yet Scorn's official requirements (not even the minimum ones) are an i5 with a GTX 970, which is really not that high. The next-gen consoles run circles around such a setup. 120fps modes are not out of the ordinary for any of these titles. The only one we have not seen gameplay of is The Medium, so that is a wait and see.

I frankly don't see a need for that, or what a possible customization would entail. Based on Cerny's description in Road to PS5, its Geometry Engine is doing exactly what AMD already described. I firmly believe the whole "CUSTOM X" is overhyped marketing. It's more like picking that you want a tomato, how big a slice you want, and how many tomato slices you want on your Subway sandwich, rather than working at the gene-editing facility to change the functions and properties of the tomatoes.

I agree with this. My other take - and hence why I am curious over a proper look at that PS5 GPU - is that it seems to me PS5 set out from the beginning to optimise the architecture differently.

In a PC framework the optimisation parameters are set: You want to optimise FPS in a 4k setting with a set (and limited) VRAM budget (with RT and other features of course). This results in a given hardware path for optimisation.

Assume you are Sony and say: We aim for 4K/stable 30FPS but with a much larger amount of textures at a higher resolution on screen (with RT and other features of course).

If that assumption is correct, what changes, if any, would you need to make to the weighting of the different components at the GPU level (not new features as such, but rather where the bottlenecks would come into play given the much larger number of textures and their size)? I might be wrong, but I have a suspicion that Cerny had something like the above in mind when designing the console.

I have a problem with DF saying “little to no evidence” when there’s no reason to believe some form of VRS isn’t being used.

The point of VRS (and especially Tier 2) is to reduce rendering load in parts of the frame that don’t need to be rendered at full res like low contrast, blurred, or distanced objects. You’re not supposed to be able to tell when it’s being used.

Check out these shots comparing Tier 2 on vs. off:

You might be able to find some differences in the stills, but in motion? On compressed streams? Would you believe this implementation gives a 60%+ performance improvement on a 2080?

VRS Tier 1 runs on integrated Intel GPUs, and Microsoft’s VRS patent even references Mark Cerny’s patent from 6 years ago for the same thing: https://patents.google.com/patent/US20150287167

This is 2013 and Tiled Resources all over again: https://www.neogaf.com/threads/auto...iling-announced-for-w8-1-and-xbox-one.604641/

Wrong. The PS4 did not even have a dedicated audio chip. Sound designers had to hold back to preserve Jaguar CPU time.

I think it does? Something about it being similar to AMD’s TrueAudio chip.