JägerSeNNA

Banned

Variable Frequency for CPU and GPU

Unstable????


According to MS, cloud computing would allegedly be a game changer and eSRAM was a revolution back in 2013. All of that came to a big nothing. Don't come to me with "according to Cerny" plus NXGamer, please no. First of all, you have to tell me: how are they going to keep this thing cool at those speeds when both CPU and GPU are maxed out? Waiting for a logical answer. Please don't come to me with "but someone said that".


Both CPU and GPU can work at their top frequency together as long as the total workload does not exceed the power cap

The people who claimed that cloud computing would be a game changer were also Xbox engineers.

Cerny is a designer and system architect and beyond that. How are they going to keep this thing cool? Keeping CPU and GPU frequency high has, most of the time, nothing to do with thermals. Yeah, looks like you didn't listen to what Cerny said about the cooling solution. We are ending the discussion here right now.


Most of the time. You probably didn't listen to what Cerny said, maybe even deliberately. Cerny stated clearly that they expect both CPU and GPU to spend most of their time at their top frequencies. Both CPU and GPU can work at their top frequency together as long as the total workload does not exceed the power cap. If that happens, the downclock will be minor. Based on NXG's analysis, it's about 50 MHz. It's sufficient to lower clocks by 2% to lower power consumption by 10%. Dropping power consumption by 10% IS NOT a 10% drop in performance.

Btw. welcome to GAF.

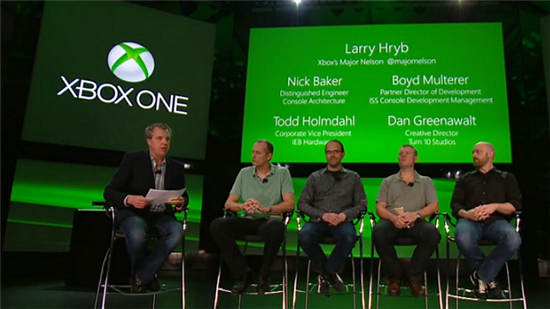

Major Nelson surely isn't an engineer, yet he talked about the Power of the Cloud:

The people who claimed that cloud computing would be a game changer were also Xbox engineers.

Yes, I watched his tech talk. If it were only a 2% reduction in core speeds, he wouldn't even have mentioned it. Believe what you want to believe.


Oh, you again with that crap. I explained that to you yesterday, yet you keep posting the same crap over and over, Xbox fan!

What about the memory bus feeding the XSX GPU at a MUCH HIGHER bandwidth? That's 560 GB/s vs 448 GB/s, a 112 GB/s advantage for the X feeding the memory pipeline, vs a 22 GB/s best case (really 8-9 GB/s, like you stated) for the PS5 vs 6 GB/s. That's a delta of 112 GB/s for the X versus a likely delta of 3 GB/s (9 - 6) for the PS5, so much more of an advantage for the Series X. Not quite apples to apples, but we're still talking about the memory system setup and bandwidth with the same destinations. I don't think the XSX SSD will be as much of a bottleneck as the PS5's RAM bandwidth will be, IMO.

The best-case scenario Cerny talks about is 22 GB/s.

8-9 GB/s is the average/typical.

How is it crap?

As Cerny and even you say, PS5 CPU/GPU may have to adjust when things get too tough, as there's a power cap.

How can you downplay the GIF when you're even going off about MHz and clock cycle reductions? If the system is so great, just have both CPU and GPU run at max speed and not worry about thermals, like every other console does.

Also, you still haven't given a definition of what "most of the time" means. And Cerny beat around the bush too. A vague claim which can mean anything.

I agree.

I think what he meant by "this crap" is that the SSD is even included there, because the SSD is not even close to as important as the CPU and GPU.

Think about it: take the base Xbox One, don't change the CPU and GPU, just change to an SSD. Will there be a significant change other than loading times and maybe less pop-in? No.

Now change the CPU and GPU. Will there be a significant change? HELL YEAH. HUGE difference.

Even just changing the CPU will change games DRASTICALLY.

The SSD is nice for loading times and a snappier OS, and maybe for open-world games. That's it. CPU and GPU: that's where the important stuff is.

It's certainly the first time in console history that I've seen persistent storage even being considered a power ingredient. On PCs

So on one hand the PS5 is stronger than the XSX, because the XSX is merely brute-forcing and the PS5 is much more elegant... buuuuuut on the other hand, the XSX is faster than the PS5, because the PS5 is merely brute-forcing and the XSX is much more elegant.

Or maybe... just maybe... both machines are incredibly well optimized with different strengths, where Sony has opted for a slightly slower APU with an extreme SSD, while MS has chosen a slightly faster APU with a slightly less extreme SSD.

First of all, he's making the PS5 consume the same amount of watts every time it's on so the cooling can be calibrated. That's already bad for your electricity bill. You should want him to come up with a dynamic and reliable cooling solution instead. You're paying for it.



What the hell is wrong with you? Are you trying to destroy the insane discussion happening here, and the console wars, with your rational and reasonable take?


It's crap since you Xbone fans are trying to push the narrative that the PS5 is a 9.2 TF console, which is FUD in every single way.

I'll just copy the same crap I told you a few days ago:

Like Cerny stated, both CPU and GPU can work at their top frequency together as long as the total workload does not exceed the power cap. If that happens, the downclock will be minor. It's sufficient to lower clocks by 2% to lower power consumption by 10%. Dropping power consumption by 10% IS NOT a 10% drop in performance.

If a game sustains max resolution most of the time (like FC 5 on X1X, which is at 4K most of the time; even John Linneman couldn't notice drops in resolution, yet VGTech did), then surely the console will be at peak power most of the time. Like in a bunch of DF and NXG game comparisons, where it was mentioned so often: resolution drops are a rarity (depending on platform).

Who's saying it's 9.2 TF lately? Since they revealed it maxing out at 10.3 TF with throttling, 9.2 TF seems way understated.

But there's no denying the PS5 has issues with maxing out cores and workload. That's why you and Cerny have been pushing variable speeds and 2% downclocks or whatever, where there's this theoretically vague "most of the time" statement.

Just accept it. Everyone has. The PS5 can't max out GHz at full workload like every other console can.

If Sony had designed this system with better specs to begin with, you wouldn't even need a console GPU running at 2.23 GHz. No other console (even SeX) has a GPU even touching 2 GHz.

Mind you that for all consoles with "locked" specs, the numbers are theoretical. I think NXG mentioned that in his recent analysis.

I'm sure they are, even moreso for specs which need to downclock so the system doesn't melt down.

You can keep going all day defending this odd variable CPU/GPU throttling, but there's no denying that if Sony had just made a more powerful system right off the bat like MS did, you wouldn't even need a GPU busting out at 2.23 GHz to begin with.

SeX gets by perfectly fine with a GPU at only 1.825 GHz.
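For anyone following the TF numbers thrown around in this thread, here is a rough sketch of where they come from. It assumes the usual peak-FP32 formula for these GPUs (CUs x 64 lanes x 2 FLOPs per fused multiply-add x clock) and the publicly announced CU counts of 36 for PS5 and 52 for XSX, neither of which is stated in the posts above. The function and variable names are made up for illustration.

```python
# Peak FP32 throughput sketch. Assumptions (not from the thread):
# 64 shader lanes per CU, 2 FLOPs per lane per clock (one FMA),
# 36 CUs for PS5 and 52 CUs for XSX.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Theoretical peak in TFLOPS; real workloads land below this."""
    gflops = compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_ghz
    return gflops / 1000.0


ps5_peak = peak_tflops(36, 2.23)                # at the 2.23 GHz cap
ps5_minus_2pct = peak_tflops(36, 2.23 * 0.98)   # after a 2% downclock
ps5_at_2ghz = peak_tflops(36, 2.0)              # hypothetical 2.0 GHz clock
xsx_peak = peak_tflops(52, 1.825)               # fixed 1.825 GHz clock

for label, value in [
    ("PS5 @ 2.23 GHz", ps5_peak),
    ("PS5 @ 2.23 GHz - 2%", ps5_minus_2pct),
    ("PS5 @ 2.00 GHz", ps5_at_2ghz),
    ("XSX @ 1.825 GHz", xsx_peak),
]:
    print(f"{label}: {value:.2f} TF")
```

Under these assumptions the 9.2 TF figure corresponds to a 2.0 GHz clock, roughly a 10% downclock from 2.23 GHz, while a 2% downclock costs about 0.2 TF.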

Defending. LOL! Why do Xbox fans keep spreading FUD, then? I presume it's not OK to defend it, but spreading FUD and crap is OK. The GPU and CPU will be at peak frequency most of the time; the system adjusts clocks based on the activity of the chip, not temperature.

Are all 3rd party games mediocre too?

Ok great, so they will have great compression on their lackluster games. I mean, how good is a mediocre game if it's compressed in a better way? Will it somehow be a good game?

Not enough Netflix 3rd-person action adventures, and every gamer knows only those are good games.

So you saw Halo 6, Hellblade 2, Playground's new RPG, Forza 9, Obsidian's new big game, The Initiative's new game, and all the other 2nd party games MS has deals with? Damn son, you're the next Miss Cleo!

That Ori and the Will of the Wisps sure is a mediocre game with that 90 Metacritic score.

What is "most of the time", 51%? Sony won't give numbers like they do for the SSD. Cerny never said 2%; he said "a couple". He was very careful to avoid specific numbers on the downclock. "Minor", "a couple" and "most of the time" were what he used. Show us the numbers, just like for the SSD. What is the max downclock? What workloads cause issues? Etc. Quit hiding the facts like Microsoft in 2013.


From a brief skim, is this where we’re really at? Arguing about texture compression methods for 5 pages? : P

Imma need you to tone down that sound logic of yours.


Sure, that 88%. If they can decompress that in time, devs can use it.

So XSX SSD is actually up to 19.2 GB/s.

I was once in the group saying my 720p games looked as fine as 1080p games on PS4. We were wrong, but our console was the weaker one that time. I understand why Sony fans are doing this right now. I totally understand.

Sure, the 10.3 TF PS5 is better than the 12 TF XSX.

He said a few/couple of percent. And a few/couple of percent ISN'T 10%, it's 1 to 3 or 4; a bunch of tech breakdowns are assuming a couple is 2 or 3. Based on NXG, he thinks it's around 50 MHz.

“HE THINKS” he has no proof to fully support this claim.

He calculated it based on what Cerny said.

That's why "he thinks", because there is nothing more than estimates until we see some actual numbers.


It's sufficient to lower clocks by 2% to lower power consumption by 10%.
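A quick way to sanity-check the 2%-for-10% claim is to ask what power-versus-clock relationship it implies. The sketch below assumes a simple power law, P proportional to f^k, which is only a rough model and not something Cerny or NXG stated; the cubic case (k = 3) is the textbook approximation when voltage scales with frequency. The 2230 MHz peak and the 50 MHz figure come from the thread; the function names are hypothetical.

```python
import math

PEAK_GPU_MHZ = 2230.0  # 2.23 GHz peak GPU clock quoted in the thread


def implied_exponent(clock_drop: float, power_drop: float) -> float:
    """Exponent k such that (1 - clock_drop)**k == 1 - power_drop, assuming P ~ f**k."""
    return math.log(1.0 - power_drop) / math.log(1.0 - clock_drop)


def power_saving(clock_drop: float, k: float) -> float:
    """Fraction of power saved by a given fractional clock drop under P ~ f**k."""
    return 1.0 - (1.0 - clock_drop) ** k


def clock_drop_for(power_drop: float, k: float) -> float:
    """Fractional clock drop needed to save a given fraction of power under P ~ f**k."""
    return 1.0 - (1.0 - power_drop) ** (1.0 / k)


# Exponent implied by "2% clock drop saves 10% power".
k = implied_exponent(0.02, 0.10)

# The 50 MHz estimate expressed as a fraction of the peak clock.
drop_50mhz = 50.0 / PEAK_GPU_MHZ

print(f"implied exponent k: {k:.2f}")
print(f"50 MHz as a fraction of peak: {drop_50mhz:.2%}")
print(f"saving from a 2% drop with the cubic rule (k=3): {power_saving(0.02, 3.0):.2%}")
print(f"clock drop needed for a 20% power cut at implied k: {clock_drop_for(0.20, k):.2%}")
```

Under this model the claim needs an exponent of about 5, steeper than the cubic rule of thumb. That would fit a chip running near the top of its voltage/frequency curve, though nothing in the thread confirms it. Either way, performance scales at most linearly with clock, so a 2% downclock cannot cost more than about 2% in performance.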


Memory bandwidth doesn't make a difference for streaming data from SSD to RAM... the SSD data will use a very small part of the bandwidth.
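To put the "very small part" claim in numbers, the sketch below divides the SSD throughput figures quoted in this thread by the RAM bandwidth figures quoted in this thread. It treats every byte streamed from the SSD as a single write to RAM, which is a simplification, and the names are made up for illustration.

```python
# Figures as quoted in the thread, in GB/s.
RAM_BANDWIDTH_GBPS = {"PS5": 448.0, "XSX": 560.0}
PS5_SSD_GBPS = {"typical": 9.0, "best case": 22.0}


def ram_share(ssd_gbps: float, ram_gbps: float) -> float:
    """Fraction of RAM bandwidth consumed by writing SSD data into RAM once."""
    return ssd_gbps / ram_gbps


ps5_typical_share = ram_share(PS5_SSD_GBPS["typical"], RAM_BANDWIDTH_GBPS["PS5"])
ps5_best_share = ram_share(PS5_SSD_GBPS["best case"], RAM_BANDWIDTH_GBPS["PS5"])
ram_delta = RAM_BANDWIDTH_GBPS["XSX"] - RAM_BANDWIDTH_GBPS["PS5"]

print(f"PS5 SSD typical share of RAM bandwidth: {ps5_typical_share:.1%}")
print(f"PS5 SSD best-case share of RAM bandwidth: {ps5_best_share:.1%}")
print(f"XSX RAM bandwidth advantage: {ram_delta:.0f} GB/s")
```

With these figures, SSD streaming takes roughly 2% of the PS5's RAM bandwidth in the typical case and about 5% in the best case.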

Who knew a 3.1 GB/s gap in SSD speed would be the holy grail of next-gen gaming.

What if you need to drop the power consumption by more than 10%? What if the customer wants to have a consistent experience for the gamer? Wouldn’t he design the game based on the lowest possible clock under all circumstances?

When it's all you've got, you cling to it as if your life depends on it. PS5's SSD speed is Xbox One's CPU clock speed + ESRAM. Everybody knows it won't do anything, but half a year of make-believe and seven years of suffering are better than seven and a half years of suffering. The roles have truly, completely turned around from 2013. When the PS5 ends up being 499 against a 399 XSX because of an expensive and useless SSD, eyeballs will melt. The PS5 SSD is basically this gen's Kinect: a great idea that will get barely any use and just make the console more expensive.

And funnily enough, Microsoft implemented ESRAM in the Xbox One to make up for the lack of memory bandwidth, and now Sony does the same with the PS5. Just another piece of the puzzle that will make the PS5 more expensive while being less powerful.

Btw, Michael (NXG) has been working as a software and hardware engineer for a long time. Surely he knows something, better than the rest of us here. Cerny himself said that only a couple of percent of frequency drop is needed when the system hits its set power limit, and that such a tiny drop claws back a huge amount of power (10%). Based on that, NXGamer has calculated around 50 MHz.

If that were the case, they would just go with fixed clocks. Makes no sense to have a variable rate with such minor changes. Which means he is wrong.