Handy Fake

Member

Ah kna man. Bloody Mags telling wuh Mackems the obvious.

That's the Epic dev saying they can run that UE5 demo at 60 FPS but with less detail, and it's tuneable with knobs.

I'd say games designed with the next-gen consoles' SSDs in mind will completely eliminate pop-in. WD2's engine is still current-gen. I too think this is bs.

It has pop-in, which most probably shouldn't be a thing on an SSD, so it must be bs.

True. That's correct, it's not the same as Steam's FPS indicator; the one in the vid looks different to Steam's.

Oh absolutely, I usually tend to see more debug information on consoles if they're showing anything more than the standard user interface, rather than just the FPS in the corner. It's been a while since I used the FPS indicator in Steam Overlay, but is that not the same design (font, size etc.) as the Steam Overlay?

Does that last tweet from Sweeney imply the UE5 demo uses Kraken to get more than 5.5GB/s of bandwidth for streaming? Or would it use it anyway, even if it's less than 5.5GB/s? More than 5.5GB/s would make sense considering it uses 8K textures. If so, then it's most likely that other next-gen platforms will use 4K textures; I expect that will be the main difference between them.

The engine is fully scalable, so not only can one scale back and/or disable features for old hardware, one can also tweak settings to optimize performance.

That's the Epic dev saying they can run that UE5 demo at 60 FPS but with less detail, and it's tuneable with knobs.

Wild prediction: Gran Turismo where the gameplay is ‘photo mode’ quality and can pass for real life footage

The franchise is a joke. Part 1 was ok, Part 2 trash and the upcoming looks na as well.

"We are starting by boosting performance on top 100 PS4 games"

Proceed to boost performance on Watch Dogs 2

LOL

/jk

Edit: PC version then. The video itself is the joke.

www.windowscentral.com

I welcome this wholeheartedly. More choices, the better.

The question is whether graphical performance settings are going to somehow become standard on consoles next gen.

The Epic developer was talking about providing those knobs for developers, not gamers.

I welcome this wholeheartedly. More choices, the better.

I like how the whole conversation shifted from Teraflops to the SSDs, smart call by Sony, that demo shifted the whole conversation to the strongest point in their hardware.

Even Xbox fanboys are talking about the SSD trying so hard to downplay it they literally forgot about the Teraflops (at least from what I'm seeing)

Smart f**** Sony

I'm not talking about the marketing but the narrative, to be more accurate. The narrative in the past few months was about Tflops; now it's about the SSDs. No matter how we spin it, that was a smart move from Sony or Epic to shift that stupid narrative to what's really going to matter next gen.

It's not smart marketing. This SSD, the coherency, the cache scrubbers and the fast-and-narrow approach to attacking IO latency have been planned meticulously for 3 years or more.

It was bold. I can imagine Cerny saying "hey, I don't want more CUs and flops, I want IO silicon to do this and this" and people looking at him in bemusement: but why?

Let's face it, everybody thought he had gone crazy in the GDC speech, like what on earth. Underpowered, overheated, what's going on...

I can imagine Cerny smiling underneath, thinking just you wait, not long now.....

It's not just the intelligence, it's the will and tenacity to see it through. I am impressed as F.

It's a paradigm shift in concepts, I love it.

I wonder what he means by lower detail. Lower detail on assets? Going back to the older downscaled rendering method? Or just dropping resolution to 1080p, which would be meaningless if the image can be that clean.

Maybe, but they can also run that demo at 60 FPS with less detail on PS5, so the SSD speed and, more importantly, the latency/coherency are still necessary to double the frame rate, is my take.

if every single frame the engine is streaming in new tris, that means we could take the per frame poly count (20 mill) x frame rate (30) and get 600 mill tris of unique geometry per second. Find out how much data an 8k asset takes per million tris and you could get a fairly good estimate of the data throughput required for this demo.

Or I could be horribly wrong doing some bro math in which case please dismiss.
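For what it's worth, the bro math above is easy to write down as a rough sketch. Only the 20 million tris per frame and the 30 fps come from the post; the bytes-per-triangle values are made-up placeholders, since nobody in the thread knows the real figure, so the GB/s numbers only show how the estimate scales.

```python
# Back-of-envelope sketch of the estimate above.
# Only 20M tris/frame and 30 fps come from the thread;
# the bytes-per-triangle values below are hypothetical placeholders.

def unique_tris_per_second(tris_per_frame: int, fps: int) -> int:
    """Worst case: every triangle drawn in a frame is freshly streamed in."""
    return tris_per_frame * fps


def throughput_gb_per_s(tris_per_second: int, bytes_per_tri: float) -> float:
    """Data rate in GB/s (1 GB = 1e9 bytes) for a given per-triangle cost."""
    return tris_per_second * bytes_per_tri / 1e9


tris = unique_tris_per_second(20_000_000, 30)
print(f"{tris:,} unique tris per second")

# Hypothetical per-triangle sizes, purely to show how the result scales.
for bytes_per_tri in (1, 4, 16):
    rate = throughput_gb_per_s(tris, bytes_per_tri)
    print(f"{bytes_per_tri:>2} bytes/tri -> {rate:.1f} GB/s")
```

Note this is the worst case where nothing is reused between frames. In practice most of the geometry on screen would carry over from one frame to the next, so the real streaming rate should be well below this.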

The thing is that Nanite has to crunch 1 billion triangles per frame into those 20 million. So unless it is pre-crunched in some data structure on the SSD, you need that billion triangles to be streamed from the SSD to the GPU so that Nanite can do its crunching.

Let's see how that's gonna reflect on their sales. They already sold the narrative of Tflops when they launched the Xbox One X but it didn't help them. Let's see if they can change that with their games.

I don’t think it’s about being smart or not. Microsoft got ahead of the narrative and sold their vision for next gen, and people latched onto the number they understand, and that’s 12 Teraflops. Why? Because they believe that was what set apart the PS4 and the Xbox One, therefore it stands to reason in the mind of the uneducated that it’s as simple as that. 12 teraflops, the end.

Except it’s not, and the point of hardware evolving is to break barriers and create new paths. People just got so used to the idea of more of the same because of PS3/360 -> PS4/Xbox One. They got so used to it that now even when big time developers talk about the breakthroughs and how revolutionary they are, you get an army of posters who go to war against that.

Here’s the kicker though: Xbox Studios and MS itself have put more focus on Velocity Architecture and the CPU than they have on the 12 TF of power angle. That’s mostly on the fanboys and shills, fed hook, line and sinker. And right now it doesn’t take a genius to understand why.

The statues were identical, but all the rocks in the demo had similar complexity, with millions of polygons per asset. At the point of the demo when they said 1 billion polygons crunched into 20 million there was a cave with varied assets, not the 500 statues.

It's hard to say how much data was "crunched" in this demo, especially since they used an army of the same model. If each model had been different, it seems like that would have caused more data overhead.

My biggest question regarding the UE5 demo is how much of this detail can be maintained at 90 or 120fps. VR will be very compelling on PS5 if they can keep a lot of this detail in place. No more PS3 looking VR games, hopefully.

8K textures used in the demo seem needlessly excessive, and essentially just there to prove a point about how much throughput is required to do so.

His words were lower QUALITY at 60 FPS, so quality must mean they toned down the 8K to something like 4K assets, or it means they ran at 1080p60. Input or output quality, we do not know.

If the answer is the former, and lower than 8K assets can enable 60 FPS, that is the way forward imo.

8K textures used in the demo seems needlessly excessive, and essentially just to prove a point of how much throughput is required to do so.

Actual games will be much smarter about this.

they'll never give up

We don't know if that technology allows instancing of objects, or whether they need to be baked into geometry data for each instance. For some reason I feel like it will have similar limitations to the ones id Software's MegaTexture had.

The statues were identical, but all the rocks in the demo had similar complexity, with millions of polygons per asset. At the point of the demo when they said 1 billion polygons crunched into 20 million there was a cave with varied assets, not the 500 statues.

What do you mean baked? They said you can use the raw ZBrush and Quixel scan assets in your game without normal maps or LODs. This particular demo apparently made heavy use of raw Quixel photogrammetry assets, and there was a wide variety of assets.

We don't know if that technology allows instancing of objects, or whether they need to be baked into geometry data for each instance. For some reason I feel like it will have similar limitations to the ones id Software's MegaTexture had.

It's obviously not saved as raw ZBrush files. It needs to be saved into some sort of tree-like structure which allows the renderer to quickly grab only the polygons which are needed for display. And there is the question of whether that structure will allow references to a single model, or whether every instance will need to have its own data in that tree. The first solution would have a lot of problems with, for example, overlapping models.

What do you mean baked? They said you can use the raw ZBrush and Quixel scan assets in your game without normal maps or LODs. This particular demo apparently made heavy use of raw Quixel photogrammetry assets, and there was a wide variety of assets.
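To make the "tree-like structure" and the "references to a single model" idea a bit more concrete, here is a toy sketch. It is not how Nanite stores anything (nobody in the thread knows that); `ClusterNode`, `Instance`, `select_cut` and all the numbers are hypothetical names and values made up for illustration. Each node is a simplified version of the geometry below it, and the renderer only descends when the coarse version would look too wrong at the current distance.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    """One level of detail: coarse nodes near the root, fine ones at the leaves."""
    tri_count: int
    error: float  # hypothetical geometric error of this level, in world units
    children: list[ClusterNode] = field(default_factory=list)


@dataclass
class Instance:
    """A placed copy of a model; it points at a shared tree instead of copying it."""
    tree: ClusterNode
    distance: float  # distance from the camera, in world units


def select_cut(node: ClusterNode, distance: float, threshold: float) -> list[ClusterNode]:
    """Pick the nodes to draw: descend only while the projected error is too big."""
    projected = node.error / max(distance, 1e-6)
    if projected <= threshold or not node.children:
        return [node]
    selected: list[ClusterNode] = []
    for child in node.children:
        selected.extend(select_cut(child, distance, threshold))
    return selected


# One statue: a 10k-tri coarse root over four 250k-tri detailed pieces.
statue = ClusterNode(10_000, 0.5, [ClusterNode(250_000, 0.0) for _ in range(4)])

# Two instances referencing the same tree, one close and one far away.
near = Instance(statue, distance=2.0)
far = Instance(statue, distance=100.0)

for inst in (near, far):
    cut = select_cut(inst.tree, inst.distance, threshold=0.01)
    print(inst.distance, sum(n.tri_count for n in cut))
```

In this toy version the two statues share one tree and only differ in how deep the renderer walks it, which is the "references to a single model" option. It does nothing about the overlapping-models problem raised above.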

The magic bullet for VR is eye-tracking with foveated rendering. Sony's patents suggest it’s an active area of research for them.

Once a game knows where a player’s fovea is pointing, VR can become better quality than TV. That isn’t an exaggeration, either.

AI upscaling like DLSS is all the rage at the moment, as are other forms of intelligent image construction. Guess what? Your brain already does this for free while adding no extra watts on the APU! Your literal blind-spot is filled in by your brain making stuff up. Pretty much everything outside of your fovea is simulated in your head outside of significant movement and large visual structures.

It’s something people aren’t really aware of unless they start playing around with magic tricks and learning about human fallibility.

Most illusion tricks and sleight of hand are done by pinning an audience's fovea where you need it at certain times.

A lot of vision relies on assumption. A lot of the human experience relies on assumption.

Even the effect of moving your eyes to look somewhere else is hidden from you by the simulation your brain makes. It’s why there's that illusion where you look at a clock and the first tick of the second hand seems to take a lot longer than the subsequent ones. Your experience of vision is literally turned off until the eye is back on target, and your brain time-shifts the experience to make it seem seamless.

For VR this means although you need to render a scene twice (recent improvements by Nvidia remove most of the CPU overhead for this) you only need to render what’s under your fovea at high resolution and with any kind of expensive filtering.

TVs cannot do this, they don’t know where you’re looking.

This means that a VR headset could have 8K resolution or more, and as long as it can track your eyes and has low enough latency it might only need to render a patch of it at “8K quality”, and the rest progressively lower down to barely anything, and you’d never know. Dart your eyes around all you want and it’s sharper even than a TV, with perfect filtering, and rendering costs comparable with (even lower at points than) a 4K TV where every inch of it is at full detail, resolution and filtering.
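A rough way to put numbers on that claim, as a sketch only. The 8K (7680x4320) and 4K (3840x2160) pixel counts are standard; the three zones and their shading rates are assumptions picked for illustration, not measurements from any headset or anything Sony has described.

```python
# Sketch: pixels actually shaded on a foveated 8K panel vs. a fully shaded 4K TV.
# The zone sizes and shading rates are hypothetical, chosen only to illustrate the idea.

def shaded_pixels(width: int, height: int, zones: list[tuple[float, float]]) -> float:
    """zones is a list of (fraction of screen area, fraction of pixels shaded there)."""
    total_area = sum(area for area, _ in zones)
    if abs(total_area - 1.0) > 1e-9:
        raise ValueError("zone areas must cover the whole screen")
    return sum(width * height * area * rate for area, rate in zones)


# Assumed zones: fovea at full rate, a mid ring at 1/4 rate, periphery at 1/16 rate.
foveated_zones = [(0.05, 1.0), (0.15, 1 / 4), (0.80, 1 / 16)]

foveated_8k = shaded_pixels(7680, 4320, foveated_zones)
full_4k = shaded_pixels(3840, 2160, [(1.0, 1.0)])

print(f"foveated 8K: {foveated_8k:,.0f} shaded pixels")
print(f"full 4K:     {full_4k:,.0f} shaded pixels")
print(f"ratio:       {foveated_8k / full_4k:.2f}")
```

With these made-up zones the foveated 8K image shades fewer pixels than the plain 4K one, which is the point being argued. Change the zone sizes and the conclusion can flip, and the cost of rendering the scene for two eyes isn't modelled here at all.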

Expect future VR to pull well ahead of TV quality and performance.

Cannot wait to see where Sony go with it. This is the kind of next-gen I want. Not the same old formula at higher resolutions and frame-rates.

I want new worlds and experiences, not sharper versions of the same worlds and experiences.

Imagine how good Iron Man could be flying around a city with PS5 IO. Or the next Spider-Man. I want him to go faster and for there to be streets that span the city in length. I don’t want the same carefully designed maze with slow floaty swinging but now with sharper edges and shinier effects.

Faster IO and VR is the next-gen I’m most excited about.

Yup, hence the demo running at 60 FPS with 4K assets (or whatever the scaling is) seems a better way forward. It still requires the same high-speed streaming SSD and delivery, as you're working on smaller assets but at twice the frame rate.

Got to admit, the CGI-quality assets made a strong point.

I’m starting to think the assets are what Sony was referring to when mentioning 8K support.

That's the whole point of the PS5 SSD: to stream the highest quality assets without creating LODs, to cut dev time. It's like 2 hits with one shot (highest possible detail and reduced dev time). Don't the Pro and One X already use 4K textures in some games?

8K textures used in the demo seem needlessly excessive, and essentially just there to prove a point about how much throughput is required to do so.

Actual games will be much smarter about this.

Every step you make, every move you make

I'll be loading you....

I'll show myself the door don't worry.

Ahhh come on... mine was innocent.

I thought it didn't use RT at all.

But that demo uses very little RT in Lumen... so I don’t know:

Sure. But those moments could require replacing most of the assets in memory. It should take less than 2 seconds to swap all VRAM content, and those corridor sections are more or less that long. It's still a lot better than a loading screen.

Isn’t every step you take a loading section?
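Quick sanity check on the "less than 2 seconds" figure, as a sketch. The 5.5GB/s raw rate is the number mentioned earlier in the thread; the 16GB memory pool and the roughly 9GB/s rate with compression are assumptions I'm plugging in, and not all of that memory would be available to a game anyway.

```python
# Sketch: time to refill the whole memory pool from the SSD.
# 5.5 GB/s is the raw figure quoted in the thread; 16 GB and 9 GB/s are assumptions.

def swap_seconds(memory_gb: float, bandwidth_gb_per_s: float) -> float:
    """Seconds needed to stream memory_gb of data at a sustained bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return memory_gb / bandwidth_gb_per_s


ASSUMED_MEMORY_GB = 16.0  # assumption, not stated in the post

for label, rate in (("raw", 5.5), ("with compression (assumed)", 9.0)):
    print(f"{label}: {swap_seconds(ASSUMED_MEMORY_GB, rate):.2f} s")
```

So under these assumptions the "under 2 seconds" claim only holds with compression helping; at the raw rate a full swap is closer to 3 seconds. Swapping "most" rather than all of the assets would bring it down further.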

PS5 SSD Is 'Far Ahead' of High-End PCs, Epic Games CEO Says - IGN

The PlayStation 5 breakthroughs on SSD and storage are ahead of any PC alternatives.

www.ign.com

On a slightly tangential note, I'd love to see No Man's Sky II on this engine. I wonder how it would work with procedural generation.

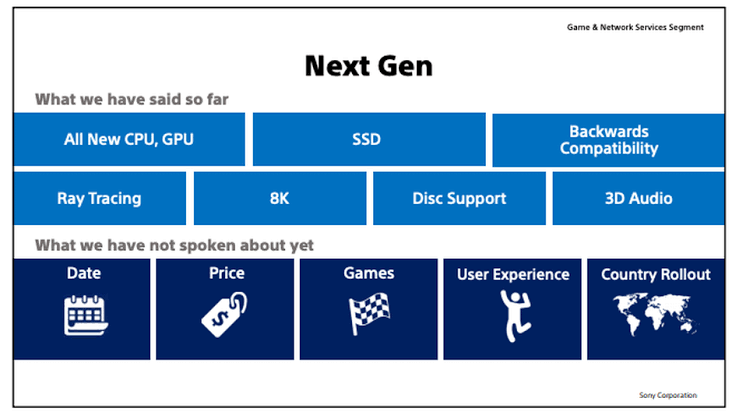

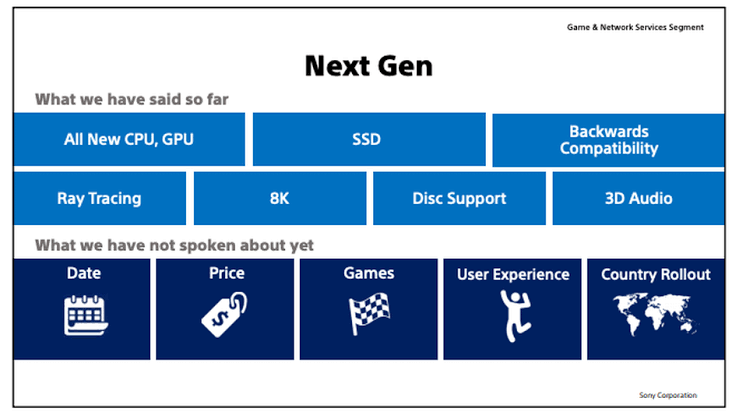

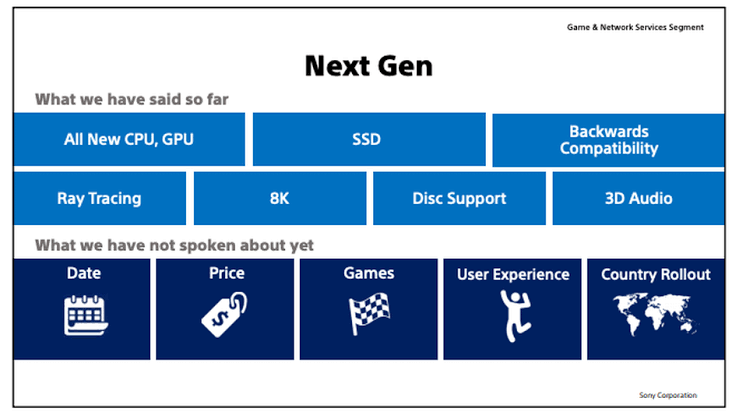

By user experience do you mean like showing the UI and how to change certain settings and the storefronts and stuff? I love seeing new UIs, don't know why.

I’m starting to think the assets are what Sony was referring to when mentioning 8K support.

The asset streaming alone would make it incredibly stable.

I think No Man's Sky on PS5 would be INSANE

How different would the narrative have been the last 2 months if Epic had shown the demo alongside Cerny’s presentation at GDC as planned? Sony really needed to demonstrate why traditional metrics won’t define this generation. The PS5 technical dive along with the demo would have sent a clear message. I think Sony planned it right, but Covid derailed the messaging.

I was on the poor messaging bandwagon, but if things had gone as planned we would have been drooling for the last 2 months in anticipation of the June event. I’m going to give them a pass on the lack of information; I think the plan was in place.

Or someone's been playing with AI. I felt a bit weird when I saw this first.

I'd say that's just 60fps PC footage.

Have you by any chance read Peter Watts' books? He explores the ideas of perception and consciousness in a very engaging way. I also found this very interesting since DualSense is getting a heartbeat sensor.

Most illusion tricks and sleight of hand are done by pinning an audience's fovea where you need it at certain times.
