Digital Foundry about XSX teraflops advantage : It's kinda all blowing up in the face of Xbox Series X

- Thread starter Bernoulli

DJ12

Member

It's no anti-Sony drivel at all; it has to do with maintaining multiple systems. It's a prime example of MS bashing, to be honest, and totally unnecessary. I only use it to get my point across, as both Nintendo and Sony don't really operate this way (yet).

The other side is that you maintain one software stack, but as a developer you probably can't put enough energy into it to really tap into the power of the different devices, because you are also developing for other systems.

Again, if MS are going down this route, it's on them if there are Xbox optimisations not being used, not the devs.

MasterCornholio

Member

Again, if MS are going down this route its on them if there's xbox optimisations not being used, not devs

I believe that's a very good point. Not only is it important to make a system powerful, but it also needs to be easy to use. For example, if it takes a massive effort on the devs' part for the XSX to destroy the PS5, then Microsoft is to blame for this. You really can't expect devs to make a huge effort so the XSX can destroy the PS5 in multiplat comparisons.

Darkness_Arises

Member

What do you mean "it's faster at 'placing' pixels on the screen"? Is the Xbox One S 11% faster at "placing pixels on the screen" because it has a higher clock frequency?

It is as if we said that Xbox Series X is 44% more powerful at 'drawing' graphics because it has 44% more compute units. Graphics cards don't work or compare that way. TFLOPs are not the only measure, I agree with that.

We can take as an example an AMD 6700XT (40CU/2581 MHz) vs a 6800 (60CU/2105 MHz). There are more differences (texture units, ROPs...), but the gap is big. I don't want to say that this is the difference between PS5 and Xbox Series X (PS5 does not have more texture units or more ROPs; it is just clocked faster, but there is a clear CU difference). When two GPUs reach the same "point" (TFLOPs), the slower-clocked one with more CUs generally has higher performance. And these two aren't even at the same point: XSX is more powerful with the same architecture and very similar specifications.

Developers don't care that one console may have slightly higher resolutions than another. XSX generally seems to have an advantage when dynamic resolution is involved, and its resolutions are often higher, but what a developer especially values is having lots of memory, ease of use, and an API that does not cause CPU overhead, and in that sense PS5 (or its API) has done its homework better. That is not a negative for PlayStation, quite the opposite. It seems they have done a better job and that effort has been rewarded, although I still think that being the "dominant" platform also has an influence.

Even with PS4 Pro, which was worse in every technical aspect than One X, the tools, the optimization process and the lead platform also influenced the final result.

On the other hand, I notice a certain tone of mockery in your response. I would appreciate more courtesy. I am always open to debate, but with respect.

It literally has a 22% better pixel fillrate than the XSX. What are you playing at? The XOS and XSS have a better pixel fillrate than the PS5, are you that dense?

Killjoy-NL

Member

Isn't it basically:

Xbox more brute power, but bottlenecked

Vs

PS5 less power, less bottlenecks + constant boost-mode

Resulting in similar performance?

This whole discussion is pointless anyway, because we have the actual results and there is barely any noticeable difference between either system.

I'd actually like to argue that the tech-experts in here are almost just as clueless as DF.

(Assuming DF isn't in bed with MS and still spreading FUD and damagecontrolling for Xbox).

Tripolygon

Banned

What do you mean "it's faster at 'placing' pixels on the screen"? Is the Xbox One S 11% faster at "placing pixels on the screen" because it has a higher clock frequency?

That does not make sense. Having a higher clock without the accompanying hardware does not automatically make you faster, but having a higher clock with the accompanying hardware can make you faster than a system that has more hardware at a slower clock. Let's look at some examples below.

Pixel Fill rate

PS4: 32 x 0.800 = 25.60 GPixel/s

XOS: 16 x 0.914 =14.62 GPixel/s

Xbox One S has a 14% higher clock 914MHz vs PS4 800MHz but PS4 has 100% more ROPs 32 compared to XOS 16 ROPs. A higher clock can only take you so much without the backing hardware units. For XOS to have a higher pixel fill rate, they would have to clock 100% higher at 1.6GHz not 14% higher at 0.914GHz.

PS4 Pro: 64 x 0.900 = 58 GPixel/s

XOX: 32 x 1.172 = 38 GPixel/s

Xbox One X has a 30% faster clock but PS4 Pro has 100% more ROPs, which gives PS4 Pro a higher pixel fill rate than XOX even though XOX has the higher clock. It's the reverse of PS4 and XOS, with the twist that Sony added 32 more ROPs to PS4 Pro.

PS5: 64 x 2.23 = 142.72 GPixel/s

XSX: 64 x 1.825 = 116.8 GPixel/s

XSX and PS5 have an identical number of ROPs (64), but PS5 has a faster clock, which again gives PS5 a higher pixel fill rate than XSX. See what I'm getting at? All of Sony's consoles have a higher pixel fill rate than their Xbox counterparts, through more hardware and/or a higher clock.
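The arithmetic in the examples above is simply ROP count times clock. A minimal Python sketch of it, using only the ROP counts and clocks quoted in this post (the function and variable names are illustrative, and these are theoretical peaks, not measured results):

```python
# Peak pixel fill rate sketch: ROPs x GPU clock (GHz) = GPixel/s.
# ROP counts and clocks are the figures quoted in the post, not measurements.
CONSOLES = {
    "PS4": (32, 0.800),
    "XOS": (16, 0.914),
    "PS4 Pro": (64, 0.900),
    "XOX": (32, 1.172),
    "PS5": (64, 2.23),
    "XSX": (64, 1.825),
}


def pixel_fill_rate(rops, clock_ghz):
    """Theoretical peak pixel fill rate in GPixel/s."""
    return rops * clock_ghz


def advantage_pct(a, b):
    """How much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


rates = {name: pixel_fill_rate(*spec) for name, spec in CONSOLES.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.2f} GPixel/s")

print(f"PS5 over XSX: {advantage_pct(rates['PS5'], rates['XSX']):.1f}%")
```

With equal ROP counts the lead reduces to the clock ratio, which is where the roughly 22% figure for PS5 over XSX comes from.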

Let's look at the difference between PS4 Pro vs XOX and XSX vs PS5.

CPU

PS4 Pro: 2.1GHz

XOX: 2.3GHz, about 10% difference for XOX

PS5: 8 cores, 16 threads, 3.5GHz

XSX: 8 cores, 16 threads, 3.6GHz, about 3% difference for XSX

GPU Teraflops

PS4 Pro: 2304 x 0.900 x 2 = 4.15TF

XOX: 2560 x 1.172 x 2 = 6TF, about 45% difference for XOX

PS5: 10.28TF

XSX: 12TF, about 17% difference for XSX

RAM/Bandwidth

PS4 Pro: 8GB @ 217.6 GB/s

XOX: 12GB @ 326.4 GB/s, 50% difference for XOX

PS5: 16GB @ 448 GB/s, 33% difference for PS5 against XSX's 6GB pool

XSX: 10GB @ 560 GB/s and 6GB @ 336 GB/s, 25% difference for XSX on the 10GB pool

Triangle rasterization

PS4 Pro: 4 x 0.900 = 3.6 billion triangles/s

XOX: 4 x 1.172 = 4.7 billion triangles/s, about 30% difference for XOX

PS5: 4 x 2.23 = 8.92 BT/s, about 22% difference for PS5

XSX: 4 x 1.825 = 7.3 BT/s

Culling rate

PS4 Pro: 8 x 0.900 = 7.2 BT/s

XOX: 8 x 1.172 = 9.4 BT/s, about 30% difference for XOX

PS5: 8 x 2.23 = 17.84 BT/s, about 22% difference for PS5

XSX: 8 x 1.825 = 14.6 BT/s

Pixel fill rate

PS4 Pro: 64 x 0.900 = 58 GPixel/s, about 54% difference for Pro

XOX: 32 x 1.172 = 38 GPixel/s

PS5: 64 x 2.23 = 142.72 GPixel/s, about 22% difference for PS5

XSX: 64 x 1.825 = 116.8 GPixel/s

Texture fill rate

PS4 Pro: 144 x 0.900 = 130 GTexel/s

XOX: 160 x 1.172 = 188 GTexel/s, about 45% difference for XOX

PS5: 4 x 36 x 2.23 = 321.12 GTexel/s

XSX: 4 x 52 x 1.825 = 379.6 GTexel/s, about 18% difference for XSX

Literally everything is faster on XOX than on PS4 Pro except for pixel fill rate.

Almost everything is faster on PS5; the exceptions are texture fill rate and XSX's roughly 17% TF advantage.

Ray triangle intersection rate

PS5: 4 x 36 x 2.23 = 321.12 billion RTI/s

XSX: 4 x 52 x 1.825 = 379.6 billion RTI/s, about 18% difference for XSX
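For anyone who wants to re-derive the PS5 vs XSX figures, here is a rough Python sketch. It assumes 64 shaders per CU (consistent with the 2304 and 3328 shading units quoted elsewhere in this thread), 2 FLOPs per shader per clock, and 4 texture units per CU as in the list above. The class and function names are made up for illustration. Computed this way XSX comes out at about 12.15TF, which is usually rounded to 12.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    cus: int          # active compute units
    clock_ghz: float  # peak GPU clock
    rops: int         # colour ROPs

    def tflops(self):
        # Assumption: 64 shaders per CU, 2 FLOPs (one FMA) per shader per clock.
        return self.cus * 64 * 2 * self.clock_ghz / 1000.0

    def texel_rate(self):
        # Assumption: 4 texture units per CU, result in GTexel/s.
        return self.cus * 4 * self.clock_ghz

    def pixel_rate(self):
        # ROPs x clock, result in GPixel/s.
        return self.rops * self.clock_ghz


PS5 = Gpu("PS5", 36, 2.23, 64)
XSX = Gpu("XSX", 52, 1.825, 64)


def compare(metric):
    """Return (name of the leader, percent lead) for one peak metric."""
    a, b = metric(PS5), metric(XSX)
    leader = PS5.name if a > b else XSX.name
    return leader, (max(a, b) / min(a, b) - 1.0) * 100.0


for label, metric in [
    ("TFLOPs", Gpu.tflops),
    ("GTexel/s", Gpu.texel_rate),
    ("GPixel/s", Gpu.pixel_rate),
]:
    leader, pct = compare(metric)
    print(f"{label}: PS5 {metric(PS5):.2f} vs XSX {metric(XSX):.2f} -> {leader} +{pct:.1f}%")
```

Anything that scales with CU count favors XSX by about 18%, and anything that scales only with clock favors PS5 by about 22%. These are paper peaks; they say nothing about how a given engine actually uses the hardware.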

As you can see above, no amount of tools makes PS4 Pro more performant than One X. One X is more powerful than PS4 Pro in every way imaginable except for pixel fill rate, which mainly mattered for PS4 Pro's checkerboard rendering. That is not the case with PS5 and XSX: in some ways PS5 is more powerful and in others XSX is. Simply stating the number of CUs and teraflops does not tell you the whole story. Because of that, you will notice some games favor PS5 and others favor XSX. It's been 3 years now; it's time to let go of the tools narrative.

Lysandros

Member

Furthermore, 22% is only the difference in color ROP throughput; in the depth ROP department the difference is actually 122%, due to PS5 having twice the number of units there. It's somewhat reminiscent of the Xbox One vs PS4 situation. PS5 is also 22% ahead in fixed-function geometry throughput and triangle culling, among others.

Darkness_Arises

Member

True that, the person I was quoting seems to have a hard time grasping how GPUs work. Watch him write yet another long wall of text, ending in "both systems are equal but my precious X is slightly better". I have no idea why this lie is so important to him and the DF crew.

Mr.Phoenix

Member

Thank you, I couldn't have said it better myself.

This unfortunately is all something I fear a lot of people just will not get. It doesn't help that even DF has somehow not found it relevant to point these things out too. The gross ignorance of the general user base means that all anyone looks at are things like TF numbers.

I am personally tired of saying on these forums that TFs don't paint the whole picture, or that TFs make up just one of the ~7 parts of a GPU that contribute to each frame rendered on the screen.

Lysandros

Member

I can not agree on PS5 having "less power". What makes you say that after seeing the posts above explaining the power equation in detail?

So just to be clear, the narrative is when PS5 performs better, it means devs messed up the XSX version. And when XSX performs better, everything is as it should be?

I could tell you why a lot of what you said is so wrong (even though strangely based on facts), but seeing that the narrative above is what you are pushing, it means it would be too difficult or next to impossible to reason with you.

It's not what I said, but a nice way to discuss without arguing....

Chromium.Wings

Member

I mean - and I know NOTHING about hardware terminology - to my mind, if you're saying you are "more powerful but bottlenecks make you less powerful", then you're less powerful. You're only as strong as your weakest link, especially in the console space where you're effectively locked into those specs for 7 years.

That said, the boxes are so similar in performance I don't know why any of this shit actually matters. We see games coming out performing nearly identical, and looking essentially the same to the naked eye. What the hell else could matter?

Killjoy-NL

Member

Tbh that detailed breakdown came after my post.

In that post, it explains a lot better why they’re performing pretty much equally.

My understanding is limited, but what I said was the gist of what I understood from Road to PS5 and the whole discussion that was happening everywhere pre-launch.

MasterCornholio

Member

And don't forget. We got all these comparisons that show the differences between these two systems years after the Github misinterpretation. I'd say that trumps crazy switch theories IMO.

Vergil1992

Member

It literally has a 22% better pixel fillrate than the XSX. What are you playing at? The XOS and XSS have a better pixel fillrate than the PS5, are you that dense?

Man, you should relax. You are disrespecting me. Don't worry, they're just consoles. A healthy debate is always interesting, but if you choose to insult, nothing you say is of any interest.

However, I repeat: by your logic, we could say that XSX has 44% more CUs for graphics; XSX has 3328 shading units and PS5 has 2304. Taking the clock speed to say "it's X% better at this", and splitting that into multiple categories to make the PS5 GPU look better overall, is complete stupidity as a way of comparing two GPUs. That's why nobody does it, basically. To answer the other user: One X vs PS4 Pro proves my point precisely. One X was superior in practically everything compared to PS4 Pro, and it still had worse versions, or versions that came out with graphical problems.

Resident Evil 3, for example, was much more stable on PS4 Pro than on One X. Imagine this happening with a current release, where the resolution differences are narrower, where there is TAA, checkerboard rendering and on top of that FSR 2.0 (which greatly complicates the actual pixel count), or where there is more demanding post-processing that definitely reduces performance (RE4R on Series X) and that the comparison outlets cannot pin down. If something like RE3 on One X happened today, the argument would be that PS5 is better designed.

Cyberpunk also had similar visual quality on PS4 Pro and One X, and the framerate was better on the Sony machine. Several CODs also showed better performance on PS4 Pro. Monster Hunter and Borderlands had reduced vegetation on One X even though the hardware difference was huge. And yet, PS4 Pro enjoyed some better versions of cross-platform games when it was worse in all areas!

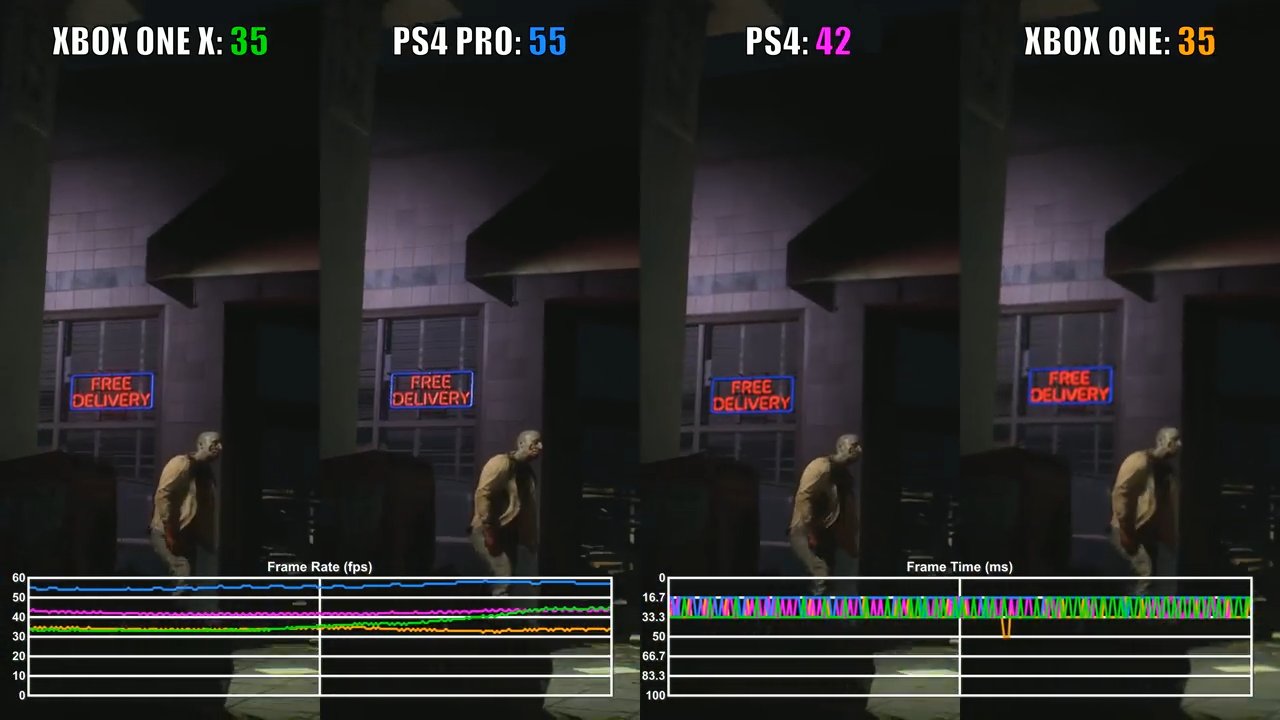

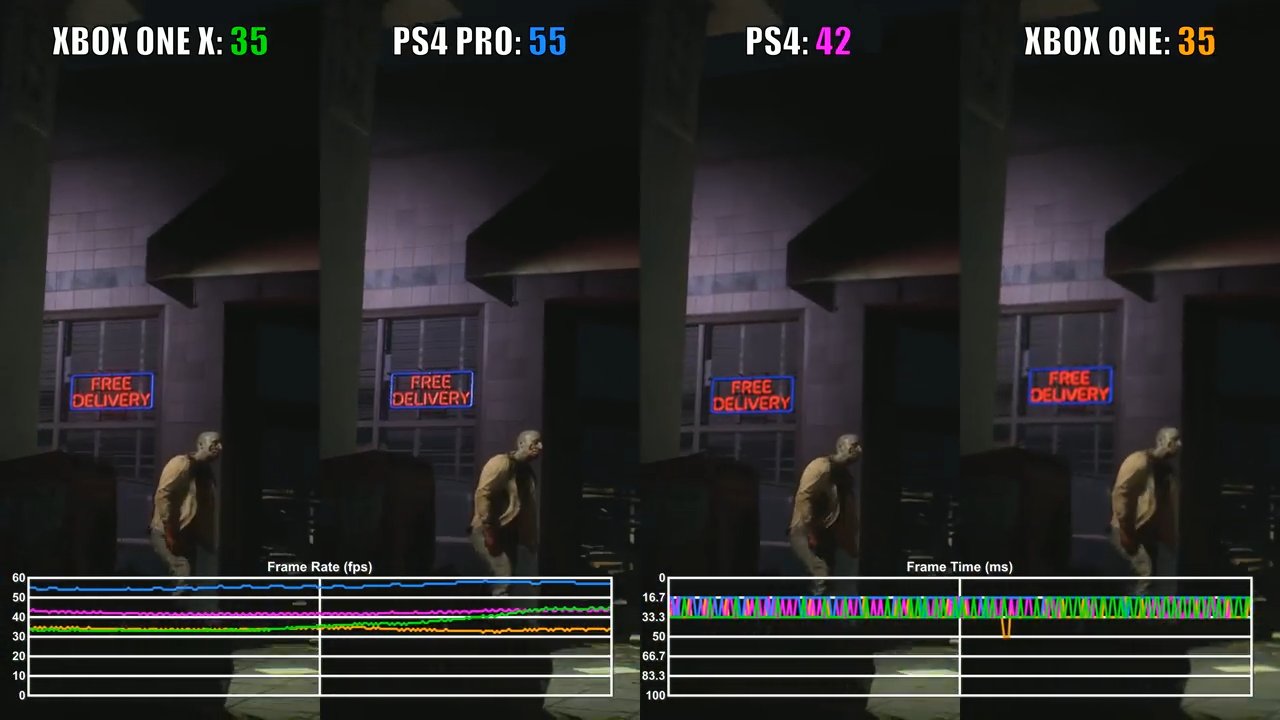

Borderlands 3 PS4 Pro vs One X:

I don't think it's necessary to point out that PS4 Pro had more vegetation than One X. And it had an inferior GPU in everything. Let's use the AMD 6600XT and the 6700 as a reference. They have 10 and 11 TFLOPs. Same ROPs. The 6700 has slightly more texture units. 32 CUs vs 36 CUs. The clock frequency is very similar (2359 MHz vs 2450 MHz). The difference is 1 TFLOP and especially the 4 CUs and the greater VRAM bandwidth. In short: there are small differences between them, the most notable being the greater bandwidth and the extra CUs of the 6700.

I would bet that a clock speed difference from 1.8GHz to 2.2GHz wouldn't erase a 44% difference in CUs.

But well, according to some users in this thread, the optimization process, the API, the lead development platform... none of it exists! And on top of that they bring up PS4 Pro vs One X, when it is the perfect example: many games ran better on PS4 Pro than on One X! And there the hardware completely favored One X. Even in that situation, it had some versions that ran worse.

That there is little difference between PS5 and XSX is logical: XSX is not as powerful relative to PS5 as One X was relative to PS4 Pro. One X's brute force was capable of overcoming all handicaps. XSX cannot do that, hence we see parity.
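The CU-versus-clock trade-off can be put into numbers with a small Python sketch. It assumes shader throughput scales linearly with CUs x clock and uses the 52 CU / 1.825GHz and 36 CU / 2.23GHz figures quoted earlier in the thread; the function names are illustrative only.

```python
def net_compute_ratio(cus_a, clock_a, cus_b, clock_b):
    """Ratio of peak shader throughput, assuming it scales with CUs x clock."""
    return (cus_a * clock_a) / (cus_b * clock_b)


def clock_to_match(cus_a, clock_a, cus_b):
    """Clock (GHz) that GPU B would need to equal GPU A's CUs x clock."""
    return cus_a * clock_a / cus_b


# XSX: 52 CUs at 1.825GHz; PS5: 36 CUs at 2.23GHz (figures quoted in the thread).
xsx_over_ps5 = net_compute_ratio(52, 1.825, 36, 2.23)
ps5_clock_needed = clock_to_match(52, 1.825, 36)

print(f"CU advantage alone: {(52 / 36 - 1) * 100:.0f}%")
print(f"Net compute advantage after clocks: {(xsx_over_ps5 - 1) * 100:.0f}%")
print(f"36 CUs would need about {ps5_clock_needed:.2f}GHz to match")
```

On paper the higher clock shrinks the 44% CU gap to roughly 18% but does not erase it. Whether that remainder shows up in games is a separate question.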

Mr Moose

Member


Vergil1992

Member

Irrelevant. In fact, I agree with it; on PS4 Pro they made sure that the performance was optimal from the beginning, and then updated the XSX version by decreasing its resolution.

In an era where there is TAA, DRS, FSR and temporal reconstruction, all in the same game, plus multiple updates, no one has the means to check how things stand after each update. There may be games that performed worse on Series X than on PS5 at launch and have since been brought level, but perhaps no one has checked. The pixel count may also have changed.

But the undeniable point is that PS4 Pro had several versions better than One X. Some were fixed (like RE3R) others weren't (Cyberpunk, some COD, Borderlands 3), and One X outperformed PS4 Pro in pretty much everything.

It is quite foolish to think that the API, the programming environment, the base platform of the development or simply that one is more advanced than the other in the process (Star Wars Jedi Survivor has taken half a year to be fixed, nowadays games are released and with a huge list of patches over months) do not influence anything in the development and optimization of a game.

If Series X were not slightly more powerful than PS5, I am quite sure it would lose 99% of the time; it would not have more games at higher resolutions, nor better performance in several games with heavy RT (Control, The Witcher 3).

In fact, I would love to know how RE4R works today. It had worse performance on XSX than on PS5. They downgraded the image to PS5 level and it gained 5-10fps and looks like it matched PS5 performance. Then they updated it saying that they would improve XSX's IQ... and nothing more was heard about it. It is not known which one looks better today, nor which one works faster.

Mr Moose

Member

Different engines will perform better/worse on each of the consoles. They both have their strengths and weaknesses. They are similar enough that it doesn't matter at the end of the day; we just use performance threads as a bit of console warring fun, but in the end there's rarely much of a difference between them.

Vergil1992

Member

Yes, I agree with you. What I do not share is the flat claim that the tools and programming environment, or one system selling significantly more than the other, have no consequence for the development and optimization of a game. PS5 has its strengths at the hardware level, especially in storage speed and its decompression system, but I don't think the XSX's GPU advantage is completely non-existent, as they say here. I think it is slightly more capable, but the difference is not enough to overcome certain handicaps. If a developer maximizes their efforts on PS5 and it is the lead version, having an additional 18% of power will not make a difference. Or maybe it does, and the game gets a higher resolution target, but if they don't optimize performance afterwards, well... what happens is what we've seen tons of times.

In any case, we are arguing here over differences that surely none of us could notice. If they didn't tell us that XSX uses VRS, we probably wouldn't notice. If one goes to 1008p and another to 1126p in a demanding area (for example, an intense gunfight) We probably wouldn't notice either.
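To put the 1008p vs 1126p example into numbers, here is a quick Python sketch. It assumes a 16:9 frame, which the post does not state, and the helper name is made up for illustration.

```python
def pixel_count(height, aspect=16 / 9):
    """Approximate pixels per frame for a vertical resolution (16:9 assumed)."""
    return height * height * aspect


low = pixel_count(1008)
high = pixel_count(1126)
extra_pct = (high / low - 1.0) * 100.0

print(f"1008p: about {low / 1e6:.2f} million pixels per frame")
print(f"1126p: about {high / 1e6:.2f} million pixels per frame")
print(f"1126p renders roughly {extra_pct:.0f}% more pixels")
```

Because pixel count grows with the square of the vertical resolution, a gap of about 12% in "p" is about 25% in pixels, which is still hard to see in motion once reconstruction is applied.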

But it is undeniable that there are certain indications that DF's theories (friendlier API on PS5, prioritizing PS5 for its sales, or that some developers are using DirectX12 without using Xbox's low-level tools) could be correct.

Mr Moose

Member

Could be a number of things, who knows? Series X has a slightly higher res in a lot of cases while PS5 has a slightly higher fps in a lot of cases. Could be down to CPU maybe? PS5 might offload more tasks from the CPU, freeing it up?

I'm not a dev and don't work on consoles, we'd need multiple devs to chime in and let us know why, DF don't know any more than we do.

Darkness_Arises

Member

Watch him write yet another long wall of text, ending in both systems are equal but my precious X is slightly better.

man, you should relax

I am quite relaxed, not writing essays defending the honor of my precious console or the DF crew. Well, I have put out the objective facts and IMO the systems trade blows as far as specs go. Anybody who says that the XSX is better is clearly high on copium or a shill. There is no point in going around in circles on this matter. The consoles and the games speak for themselves.

Vergil1992

Member

This is just a theory, but even though both CPUs are identical (I even think the XSX one is slightly faster), I think there is something that makes the XSX more difficult to program for.

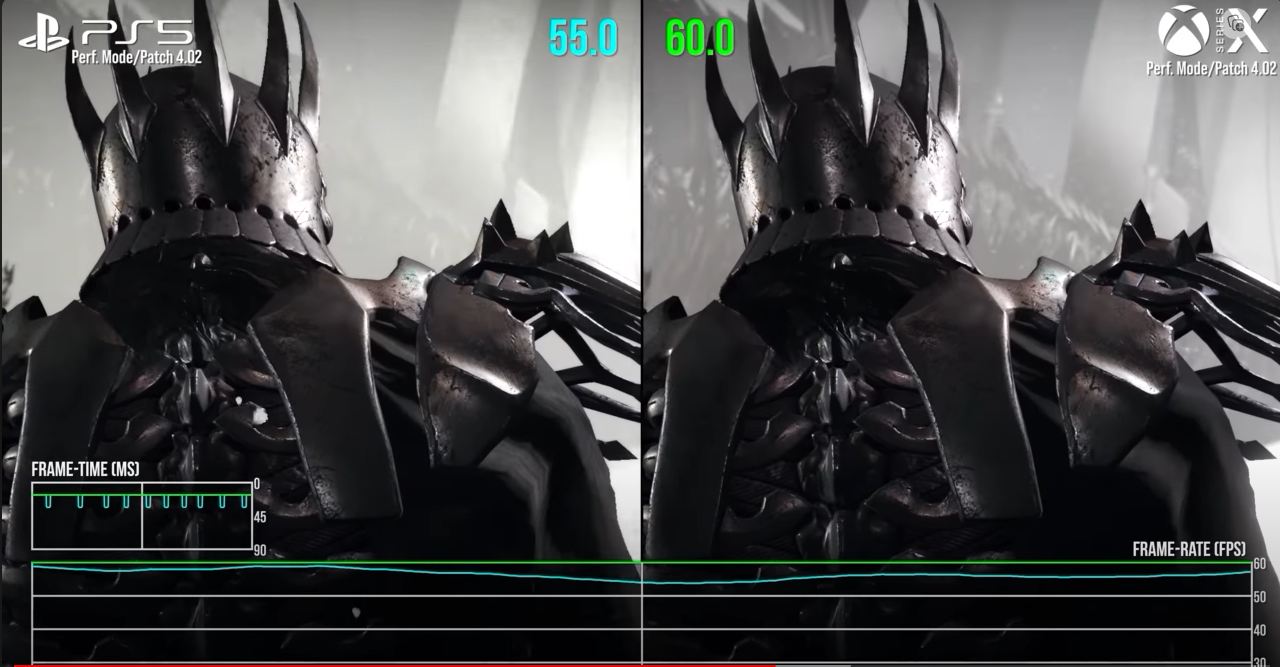

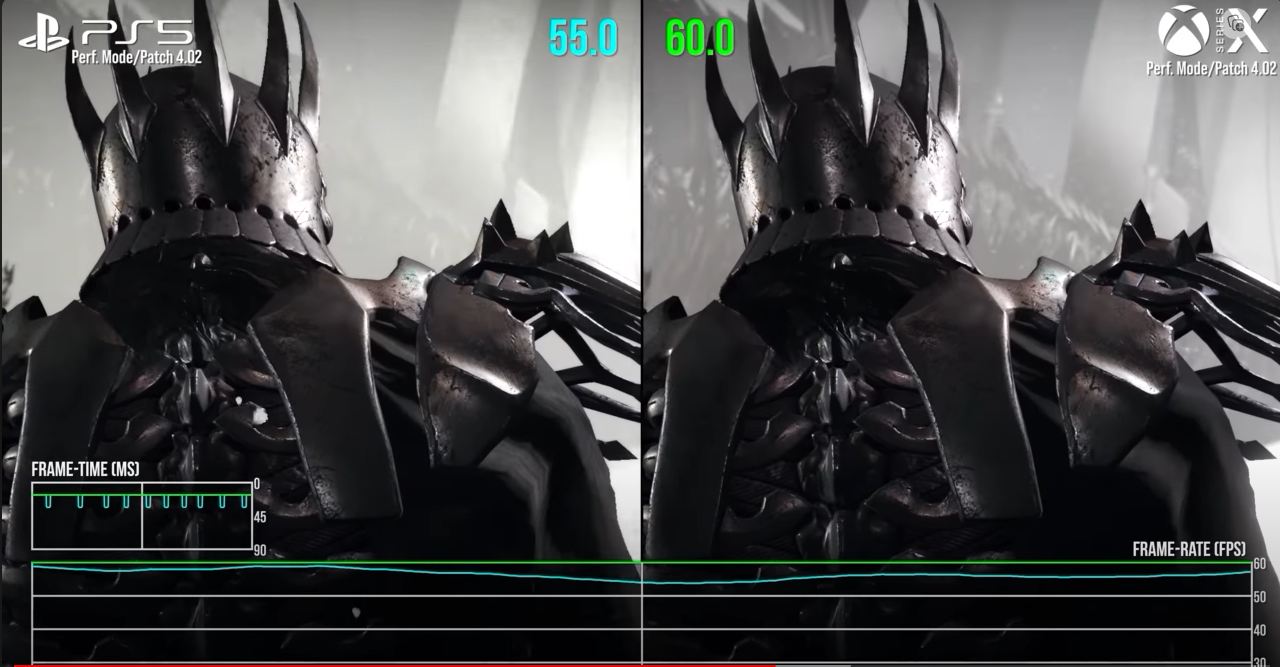

The best example is The Witcher 3, which when it launched its next-gen version, using DirectX12 in Novigrad, performance was poor on PC. A ryzen 3600 couldn't sustain a stable 60fps and had a lot of stuttering. PS5 and XSX also had a lot of drops, but especially XSX, it ran 5-10fps lower than PS5.

This was the difference:

Then they even worsened console performance:

On my PC with a Ryzen 5700X I also had occasional drops below 60fps (without RT), and if I unlocked the framerate, it was between 60-80 and had quite a bit of stuttering. When they released several updates, performance on PC was pretty good, up 20fps and a 3600 was capable of sustaining 60fps. And no stutter.

On consoles "coincidentally" it was also optimized a lot, and we reached a point where performance was practically a technical tie. PS5 was marginally better in cities (1-2fps) and XSX still held the slight advantage in cinematics.

*Although the images may be confusing, performance also improved on PS5. The captures are simply taken at different moments, to show that XSX matched PS5 performance in a CPU-limited area. Anyone can watch the video and see that PS5 also improved its performance, just not as much as XSX, which had started from a worse framerate.

In Ray Tracing mode it was always better on XSX. But the point is that on PC the CPU usage was terribly optimized, and when that aspect was updated and improved, PS5 and XSX benefited from it, but especially Xbox Series X.

My theory is that CPU-wise, PS5 is easier to optimize. What happens is that not all developers work as hard on their games as CD Projekt after launch, and neither do the media that make comparisons look at less relevant games. But I don't think CD Projekt made magic, and increased the performance of XSX so much. If that happens, it is because it is being underused/less optimized, there is not much mystery.

P.S.: Darkness_Arises, you are not relaxed, you are defensive.

DJ12

Member

Not pretending you're putting all this through Google translate anymore?

You've got to be a DF guy, judging by how deluded you are my guess is you're Alex.

Vergil1992

Member

I don't always use Google Translate, only when I'm not sure how to express some arguments. I can't be Alex, I'm not a big proponent of using Ray Tracing (especially on console or graphics other than 4080 or 4090).

DJ12

Member

That's exactly what you would say to convince people you aren't actually Alex.

Welcome back to the forum Alex.

BootsLoader

Banned

What kind of flops are we talking about?

Flopas? Big Flopas?

Die Namek Ability

Member

> That's exactly what you would say to convince people you aren't actually Alex.
>
> Welcome back to the forum Alex.

For someone who struggles with english enough to have to use Google translate, they sure seem to have their thoughts together and express them in a manner way beyond a typical English speaking member of this forum....hmmm

SlimySnake

Flashless at the Golden Globes

You perfectly know what ray tracing does to indirect lighting, so why are you acting as if you didn't? You're standing outside, seemingly at night time. The indirect lighting differences will be at their biggest in sections where sunlight cannot penetrate directly.

Here is an example of path traced vs raster during nighttime.

Raster looks pretty good, right? I bet some people thought the ray traced image was the one on the left. It's more moody and atmospheric but incorrect since there are bright fires burning all around the tent so it shouldn't be this dark. Still, raster looks really good.

However during daytime with the sun high...

Rasterization completely falls apart. I cannot stress enough how utterly ugly it is. I legitimately stopped playing the game back when I had my 2080 Ti because there were just too many instances where playing without ray tracing resulted in absolutely shit-ugly scenes like the one to the left that completely ruined the immersion. Unlike some other high-end games such as Ratchet & Clank or Horizon Forbidden West, Cyberpunk's texture quality is bad a lot of the time and combined with this terrible indirect lighting and shading, this makes the game look unsightly. And this isn't a cherry-picked screenshot. Go anywhere during daytime (shacks, tunnels, doorways, etc) with a lot of indirect lighting and you'll get a difference like this. Ray tracing, especially lighting, makes the game a lot more consistent visually. Try going to the Aldecaldos camp during daytime, enter every single tent, and turn ray tracing on/off and tell me you don't see a huge difference every single time. That's just lighting. If you add reflections into the mix (and Cyberpunk has many reflective surfaces), the shortcomings of rasterization are further exposed.

No points for guessing which image has rt reflection. Does this happen every time? No? Even 50% of the time? Perhaps not even but it does happen often enough to make you not want to turn back to rasterized lighting and reflections. CDPR does a pretty damn good job with whatever techniques they're using to approximate the interactions of light sources. In some cases, it's damn near indistinguishable from ray tracing. The claim that 95% of the time the difference is barely perceptible is complete bullshit. That might apply to path traced vs ray traced (and even then, I'd disagree) but rasterization vs path traced? Just no. I'm also not a ray tracing shill like Alex Battaglia is. He really undersold the artifacts brought about by ray reconstruction. The game becomes uglier as a result. I'll take noisier reflections over all that smearing any day. It's seriously ghosting galore. NPCs faces are blurred. Every time they move, you stop being able to make out their features. When they walk past an indirect source of light, their entire body leaves a ghosting trail. Cars and car headlights smear the entire screen. It's shite in motion but looks great in screenshots.

Sorry for the derailment and long-winded post but coming from you this is just unacceptable. You know better than this.

This was my reaction after reading your post.

Dude you know me too well to know how much ive stanned for rt reflections. thats literally the only thing i want implemented from the RT feature suite. though not in every game. medieval games set in the wilderness dont need ray tracing for the odd body of water you might encounter every now and then. I mean i was literally at my brother's house showing him ray traced before and after reflections in spiderman just yesterday. I literally play this game with RT reflections on and everything else off. RT reflections are NOT path tracing. Consoles can do rt reflections just fine. i can probably list over 20 games that do this.

but Cyberpunk's failures with indirect lighting is not proof that path tracing has a giant, staggering and massive advantage over standard rasterization. thats on cd project's artists and their engine for not getting it right. Secondly, and most importantly, you dont NEED path tracing for better indirect lighting. you dont NEED to spend 4-5x more gpu resources to get it right. And thats my problem with richard's statement. he's acting like path tracing is the future when matrix fucking looks better and cd project themselves are switching to UE5. So path tracing will be a thing of the past even in cd project's games going forward.

You literally said that this applies to shacks and huts and then say my 95% claim is complete bullshit. Come on, i literally spent days driving around town which makes up 95% of the cyberpunk world. day time, night time, it doesnt matter. Its great that indoors the difference is more obvious but i go back and forth playing starfield and cyberpunk and starfield's realtime GI is able to handle indoor lighting way better. you dont NEED path tracing when consoles finally have enough raw gpu power to do realtime GI.

And consoles CAN do RTGI as shown by Avatar which is using RTGI, RT Reflections and RT shadows in the 30 fps modes in PS5. and RTGI in the 60 fps mode which looks worse but it does do RTGI even at 60 fps. Wtf more does Richard want from consoles? And he doesnt want the PS5 Pro which will do even better ray tracing. I dont fucking get him.

Anyway, here are some more comparisons i took. Yes, they are not from shacks and huts you want me to look at but i just drive around taking in the sights so this is my experience 95% of the time. The last one is actually a comparison i posted because i thought the difference was massive between even RTGI and Path Tracing, but since then i have not been able to get something that noticeable. And even then, its mostly better distant shadows. Something other engines can do without having to rely upon path tracing.

Here is a gif i posted from the hardware unboxed review of path tracing. this is by far the biggest and best example of just how much path tracing can elevate the visuals, but notice how it's mostly shadows that are affected and notice how their engine cant even get RTGI and RT shadows to properly light up the ceiling and apply accurate shadows. the issue is with their engine not being able to do proper indirect lighting, not with standard rasterization or rtgi. So using this as some kind of gold standard for games is ridiculous.

Here are other games, all of them non RT that handle better indirect lighting than cyberpunk.

Bojji

Member

Games you posted are completely linear, with static time of day. Lighting is baked.

Games like that don't need any advanced forms of real time GI to look great.

There is no comparison to open world cyberpunk that needs path tracing or RTGI to do convincing lighting. I'm playing right now with path tracing and frame gen (4070ti) and the difference is big enough that I don't give a fuck about the higher framerate of the standard RT mode.

SlimySnake

Flashless at the Golden Globes

you can compare it to matrix, avatar, starfield if you want. realtime gi is enough. RTGI is enough. hardware accelerated lumen is enough.

you dont need path tracing that will completely tank framerates even on a 60 tflops 4090 if you try to run it without dlss.

What you are seeing is devs doing the bare minimum and blaming it on standard rasterization. no offense but this is trash, and proof that cd projekt and remedy fucked up their base lighting model. not an indictment of standard rasterization or proof that path tracing is a necessity for next gen.

Matrix awakens has tall buildings blocking sunlight so 90% of the street level is lit by indirect lighting anyway. seems to do fine.

Underpass indirect lighting? No problem.

Avatar is using RTGI with fewer probes/rays and looks stunning even on PS5. Would a path tracing version look even better? yes. is the difference staggering? no. is it necessary on next gen xbox? fuck no. would i have MS make a 50 tflops console and waste 40 of those tflops rendering path tracing instead of upping asset quality, character models, destruction and physics? absolutely fucking not.

here is starfield. indoors, but look at the gi light bounce on her character model as she moves between different light sources. Bethesda didnt need path tracing to do shadows correctly.

Bojji

Member

I completely agree that RTGI could be enough for lighting in open world games and probably cheaper than full PT. But so far we only have Metro that does that really.

I don't get why CDPR didn't include RTGI in Cyberpunk when Witcher 3 has it. On the other hand, maybe with RT reflections, shadows and RTAO - RTGI is really not much (or at all) cheaper than PT that does those things all in one.

I don't agree with Starfield, there is nothing special about it in my opinion. Starfield has relatively good AO and a lot of shadow casting lights in closed spaces but nothing really impressive in 2023, especially in "open world" locations.

Now Lumen... in Matrix it's really impressive and very close to PT in quality in my opinion, it was hardware accelerated there. But so far games with Lumen aren't that impressive, Immortals looks mediocre and Fort Solis has a lot of noise and artifacts in lighting, it should have an option for the hardware variant of lumen.

SlimySnake

Flashless at the Golden Globes

Cyberpunk has RTGI on PC. just not on consoles. the comparison gif i posted above cycles through path tracing, psycho rt (Which has the full GI light bounce pass that rt medium and ultra dont have) and standard rasterization. you can see that their lighting model is straight up broken even in psycho RT. Despite the fact that Psycho RT used to be THE most expensive rt setting in the game costing over 50% of framerate whenever you turned it on. Path tracing adds another 100% on top of that so now we have a game that needs 200% extra gpu power just to do proper light bounce.

same goes for that infamous telephone reflection screenshot Alex always used to show the amazing effect RT reflections can have in Control. Like come on dude, its clear that screenspace reflections in a simple tiny room wouldve handled those reflections just fine. Its just that remedy didnt even bother.

But like i said before, I am perfectly fine with RT Reflections. They have significant cost on the GPU but at least they provide a massive boost to fidelity compared to screenspace reflections which are also very expensive and look simply inaccurate.

Gaiff

SBI’s Resident Gaslighter

> Dude you know me too well to know how much ive stanned for rt reflections. thats literally the only thing i want implemented from the RT feature suite. though not in every game. medieval games set in the wilderness dont need ray tracing for the odd body of water you might encounter every now and then. I mean i was literally at my brother's house showing him ray traced before and after reflections in spiderman just yesterday. I literally play this game with RT reflections on and everything else off. RT reflections are NOT path tracing. Consoles can do rt reflections just fine. i can probably list over 20 games that do this.

Your other post was implying that the difference between rasterization and path tracing was imperceptible. You meant path tracing vs ray tracing? If yes, it's not too contentious for me. Path tracing is nicer but ray tracing is good enough.

> but Cyberpunk's failures with indirect lighting is not proof that path tracing has a giant, staggering and massive advantage over standard rasterization. thats on cd project's artists and their engine for not getting it right. Secondly, and most importantly, you dont NEED path tracing for better indirect lighting. you dont NEED to spend 4-5x more gpu resources to get it right. And thats my problem with richard's statement. he's acting like path tracing is the future when matrix fucking looks better and cd project themselves are switching to UE5. So path tracing will be a thing of the past even in cd project's games going forward.

Come on. Stop comparing a demo to an actual game. We've seen demos in the past that never materialized.

> You literally said that this applies to shacks and huts and then say my 95% claim is complete bullshit.

No, I said it applies to everywhere with a lot of indirect lighting during daytime which is quite frequent. You spend a lot of time indoors without direct sunlight.

> Here are other games, all of them non RT that handle better indirect lighting than cyberpunk.

A bunch of borderline corridor shooters. Great examples. We'll see if Ubisoft delivers with Avatar or if it's bullshit.

And if you're going to post 20 pictures, put them in spoiler tags. There are way too many of them in your post.

Bojji

Member

> Cyberpunk has RTGI on PC. just not on consoles. the comparison gif i posted above cycles through path tracing, psycho rt (Which has the full GI light bounce pass that rt medium and ultra dont have) and standard rasterization. you can see that their lighting model is straight up broken even in psycho RT. Despite the fact that Psycho RT used to be THE most expensive rt setting in the game costing over 50% of framerate whenever you turned it on. Path tracing adds another 100% on top of that so now we have a game that needs 200% extra gpu power just to do proper light bounce.
>
> same goes for that infamous telephone reflection screenshot Alex always used to show the amazing effect RT reflections can have in Control. Like come on dude, its clear that screenspace reflections in a simple tiny room wouldve handled those reflections just fine. Its just that remedy didnt even bother.
>
> But like i said before, I am perfectly fine with RT Reflections. They have significant cost on the GPU but at least they provide a massive boost to fidelity compared to screenspace reflections which are also very expensive and look simply inaccurate.

I think CP psycho RT lighting is not comparable at all to RTGI in Witcher or Metro. Something was wrong with it since day 1 just like you said. With RT GI and RT AO W3 has very good lighting, they could have implemented those in CP as alternatives to PT.

SSR would completely fall apart in that room in Control, I liked this technique in 2010 in Crysis 2 but it looks like shit to me now in most games. For Control only thing that could come close are planar reflections but those are super expensive in their own way and have to be carefully crafted while RT is automatic. H3 has both:

I enjoy watching Digital Foundry and believe they're generally a respectable source for tech news and analysis.

But there is no doubt whatsoever their impartiality has been compromised due to close links with MS. This was particularly the case pre-launch, DF had early access to Series X/S, but kept this info secret until closer to launch. They largely set the tone that Series X would be a significant jump over PS5 performance, which led to 12 months of toxic discussions on social media. They also pushed the idea Series S would be a like-for-like junior console where the only compromise would be resolution.

With time we now see PS5 delivers real world performance on par with Series X, while Series S has become a real headache for developers and Microsoft. Yet only recently have DF started to question the value proposition of Series S against discounted PS5 models. Then we have DF's stance on PS5 Pro which for all the world looks like an attempt to diminish its significance in light of MS not having a competitor.

Anyone who's followed AAA gaming and PC tech developments is well aware we are already seeing significant compromises with new titles. 60fps is now a console standard, many gamers will not accept a return to 30fps. Then we have RT performance which has been underwhelming to say the least. When it is implemented, it's a huge drag on performance, and often a pixelated, low quality mess. DF continue to underplay PS4 Pro's sales performance which accounted for 20%+ of PS4 sales, all at a higher RRP, to high value consumers who purchase many games & services.

DF's stance doesn't make a great deal of sense for a tech oriented channel. Not when they're still cheerleading Series S becoming the dominant Xbox console, with gamers increasingly using cheap subscriptions which undervalues AAA development.

Feel Like I'm On 42

Member

Well said! DF has no integrity as far as I'm concerned.

> I predict that PS5 Pro will be close in power with the Main Next Gen Xbox & might actually have more GPU TFLOPS but games will look better on the next gen console

Are you assuming Microsoft will pull a 360 and the PS6 will be like the PS3 in terms of release date?

> One X was superior in practically everything compared to PS4 Pro, and still had worse versions, or versions that came out with graphic problems.

Even PS4 had a few examples against vanilla XB1 that weren't favorable. And that also had the excuse of 'better support and tools' on its side in addition to overwhelming hw advantage in every aspect.

Mobilemofo

Member

I'm coining a new term.. GigaPricks. For those to whom only clock speed matters. You know who you are.

PaintTinJr

Member

> It literally has a 22% better pixel fillrate than the XSX. What are you playing at? The XOS and XSS have a better pixel fillrate than the PS5, are you that dense?

It is also worth considering that Triangle culling differences, assuming we are talking about pre-transformed triangles when triangles rendering is a bottleneck, will frequently sub the same as more CU Teraflops capability (in context) than the 36 specified because for every triangle culled from redundantly doing work on those 36, the other device is having to waste their CU capability to do 21% more, because the general purpose CPU culling systems will be equal, assuming no hardware accelerated kit-bashing is being done.

The non-culled triangles also occupy resources/cache after their vertices and normals are transformed, probably not the texture coordinates, before eventually being culled late before fragmentation, which has to hold them until all three are out with the view frustum, so the efficiency loss is more than just in the geometry stages AFAIK.

The triangle rasterization capability difference in the table is partly double counting, because it is a direct result of the higher fill-rate. However, it is probably more critical than its 22% suggests, because fill-rate is measured in Giga units while flops are measured in Tera, so fill-rate is a much smaller finite resource and is likely to disproportionately impact game performance when it is the bottleneck. It is typically a ratio of one triangle to many, many filled pixels, even when ignoring that world-space transformed geometry can be reused between the camera and shadow map light sources (each acting as a camera position), which saves on flops but generates another order of magnitude more pixel fragments per triangle.

Even nanite's ability to produce tiny pixel-sized micro polygons from megascans outputs more than a single fragment per polygon: most likely 7 at a minimum, but more likely 4x 7 for a dithered/anti-aliased polygon. That assumes a micro polygon needs 3 fragments per triangle edge and 1 in the middle as a filled triangle, so 3 shared corner fragments, 3 sides with 1 fragment between the corners, and 1 middle fragment to fill the triangle. On that basis 28 pixel fills per micro polygon sounds in the ballpark. Even a 20:1 best case, using a resource that is 50-100x smaller than the flops on these consoles, should illustrate how easy it is, between shadow map generation and complex shader fx at high framerates, to bottleneck on that resource quickly, and in a way that is less dependent on final render resolution than we might think. Even a 20% drop in resolution doesn't drop the fill-rate workload by 20% in each render pass or shader call; it probably reduces fill-rate by less than a third of that (about 7%) for those frames. A dropped frame-rate, by contrast, wipes out all the complex shading in those frames and may even miss a full shadow pass update entirely, which typically happens at a much lower rate than the frame rate, making an even bigger saving than just the frame-rate drop x the duration it dropped.

For the above table values we also have the lack of context that the Series consoles block BVH use while texture sampling, so the RT and texturing numbers for comparison to PS5 are either or, not both at the same time, and the comparison table can't factor-in the effect of the cache-scrubbing efficiency on the PS5, so all we can say is that the Series X isn't more powerful from the results, which lines up with all the caveats of the spec differences.
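For anyone who wants to check the percentages being thrown around, here is a rough sketch of the arithmetic. The CU counts, ROP counts and clocks are the publicly quoted figures and are assumptions of this sketch, not numbers taken from the table; the 64 lanes per CU and 2 FLOPs per lane per clock are the usual way peak FP32 teraflops are derived. The fragment count just restates the 7 and 4x 7 reasoning from the post.

```python
# Publicly quoted GPU specs; treat these as assumptions of the sketch.
SPECS = {
    "PS5": {"cus": 36, "rops": 64, "clock_ghz": 2.23},
    "XSX": {"cus": 52, "rops": 64, "clock_ghz": 1.825},
}


def pixel_fillrate_gpix(spec):
    """Peak pixel fill-rate in Gpixels/s: one pixel per ROP per clock."""
    return spec["rops"] * spec["clock_ghz"]


def fp32_tflops(spec):
    """Peak FP32 teraflops: 64 lanes per CU, 2 FLOPs per lane per clock."""
    return spec["cus"] * 64 * 2 * spec["clock_ghz"] / 1000.0


def fragments_per_micropolygon(samples=4):
    """3 corner + 3 edge + 1 centre fragments, times the sample count."""
    corners, edges, centre = 3, 3, 1
    return (corners + edges + centre) * samples


def flops_per_pixel_fill(spec):
    """How many peak FLOPs exist per peak pixel fill (the Tera vs Giga gap)."""
    return fp32_tflops(spec) * 1000.0 / pixel_fillrate_gpix(spec)


if __name__ == "__main__":
    for name, spec in SPECS.items():
        print(name, pixel_fillrate_gpix(spec), fp32_tflops(spec),
              flops_per_pixel_fill(spec))
    print("fragments per micro polygon:", fragments_per_micropolygon())
```

With those inputs the PS5 comes out roughly 22% ahead on peak fill-rate, the XSX roughly 18% ahead on peak teraflops, and the flops-to-fill ratio lands at 72x and 104x, which is in the same ballpark as the 50-100x mentioned above. These are peak paper numbers only and say nothing about real workloads.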


ChiefDada

Member

I'm not a dev and don't work on consoles, we'd need multiple devs to chime in and let us know why, DF don't know any more than we do.

I feel like they've already spoken on many occasions:

Crytek Developer said Series X memory setup was a severe bottleneck that mitigated teraflop difference and PS5 hardware setup was preferable

Invader Studios says PS5 has a distinct streaming advantage.

Shin'en/The Touryst developer says their game was able to reach native 8k because of PS5 gpu clock speed and memory setup

Ascendant Studios/Immortals of Aveum developer said PS5 has more available memory, which allowed them to bump up certain presets.

We keep hearing the same story...

Vergil1992

Member

What the supposed developer of Immortals of Aveum says seemed very strange to me. Nobody had ever said that PS5 had more available memory, and in fact, it is something that would have shown differences. Anyway, back to the same thing: PS5 and XSX have the same amount of memory. The only way for the PS5 to have more is for the Xbox Series OS to have a higher memory consumption. That would not be a hardware problem.

The only time Immortals of Aveum had better graphical settings, if memory serves, was in the game menu, where XSX scored about 15? fps, and that was because it had SSR disabled and something else (ambient occlusion?). But the truth is that the developer was quite funny. It's a game that shows a solid advantage of XSX over PS5 (5-10fps in times of stress) and graphically they looked quite similar, only on PS5 it had some sharpening filter.

The funny thing is that it seems that Cyberpunk on XSX is the "losing" version when it has the same graphical settings and a higher resolution because it loses a few frames more frequently than on PS5, but then in Immortals of Aveum it was just the opposite. It had the same visual quality and resolution, and the Xbox Series X has a solid 5-10fps lead. But since PS5 has a sharpening filter it seems that the narrative was that the framerate didn't matter. Very curious.

Anyway, I agree that developers tend to have a preference for PS5 and its design. At least that is what can be deduced from the statements of some developers, especially indies. But here we must make a point: what developers like is ease. If you have a machine that you can use more easily and more efficiently, and another that is less so, the developer will not care that among a huge range of resolutions one can put 15-18% more pixels on screen. These are people who are working, not competing in a console war. If the PS5 API/design/development environment is friendlier to them, they're going to prefer it, regardless of whether at the end of the day XSX is a little more powerful.


Vergil1992

Member

"Honestly anybody thinks devs give a fuck about optimising for 20% more performance advantages in "some aspects". They will just focus on base spec for both consoles adjust settings and slam out their product.

"These boxes are practically identical."

Exactly! That's what I mean. Even if XSX is slightly more powerful (let's say it's a guess, I don't want to argue with anyone lol), the developers are going to care little if it can run at 1200p and on PS5 at 1100p (just to give an example).

Developers are people like us working. They want their jobs to be easy, just like (I suppose) any of us. They don't care about the console war, the forums, or that a console has a slightly higher internal resolution (within a cluster of temporal reconstructions, TAA, dynamic resolution, rescaling like FSR...), they won't care at all.

But if PS5 really has friendlier tools and a more efficient design... then it will be the preferred console. Although it could also be the preferred one because it will generate more $$$. We can't know.
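To put a number on how small a gap like 1200p vs 1100p is, here is a quick sketch. It assumes a 16:9 frame and rounds the width to whole pixels; both are assumptions for illustration, since real games use all sorts of dynamic resolutions.

```python
def pixel_count(height, aspect=16 / 9):
    """Total pixels for a frame of the given height (16:9 assumed)."""
    width = round(height * aspect)
    return width * height


def extra_pixels_pct(high, low):
    """Percentage of extra pixels rendered at `high` compared with `low`."""
    return (pixel_count(high) / pixel_count(low) - 1.0) * 100.0


if __name__ == "__main__":
    print(round(extra_pixels_pct(1200, 1100), 1), "% more pixels")
```

That works out to roughly 19% more pixels, close to the 15-18% range usually quoted, and it all gets pushed through TAA, reconstruction and upscaling afterwards anyway.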

The PS5 doesn't have asymmetric RAM like the Series consoles, and there's a possibility of flexible RAM like on the PS4 Pro. Also, the Series X has 10 GB of gaming-optimal RAM before there's a penalty when going over that for CPU tasks.

Kenpachii

Member


Like honestly who gives a fuck about 20% performance increase. I can't even enable pathtracing on PC in cyberpunk without a 100% performance increase. What am i going to get better from a 20% performance increase? my 30 fps game is now 36 fps, WOW amazing. Or that 1 shadow setting can now be upgrade from medium to a bit more medium, insane.

Let me call up df so they can 400% zoom in on a shadow in the distance i will never see in my life to see if its less blocky and call it a clear win.

Honestly 20% means nothing.

I upgraded my "PC solution" a month ago. From a 3080 desktop to a 4080 laptop, performance is identical, but the resolution got cut in 2 so 2x more performance there + frame gen that's another 100% added. Now i can play pathtracing cyberpunk instead of 30's at 90's. Now that's a difference.
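For what a 20% uplift actually buys you, here is the arithmetic as a small sketch. It assumes performance scales linearly into frame rate, which is the most generous case, and the function names are made up for illustration.

```python
def scaled_fps(fps, gain_pct):
    """Frame rate after a performance gain, assuming perfect linear scaling."""
    return fps * (1.0 + gain_pct / 100.0)


def frame_time_ms(fps):
    """Time budget per frame in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    base = 30.0
    for gain in (20.0, 100.0):
        new = scaled_fps(base, gain)
        saved = frame_time_ms(base) - frame_time_ms(new)
        print(f"+{gain:.0f}%: {base:.0f} -> {new:.0f} fps, "
              f"{saved:.1f} ms saved per frame")
```

So 20% takes a 30 fps game to 36 fps and frees up about 5.6 ms per frame, while a 100% gain takes it to 60 fps and frees up about 16.7 ms.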

Vergil1992

Member

"The PS5 doesn't have asynchronous ram like the series consoles and there's a possibility of flexible ram like the PS4 pro. Also,the series X has 10gs of gaming optimal amount of RAM before there's a penalty when going over that for CPU tasks."

I have read the penalty argument (memory contention perhaps?) several times, but I would like to understand it. What difference is there from the penalty that PS5 has? Both have a single memory pool that the CPU and GPU access, so the bandwidth penalty when CPU and GPU access it simultaneously is inevitable. The only thing that could penalize performance is that if you need more than 10GB on XSX for the GPU you would have to deal with slower bandwidth as well, but it is not very slow compared to the bandwidth of PS5, and those 6GB are probably mostly taken up by CPU/OS usage anyway. The truth is that I am not very clear about the reason for MS's decision. It does not seem to be about reducing costs, since a configuration like that surely demands more from R&D, but for the developers I do not think it is a very smart option. Still, the memory contention issue should be on both platforms. This problem is generated when CPU and GPU simultaneously access a single memory pool. PS4, for example, also had this problem and did not have memory with different bandwidths. I do not pretend to be absolutely right and if someone disagrees I will be happy to debate, but it does not seem logical to me.

What I forgot to say is that the developer (supposed?) I think said that the XSS/XSX OS took up more memory and that's why they had more available on PS5. But it still seems very strange to me, it is something that would have been very noticeable in multiplatform games.
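To make the split-pool question a bit more concrete, here is a very simplified sketch. The bandwidth figures are the publicly quoted ones (560 GB/s for the 10 GB pool and 336 GB/s for the other 6 GB on XSX, 448 GB/s for the single PS5 pool) and are assumptions here. The model just averages transfer time over the two pools and deliberately ignores CPU/GPU contention, which affects both consoles and is the part nobody outside the dev kits can really measure.

```python
# Publicly quoted peak bandwidths in GB/s; assumptions of this sketch.
XSX_FAST_GBPS = 560.0  # 10 GB pool
XSX_SLOW_GBPS = 336.0  # remaining 6 GB
PS5_GBPS = 448.0       # single 16 GB pool


def xsx_effective_gbps(slow_fraction):
    """Average bandwidth when `slow_fraction` of the bytes come from the slow pool.

    Time per GB is the traffic-weighted sum of the two pools' time per GB,
    so the result is a weighted harmonic mean. Contention is not modelled.
    """
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be between 0 and 1")
    time_per_gb = ((1.0 - slow_fraction) / XSX_FAST_GBPS
                   + slow_fraction / XSX_SLOW_GBPS)
    return 1.0 / time_per_gb


def break_even_slow_fraction(target_gbps=PS5_GBPS):
    """Share of slow-pool traffic at which XSX averages `target_gbps`."""
    fast_t = 1.0 / XSX_FAST_GBPS
    slow_t = 1.0 / XSX_SLOW_GBPS
    return (1.0 / target_gbps - fast_t) / (slow_t - fast_t)


if __name__ == "__main__":
    for share in (0.0, 0.1, 0.25, 0.5, 1.0):
        print(share, round(xsx_effective_gbps(share), 1))
    print("break-even share:", break_even_slow_fraction())
```

Under this toy model the XSX average only falls to the PS5 figure once 37.5% of the traffic is hitting the slow pool, which fits the point that the slow pool alone doesn't obviously explain the results. Whether real games get anywhere near that share is something only developers could say.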

Neo_game

Member

I predict that PS5 Pro will be close in power with the Main Next Gen Xbox & might actually have more GPU TFLOPS but games will look better on the next gen console

Next gen SS could have same gfx performance as SX. Assuming SX is their Pro version of this gen

"Are you assuming Microsoft will pull a 360 and the PS6 will be like the PS3 in terms of release date?"

Yes & I'll take it a step further & say Xbox will not be going up against PlayStation in a Console War but will be facing Apple & Google in a Platform War.