Dolomite

Member

Fair enough, we've gotten pretty detailed. My bad. I'm done with the theories here anyway; let the rest enjoy their VRS talk.


I assume you're mod verified. If so, that makes you the genuine article as a game developer with access to these devkits (as you claim).

This is a fascinating write-up. If you don't mind my asking, when you say that the PS5 is 22 GB/s effective, do you mean that this isn't a pipe-dream figure but something that developers can meaningfully achieve? Is this something that your team, for instance, is seeing right now in development?

I know you're bound by NDAs out the wazoo; if you tell me you can't talk about it, I get it.

It's only doable with data that compresses particularly well at that rate, such as some audio and video data, where lossy compression doesn't really affect the quality that much.

By that metric, however, MS could've touted a higher "particularly well-compressed" figure if they wanted (I mean besides the 6 GB/s figure), but again, if it's mainly towards particular audio or video file types, then it is what it is :/
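A rough way to read "effective" SSD figures: the uncompressed rate delivered to RAM is the raw read rate times the compression ratio the data actually achieves, capped by what the decompressor can output. The sketch below is a minimal model under that assumption, using the publicly quoted raw rates (5.5 GB/s for PS5, 2.4 GB/s for XSX) and the peak figures discussed above (22 GB/s and 6 GB/s); the function names and the 1.5:1 example ratio are hypothetical, and real ratios vary per asset.

```python
from typing import Optional

# Publicly quoted raw SSD read rates (GB/s) and the peak figures discussed in the thread.
PS5_RAW_GBPS = 5.5
PS5_PEAK_GBPS = 22.0
XSX_RAW_GBPS = 2.4
XSX_PEAK_GBPS = 6.0


def effective_throughput(raw_gbps: float, ratio: float,
                         decoder_cap_gbps: Optional[float] = None) -> float:
    """Uncompressed GB/s delivered to RAM for data compressing at `ratio`:1."""
    if raw_gbps <= 0 or ratio < 1:
        raise ValueError("raw rate must be positive and ratio at least 1:1")
    out = raw_gbps * ratio
    # The decompressor (hardware block or software) can become the bottleneck.
    if decoder_cap_gbps is not None:
        out = min(out, decoder_cap_gbps)
    return out


def implied_ratio(raw_gbps: float, peak_gbps: float) -> float:
    """Compression ratio the data would need for the raw rate to reach the peak."""
    return peak_gbps / raw_gbps


if __name__ == "__main__":
    print(f"PS5 peak needs {implied_ratio(PS5_RAW_GBPS, PS5_PEAK_GBPS):.1f}:1")
    print(f"XSX peak needs {implied_ratio(XSX_RAW_GBPS, XSX_PEAK_GBPS):.1f}:1")
    # A hypothetical 1.5:1 average ratio on PS5:
    print(effective_throughput(PS5_RAW_GBPS, 1.5))
```

Under this model, hitting 22 GB/s means data compressing at about 4:1, which is consistent with the point that only data that compresses particularly well gets there.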

Since when are Epic making Ratchet and Clank?

Knowing that there were professionals who questioned Tim Sweeney (and had to retract publicly), I don't know why it's strange that my words are questioned. But you know, I don't care. You didn't know anything when you wrote your message and you won't know anything when you finish reading mine. Ignored. Next?

Yeah, you asked an honest question. Fanboys are just toxic to the community. I'd love a Digital Foundry breakdown of both to put the speculation to rest.

Since when are Epic making Ratchet and Clank?

Don't move the goalposts.

You stated as a fact that the XSX couldn't do what was shown in Ratchet, yet you have never laid eyes on an XSX, let alone worked on one. When I asked you how much data was used in the screen swap, you said you had no idea. It was a fair question: we know the size of the XSX RAM and the speed of its SSD, so we could get an idea of what amount of data is required. We know the XSX has higher RAM bandwidth, so it can feed the GPU quicker than the PS5 can to put the image on screen, so I want to know exactly why it can't be done on XSX.

You threw out 22 GB/s as the PS5 SSD speed, when that isn't the case. Why you would do that, I don't know.

I'm pretty respectful. I haven't called you names; I've asked you to back up what you stated with actual facts, like the data requirements for the screen swap.

And don't get me wrong, the PS5 SSD can absolutely feed the RAM quicker than the XSX's can; that's not in debate. The question is whether that's the limiting factor.

You have totally ignored RAM and Bandwidth.
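The data-requirements question can be framed as arithmetic: divide the payload a screen swap needs by the drive's effective rate and compare it with the time the transition allows. Nobody here knows the actual payload, so the payload sizes and the 1-second budget below are hypothetical; the 2.4 and 4.8 GB/s rates are Microsoft's published raw and compressed figures, and 13.5 GB is Microsoft's stated game-available memory on XSX. This is only a sketch and ignores latency, decompression limits and how much data is already resident in RAM.

```python
# Hypothetical sketch: could a given payload be streamed into XSX RAM in time?
XSX_GAME_RAM_GB = 13.5  # Microsoft's stated game-available memory on XSX

XSX_RATES_GBPS = {
    "raw": 2.4,                   # Microsoft's published raw SSD rate
    "compressed (typical)": 4.8,  # Microsoft's published compressed rate
}


def stream_time_ms(payload_gb: float, rate_gbps: float) -> float:
    """Milliseconds to move `payload_gb` from SSD to RAM at `rate_gbps`."""
    return payload_gb / rate_gbps * 1000.0


def fits(payload_gb: float, rate_gbps: float, budget_ms: float,
         ram_gb: float = XSX_GAME_RAM_GB) -> bool:
    """True if the payload fits in game RAM and streams within the time budget."""
    return payload_gb <= ram_gb and stream_time_ms(payload_gb, rate_gbps) <= budget_ms


if __name__ == "__main__":
    budget_ms = 1000.0  # hypothetical transition length
    for payload_gb in (1.0, 4.0, 8.0):  # hypothetical payload sizes
        for label, rate in XSX_RATES_GBPS.items():
            t = stream_time_ms(payload_gb, rate)
            ok = fits(payload_gb, rate, budget_ms)
            print(f"{payload_gb:4.1f} GB at {label:<21} {t:7.1f} ms  fits={ok}")
```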

Sorry, I did read it, but I didn't get the sarcasm in it. I have only seen that said about the PS5, but if you saw anyone saying it about the XBSX, the same thing applies.

Lol what? Did you actually read what I typed? Or did you go into auto-defense mode?

Wow, what is that up there? I thought you posted this (keep the goalpost where you first had it, plz):

RTX 2080 Ti - 13.4 TF

RTX 2080 - 10.1 TF and VRAM limited

So at 30% more TF, do you see 30% more performance at 4K?

What you are implying here is that the GPUs will perform the same.

PS5 is faster, be worried. Crap vs crap.

The point being made is: one GPU has ~20% more TF and is wider, the other has ~20% faster clocks; one has a bigger API abstraction, the other a more direct API; both use the same-speed 14 Gbps RAM chips, but one is wider for GPU access and narrower for CPU and audio access. One has 100% faster I/O.
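For reference, the paper numbers being argued over come from two simple formulas: FP32 TFLOPS = CUs × 64 shaders × 2 FLOPs per clock (fused multiply-add) × clock, and peak bandwidth = bus width × per-pin data rate ÷ 8. A minimal sketch using the public specs (XSX: 52 CUs at 1.825 GHz on a 320-bit bus; PS5: 36 CUs at up to 2.23 GHz on a 256-bit bus; both with 14 Gbps GDDR6); the helper names are just for illustration.

```python
# Paper-spec FP32 throughput and GDDR6 bandwidth from public console specs.
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2  # one fused multiply-add counts as 2 FLOPs


def tflops(compute_units: int, clock_ghz: float) -> float:
    """FP32 TFLOPS = CUs x 64 shaders x 2 FLOPs x clock (GHz) / 1000."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000.0


def bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gb/s) / 8."""
    return bus_width_bits * gbps_per_pin / 8


if __name__ == "__main__":
    xsx_tf = tflops(52, 1.825)  # fixed clock
    ps5_tf = tflops(36, 2.23)   # peak of a variable clock
    print(f"XSX {xsx_tf:.2f} TF, PS5 {ps5_tf:.2f} TF, ratio {xsx_tf / ps5_tf:.2f}")
    print(f"clock ratio {2.23 / 1.825:.2f}")
    # Same 14 Gbps chips, different bus widths.
    print(bandwidth_gbs(320, 14), bandwidth_gbs(256, 14))
```

Paper numbers like these say nothing about API overhead, caches, or how often the XSX's slower 6 GB region (336 GB/s) gets used, so real-world deltas can differ from these ratios.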

And so far most third parties have said they are the same resolution (Destiny, etc.).

But keep telling that to yourself.

Compression is a compromise; you're trading space for something.

Optimization doesn't always mean making compromises the player cares about. Spider-Man optimized its compression with no drawbacks.


There is a ton we don't know about, which is kinda my point.

Seemed like it used a cutscene instead.

I would say yes, jokes are necessary.

Again, until someone can come out and say how much data needs to be streamed from the SSD into RAM to change the screen, and show that the XSX won't be able to meet that requirement, it's all just guessing and fanning.

Only it loads significantly fewer assets or less complex assets.

There isn’t really a simple equivalence there.

There is a ton we don't know about, which is kinda my point.

People who really have no idea are saying what could and couldn't be done.

This isn't going to play out for a while.

I thought R&C looked stunning. But it's Insomniac, now with Sony's backing and access to all of their other studios' IP, so without doubt we knew they would put out an amazing-looking game.

Well, that's what it looks like to me in that trailer. I don't have a doubt that if it's on the same level as what we've seen in R&C, we will see it in the future.

Right now it's just a waiting game.

I don't know much about him.

You guys realize you're wasting your time with an obviously biased PSVR dev, right?

He knows nothing beyond the VR stuff and has no XSX devkit. And let's not forget he's one of the "insiders" telling people the PS5 was in "puberty", which then led to the 13.3 TF BS.

Pretty much just another OsirisBlack.

I think MS's next statement on this will be centered around Sampler Feedback and DirectML: only loading what is needed and letting machine learning fill in the gaps.

The access time of the current Phison controller is about 25 microseconds or so, if anyone can be arsed to read the spec sheet of each model; however, that is just the access time to find where the data is, not to transfer it.

Instantly accessible is a play on words, does not mean instantly loads.
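To illustrate the access-time vs transfer-time distinction: a read costs roughly the time to locate the data plus its size divided by bandwidth. The sketch below assumes the ~25 µs access figure quoted above (a rough number, not checked against a spec sheet) and a hypothetical 5.5 GB/s rate with a single outstanding request; real NVMe drives keep many requests in flight, which hides much of the latency.

```python
def read_time_us(size_bytes: int, access_us: float, bandwidth_gbps: float) -> float:
    """Access latency plus transfer time, using decimal GB as drive specs do."""
    transfer_us = size_bytes / (bandwidth_gbps * 1e9) * 1e6
    return access_us + transfer_us


ACCESS_US = 25.0  # rough access time quoted in the thread (assumption)
RATE_GBPS = 5.5   # hypothetical sustained read rate

if __name__ == "__main__":
    # Small reads are dominated by access time, large reads by bandwidth.
    for size in (4 * 1024, 64 * 1024, 1024 ** 2, 256 * 1024 ** 2):
        total = read_time_us(size, ACCESS_US, RATE_GBPS)
        print(f"{size:>12,} bytes: {total:10.1f} us (access is {ACCESS_US / total:.0%} of it)")
```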

Do you have to allocate processing time and/or latency to decompress? The answer is yes.

No, sometimes doing compression in a certain way is just better in every way and has no drawbacks.

I think MS's next statement on this will be centered around Sampler Feedback and DirectML: only loading what is needed and letting machine learning fill in the gaps.

That might be their only possible move.

A good move, though.

Do you have to allocate processing time and/or latency to decompress? The answer is yes.

There will always be drawbacks, whether you have to allocate silicon to it in the hardware or use CPU cycles.

There is no free compression method. The benefits may outweigh the cost,

but it's going to cost you some combination of image/data quality, processing cycles, and latency somewhere to decompress.

Now tell me about the Spider-Man no-compromise compression method.
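Both sides of this exchange can be shown with a toy load-time model, assuming a hypothetical codec described only by its compression ratio and decode rate. Read time shrinks with the ratio, but decoding costs time too: sequential decoding on the CPU adds to the read, while a dedicated decoder can overlap with it. All the numbers below are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Codec:
    name: str
    ratio: float        # uncompressed size / compressed size
    decode_gbps: float  # uncompressed GB the decoder can output per second


def load_time_s(raw_gb: float, read_gbps: float,
                codec: Optional[Codec] = None, pipelined: bool = True) -> float:
    """Seconds to get `raw_gb` of usable data into memory.

    pipelined=True models a dedicated decoder working alongside the reads;
    pipelined=False models reading everything first, then decoding on the CPU.
    """
    if codec is None:
        return raw_gb / read_gbps
    read_s = (raw_gb / codec.ratio) / read_gbps
    decode_s = raw_gb / codec.decode_gbps
    return max(read_s, decode_s) if pipelined else read_s + decode_s


# Hypothetical codecs: B is both smaller and faster to decode than A.
A = Codec("old format", ratio=2.0, decode_gbps=3.0)
B = Codec("reworked format", ratio=2.5, decode_gbps=5.0)

if __name__ == "__main__":
    for codec in (None, A, B):
        label = codec.name if codec else "uncompressed"
        print(label,
              round(load_time_s(10.0, 2.0, codec), 3),
              round(load_time_s(10.0, 2.0, codec, pipelined=False), 3))
```

With these numbers, the old format decoded sequentially (about 5.83 s) is slower than not compressing at all (5.0 s), while the reworked format beats the old one on both size and speed, which is the "better in every way" case; decoding is still work that has to be paid for somewhere.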

I don't know much about him.

I did know about the wrong PS5 specs, but I put that down to old GCN dev kits, which apparently were around 13 TFLOPS.

You need the hardware to perform it if you want the benefits of hardware acceleration. Otherwise you implement your own solution in software, which is less efficient, but can be technically done.

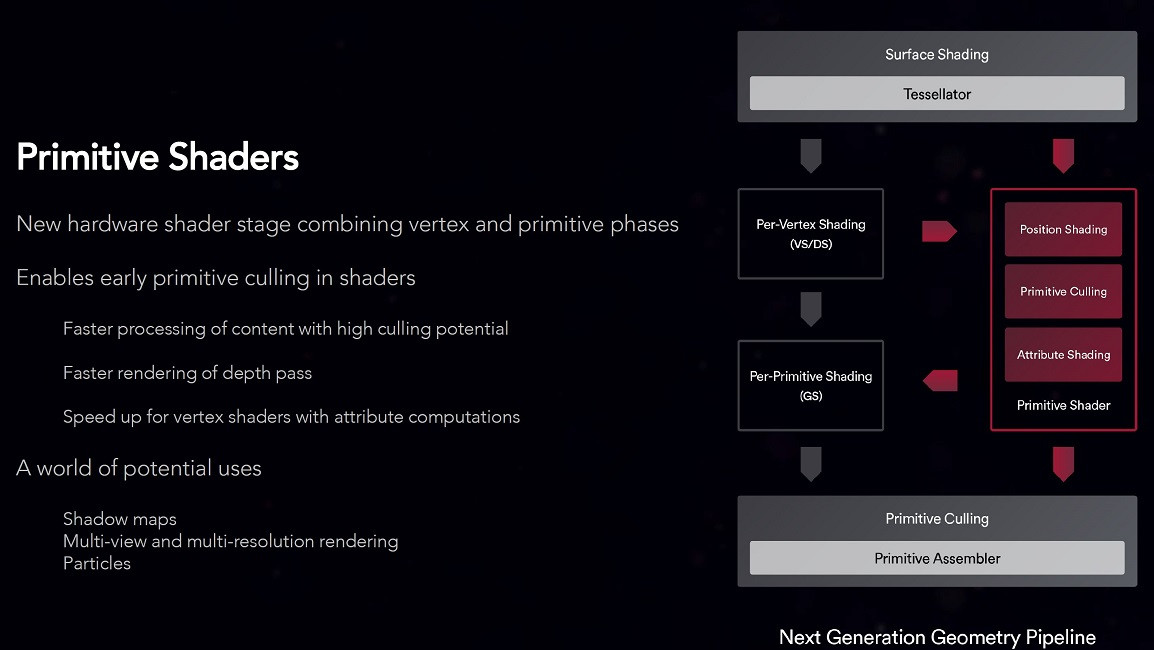

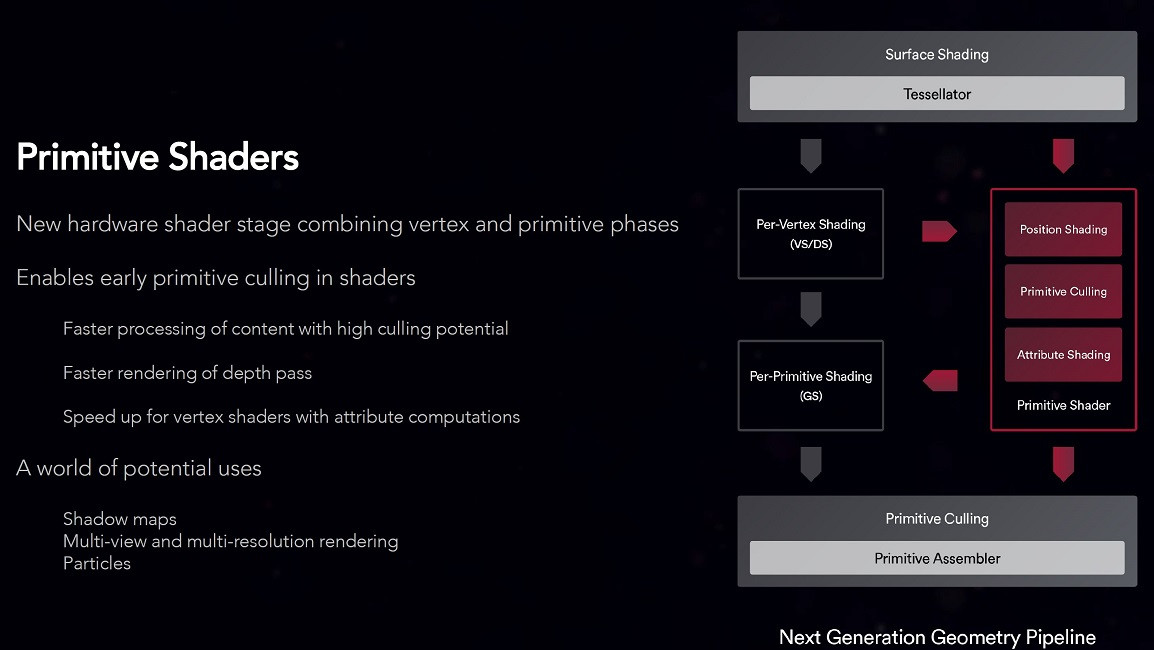

IIRC among AMD GPUs RDNA1 does not support VRS, just RDNA2 and onward. If Sony has an equivalent to VRS, they are implementing it differently, maybe with some customizations to the GE and PSes. And it would not be called VRS as that particular term is patented by Microsoft.

Some people mentioned MS and Intel's patents referencing Sony's, but didn't keep in mind that Sony's was for foveated rendering in application with the PSVR. That and VRS are similar in some aspects but operate and are applied differently. You can have two technologies with similar base DNA but very different implementations and functionality in practice, just look at PCM technologies like 3D Xpoint and ReRAM. It's nothing new.
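As a rough illustration of what variable rate shading trades off: the screen is split into tiles, and each tile is shaded at a rate where one pixel-shader invocation covers a 1×1, 1×2, 2×1 or 2×2 block of pixels. The sketch below only counts invocations; the 16-pixel tile size and the "top of the screen at 2×2" policy are hypothetical, and it isn't any vendor's or console's actual implementation.

```python
import math
from typing import Callable, Dict, Tuple

# Coarse shading rates: one pixel-shader invocation covers an (x, y) block of pixels.
RATES: Dict[str, Tuple[int, int]] = {
    "1x1": (1, 1), "1x2": (1, 2), "2x1": (2, 1), "2x2": (2, 2),
}


def shading_invocations(width: int, height: int, tile: int,
                        rate_for_tile: Callable[[int, int], str]) -> int:
    """Count pixel-shader invocations for a screen split into square tiles,
    each shaded at the rate returned by `rate_for_tile(tile_x, tile_y)`."""
    total = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            w, h = min(tile, width - tx), min(tile, height - ty)  # edge tiles may be partial
            rx, ry = RATES[rate_for_tile(tx // tile, ty // tile)]
            total += math.ceil(w / rx) * math.ceil(h / ry)
    return total


if __name__ == "__main__":
    full = shading_invocations(1920, 1080, 16, lambda x, y: "1x1")
    # Hypothetical policy: shade roughly the top third of the screen at 2x2.
    mixed = shading_invocations(1920, 1080, 16, lambda x, y: "2x2" if y < 23 else "1x1")
    print(full, mixed, f"{mixed / full:.2f}")
```

Fewer invocations don't translate one-to-one into frame time, since shading is only part of the frame, and coarser rates cost image quality.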

The 100 GB bit, some of us have speculated, might be in regards to the GPU addressing a partition of data on the drive as extended RAM (it sees it more or less as RAM) through GPU modifications built off of pre-existing features of the XBO such as executeIndirect (which only a couple of Nvidia cards have support for in hardware). GPUDirectStorage, as nVidia terms it, already allows GPUs in non-hUMA setups to access data from storage into the VRAM. It's particularly useful for GPUs in that type of setup, but since these are hUMA systems that on the surface wouldn't seem necessary.

But...what if there's more to that virtual pool partition on XSX than meets the eye? We know the OS is managing the virtual partitions of the two RAM pools on the system, is it possible in some case that the GPU can access the 4x 1 GB RAM modules while the CPU accesses the lower-bound 1 GB of the 6x 2 GB modules? We don't know if this is the case or not, but if the OS can virtualize a split pool for optimizing the bus access of the GPU and CPU in handling contention issues, it might also theoretically be able to implement a mode, even if just in specific usage cases, to virtualize the pool as a 4x 1 GB chunk to the GPU and 6x 1 GB chunk to the CPU that can have them work simultaneously on the bus in those instances.

The tradeoff there would be that collectively only 10 GB of system memory is being accessed, but the OS could then just re-virtualize the normal pool partition logic as needed, with the usual timing penalties factoring in. Those wouldn't necessarily be massive whatsoever; if Sony can supposedly figure out a way of automating the power load adjustment in their variable frequency setup to 2 ms or less, I don't see why MS would be unable to do what's proposed here in an even smaller time range.

Anyway, the 100 GB being "instantly available" was never a reference to the speed of access but maybe something in regards to the scenario I've just described; even if the data is going to RAM, and the RAM it can go to is cut down to 4 GB physical with this method (if it would need to go to more RAM than that and/or need a parallel rate of data transfer greater than 224 GB/s, it'd have to re-virtualize the normal memory pool logic), at the very least the GPU can still transfer data while the CPU has access to data in the 6 GB pool on the rest of the bus, simultaneously.
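The bandwidth figures in this theory follow from per-chip arithmetic: each GDDR6 chip has a 32-bit interface, so at 14 Gbps it delivers 56 GB/s, and a partition's bandwidth is 56 GB/s times the number of chips it is striped across. The sketch below shows Microsoft's documented 10 GB / 6 GB split next to the speculative 4 GB + 6 GB split described above; the speculative layout is the poster's hypothesis, not a confirmed feature, and the class name is illustrative.

```python
from dataclasses import dataclass
from typing import List

GBPS_PER_PIN = 14   # GDDR6 data rate used by XSX
BITS_PER_CHIP = 32  # each GDDR6 chip has a 32-bit interface


@dataclass(frozen=True)
class Partition:
    name: str
    chips: int        # number of chips the partition is striped across
    gb_per_chip: int  # capacity taken from each of those chips

    @property
    def capacity_gb(self) -> int:
        return self.chips * self.gb_per_chip

    @property
    def bandwidth_gbs(self) -> float:
        return self.chips * BITS_PER_CHIP * GBPS_PER_PIN / 8


# Documented XSX layout: 6x 2 GB + 4x 1 GB chips on a 320-bit bus.
documented: List[Partition] = [
    Partition("GPU-optimal (first 1 GB of all 10 chips)", 10, 1),
    Partition("standard (upper 1 GB of the 6 larger chips)", 6, 1),
]
# Speculative split from the post above (NOT confirmed by Microsoft).
speculative: List[Partition] = [
    Partition("GPU on the 4x 1 GB chips", 4, 1),
    Partition("CPU on the lower 1 GB of the 6x 2 GB chips", 6, 1),
]

if __name__ == "__main__":
    for p in documented + speculative:
        print(f"{p.name}: {p.capacity_gb} GB @ {p.bandwidth_gbs:.0f} GB/s")
```

Because the two speculative partitions sit on disjoint sets of chips, they could in principle be served at the same time (224 + 336 = 560 GB/s over 10 GB total), which is the trade-off the post describes.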

Again, though, it'd depend on what customizations they've done with the GPU here and also to what extent the governing logic in the OS and kernel for virtualizing the memory pool partitions operates. But it certainly seems like a potential capability and a logical extension of the GPUDirectStorage features already present in nVidia GPUs, as well as things like AMD's SSG card line (it works very similarly, I would assume, i.e. drawing data directly from the 2 TB of NAND and transferring it to the GPU's onboard HBM2 VRAM, rather than needing the CPU to draw the data from storage, dump it in system RAM, and then have the GPU shadow-copy those assets to VRAM, as many older-generation CPU/GPU setups on PC operate). I'm gonna do a little more thinking on this because there might be some plausibility in it being what MS has done with their system setup, IMHO.

That should be the reason we discussed XSX and PS5 memory setups being too slow compared with what PCs have...

Considering the XSX has a far quicker memory setup than any PC does, I would say that's not a problem.

The drawback is processing, then: you pay for the dedicated silicon used to unpack the data (you save on storage, so it's probably worth it).

No, sometimes doing compression in a certain way is just better in every way and has no drawbacks.

Either that or a very bad LOD issue.

Isn't this VRS? (I may be wrong, this may have been posted before... just wondering, there are definitely effects running at lower resolution in the GT trailer)

Actually the bias started way before that.

DF used to be perfectly fine and THE source for comparisons between PS4 and Xbox One, very professional, la crème de la crème, but something happened in 2017, something that would change Digital Foundry's credibility completely through the present day. Can anyone guess what happened?

I remember too.

Actually the bias started way before that.

At PS4/XB1 launch they tried to pass an inferior BF4 video as running on PS4 and after they were exposed they said it happened because they did the capture equipment setup wrong lol

Richard started doing damage control for MS over the Xbox One's inferior games at launch.

They have several articles about balance, secret sauce, etc.

If you read PS4 and XB1 articles from 2013/2014 you will easily spot all the laughable bias.

I'm talking about a basic scenario where you are compressing information one way, but after optimization you use a different method that gives you the same data when uncompressed, takes up less space on storage, and is decompressed faster.

The drawback is processing, then: you pay for the dedicated silicon used to unpack the data (you save on storage, so it's probably worth it).

Agreed. This:

I'm surprised people keep bringing up a small environment transition within a trailer and somehow comparing it to loading entirely new environments during gameplay in under 2 seconds as some kinda 'gotcha'.

Beyond grasping at straws at this point.

You'd think everyone would be happy at getting to finally see a game running on next gen hardware, but nope, in the 3rd millennium there is only war.

Problem for PC is that data has to go from the drive to system RAM, and then to VRAM.

That should be the reason we discussed XSX and PS5 memory setups being too slow compared with what PCs have...

GPUs with the capacity of XSX and PS5 are used to way higher memory speeds in PC.

I've linked Nvidia's GPUDirectStorage quite a few times since Cerny's "Road to PS5" video, regarding how PCs can reduce the I/O gap. However, that isn't what we are discussing here. Like you stated, GPUDirectStorage is a solution to bypass bouncing data around (from [storage --> system ram --> gpu ram] to [storage --> gpu ram]) on a computer system that is non-hUMA. This doesn't apply to consoles.
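The difference between these data paths can be sketched by counting copies. On a non-hUMA PC, the classic path copies data twice (storage to system RAM, then to VRAM); a GPUDirectStorage-style path copies once; a hUMA console lands data in its single shared pool once. The link speeds below are hypothetical (the storage links are set equal so only the hop count differs), and the model assumes each copy finishes before the next starts, which real drivers avoid by overlapping transfers.

```python
from typing import Dict, List

# Hypothetical link speeds in GB/s; storage links are equal so only hop count differs.
LINK_GBPS: Dict[str, float] = {
    "storage->system_ram": 3.5,
    "system_ram->vram": 12.0,
    "storage->vram": 3.5,
    "storage->unified_ram": 3.5,
}

PATHS: Dict[str, List[str]] = {
    "PC, CPU bounce (non-hUMA)": ["storage->system_ram", "system_ram->vram"],
    "PC, GPUDirectStorage-style (non-hUMA)": ["storage->vram"],
    "console (hUMA, one pool)": ["storage->unified_ram"],
}


def serial_copy_time_s(size_gb: float, hops: List[str]) -> float:
    """Worst case: each hop is a full copy that finishes before the next starts."""
    return sum(size_gb / LINK_GBPS[h] for h in hops)


if __name__ == "__main__":
    for name, hops in PATHS.items():
        print(f"{name}: {len(hops)} copies, {serial_copy_time_s(7.0, hops):.2f} s for 7 GB")
```

In practice the bigger saving from skipping the bounce is CPU work and system-RAM traffic rather than the serial time shown here.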

Additionally, I would rather not speculate on the little, vague information we have regarding Microsoft's Velocity Architecture. Sony didn't develop SmartShift (variable power frequency); this is AMD tech. What we can soundly say is that data from a storage device needs to be in RAM for the GPU to process/render it, regardless of how it gets there. Let's say by some miracle Microsoft did figure out a way to burst that much data and have it readily available for the GPU to render; don't you think developers would be singing its praises? The tech is finalized, and developers have their hands on the hardware.

Isn't this VRS? (I may be wrong, this may have been posted before... just wondering, there are definitely effects running at lower resolution in the GT trailer)

Yes, this is a different thing: just reorganizing your data, or finding ways to accelerate routines that are used very often so they do the same work faster and/or with less memory, has no drawbacks.

But we can totally drop the compression example. There are tons of other examples of when optimization has no drawbacks.

Devs also have NDAs; even AMD has NDAs to adhere to until Sony and MS officially go forward with certain features supported in the system.

I didn't necessarily bring up GPUDirectStorage to suggest that in itself is what either of these systems are doing, since we both agree that isn't necessary in hUMA systems. But if we're in here theorizing about certain potential features of these systems, as long as we can rationalize and describe the method behind those ideas, there should be no foul in bringing these concepts up.

People are asking about how the 100 GB of "instantly accessible" storage works on XSX; we have our theories and share them. I have mine, and I've shared them. That's part of speculation; nothing should be out of bounds in regards to technical speculation, especially if it can potentially help in understanding these systems better.

I feel I've presented a pretty reasonable theory on what could be getting done with that data in the 100 GB partition of the storage by the GPU to be supposedly "instant"; it's an idea that doesn't violate the rules of skipping RAM altogether, and it takes into account possible realistic flexibility with the OS's virtualized memory pool management of the GDDR6 in data transfer from storage to RAM, building off a concept already present in GPUs installed in non-hUMA setups. Just because the specific implementation within non-hUMA systems becomes redundant in a hUMA design, doesn't mean the concept at its root is inflexible, or can't be successfully implemented in a hUMA architecture especially if there are other design aspects to the hardware that invite the possibility.

No. It looks like aliasing to me. There's a lot of it on that car model tbh, maybe the picture is zoomed in a bunch?

I get that you're an industry professional, but that's no indication of even-handedness. A lot of professionals are also biased toward one manufacturer over another. It's pretty clear from your posts that you lean heavily toward Sony and view everything through that filter.

Knowing that there were professionals who questioned Tim Sweeney (and had to retract publicly), I don't know why it's strange that my words are questioned. But you know, I don't care. You didn't know anything when you wrote your message and you won't know anything when you finish reading mine. Ignored. Next?

Isn't this VRS? (I may be wrong, this may have been posted before... just wondering, there are definitely effects running at lower resolution in the GT trailer)

Yes, this is a different thing, just re-organizing your data or finding ways to accelerate routines that are used very often, to do the same faster and/or while using less memory, has no drawbacks.

Nope, 1:1 pixel on the screen, look at the headset of the guy below and the actual limit of the car... it seems like only the effects are running at a lower resolution (I took this from a thread with official full resolution screenshots, left them in tabs on my computer for a couple of days since I had no time to post in there--I found other annoying things, but they are unrelated to the topic, and should be because of alpha/beta software).

EDIT: this is a .jpg screenshot, so it may be compression artefacts, I have not found PNGs.

You are a very dedicated fan; has it occurred to you that you may well perceive bias where none exists? From your point of view, any article that didn't damn the Xbox One was a form of sacrilege. Any article that didn't worship the advantages of the PS4 was an outrage.

Actually the bias started way before that.

At PS4/XB1 launch they tried to pass an inferior BF4 video as running on PS4 and after they were exposed they said it happened because they did the capture equipment setup wrong lol

Richard started doing damage control for MS over the Xbox One's inferior games at launch.

They have several articles about balance, secret sauce, etc.

If you read PS4 and XB1 articles from 2013/2014 you will easily spot all the laughable bias.

No, they relayed technobabble made by marketing types without questioning it.

You are a very dedicated fan; has it occurred to you that you may well perceive bias where none exists? From your point of view, any article that didn't damn the Xbox One was a form of sacrilege. Any article that didn't worship the advantages of the PS4 was an outrage.

If that was the only issue.

You are a very dedicated fan; has it occurred to you that you may well perceive bias where none exists? From your point of view, any article that didn't damn the Xbox One was a form of sacrilege. Any article that didn't worship the advantages of the PS4 was an outrage.

Isn't this VRS? (I may be wrong, this may have been posted before... just wondering, there are definitely effects running at lower resolution in the GT trailer)

I think you need to provide examples; then we can judge whether you've got a point or are just finding bias where none exists.

If that was the only issue.

Every PS4 article has something about XB1 while XB1 articles are just XB1 articles.

Like I said, just read the articles and you will easily spot the bias.

BTW, the bias is even more evident in the videos with Alex or Richard.

1. Not my problem.

So, you posted false performance gains because they were based on a Reddit rumor. Anyway, VRS performance gains are around 15-20% IIRC in the strategy game Gears Tactics according to DF, but the compromise is lower IQ.

FYI, mesh shaders and VRS are also used in the Vulkan API, not just the DirectX 12 API.

Listen to the timestamped Cerny video carefully: Cerny said new geometry engine for RDNA2, then he describes mesh shaders and VRS without using the DX12 names.

He also mentioned a brand-new geometry engine feature, synthesised geometry on the fly. I need to watch MS's mesh shader material again to see if it's unique or not. Have you heard of that feature?

Nope, Cerny said they are culling the vertices before processing them and mentioned brand-new features for the NEW RDNA2 geometry engine.

Go watch his presentation again, and try listening.

Listen to Cerny again carefully: he calls the geometry engine NEW in PS5 for custom RDNA2 and mentions SYNTHESISE GEOMETRY ON THE FLY AS A BRAND NEW CAPABILITY... TIMESTAMPED.

Cerny mentioned performance optimisations such as the removal of back-facing and offscreen vertices and triangles.
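The culling described here (removing back-facing and offscreen triangles before they cost shading work) boils down to two cheap tests. A minimal sketch, assuming vertices are already projected into normalized device coordinates in [-1, 1] and that counter-clockwise winding means front-facing; it ignores near-plane clipping and is a generic CPU illustration, not the PS5 geometry engine or any mesh-shader API.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]
Tri = Tuple[Vec2, Vec2, Vec2]


def signed_area(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Positive for counter-clockwise winding, negative for clockwise, 0 if degenerate."""
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def is_offscreen(tri: Tri) -> bool:
    """True when all three vertices lie beyond the same edge of the [-1, 1] viewport."""
    xs = [v[0] for v in tri]
    ys = [v[1] for v in tri]
    return max(xs) < -1 or min(xs) > 1 or max(ys) < -1 or min(ys) > 1


def cull(tris: List[Tri]) -> List[Tri]:
    """Keep front-facing (CCW), non-degenerate triangles that are not trivially offscreen."""
    return [t for t in tris if signed_area(*t) > 0 and not is_offscreen(t)]


if __name__ == "__main__":
    front = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
    back = ((0.0, 0.0), (0.0, 1.0), (1.0, 0.0))  # clockwise: back-facing
    off = ((2.0, 2.0), (3.0, 2.0), (2.0, 3.0))   # entirely right of and above the viewport
    print(len(cull([front, back, off])))
```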

Real-life RTX 2080 FE and RTX 2080 Ti FE cards can exceed the paper spec numbers.

RTX 2080 Ti - 13.4 TF

RTX 2080 - 10.1 TF and VRAM limited

So at 30% more TF, do you see 30% more performance at 4K?

I don't have to dream to know that reality is not always how many people want to bend it ^^

Was this a dream (or nightmare in your case) you had?

Sorry, I did read it, but I didn't get the sarcasm in it. I have only seen that said about the PS5, but if you saw anyone saying it about the XBSX, the same thing applies.

SSD->Memory->GPU.

Wow, what is that up there? I thought you posted this (keep the goalpost where you first had it, plz).

What you are implying here is that the GPUs will perform the same.

Does the 2080 Ti perform the same as the 2080?

There actually is a lot more going on, such as bandwidth, that makes the delta between the 2080 Ti and 2080 what it is at 4K.

What is "bigger API abstraction"?

And why say both have 14 Gbps when one has over 100 GB/s more bandwidth?

And what is this narrow vs. wider for CPU access and audio access? Do you know the bus width of these subsystems?

Compression is a compromise; you're trading space for something.

And why are you qualifying whether optimization needs to mean something to the player? (Goalpost moving.)

And why would you even think there are no drawbacks? (Maybe you should qualify that statement.)

Real-life RTX 2080 FE and RTX 2080 Ti FE can exceed paper spec numbers.

NVIDIA GeForce RTX 2080 Founders Edition 8 GB Review

www.techpowerup.com

The RTX 2080 FE averages 1897 MHz; with 46 SMs (the CU equivalent), that yields ~11.17 TFLOPS.

NVIDIA GeForce RTX 2080 Ti Founders Edition 11 GB Review

www.techpowerup.com

The RTX 2080 Ti FE averages 1824 MHz; with 68 SMs (the CU equivalent), that yields ~15.87 TFLOPS.

15.87 / 11.17 = 1.42.
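The arithmetic above can be reproduced as Turing SMs × 64 FP32 cores × 2 FLOPs per clock × clock. A minimal sketch comparing Nvidia's reference boost clocks (1710 MHz for the RTX 2080, 1545 MHz for the 2080 Ti, which give the 10.1 and 13.4 TF paper figures) against the average clocks measured in the reviews above; the dictionary layout is just for illustration.

```python
CORES_PER_SM = 64  # FP32 CUDA cores per Turing SM (same lane count as an AMD CU)


def tflops(sms: int, clock_mhz: float) -> float:
    """FP32 TFLOPS: SMs x 64 cores x 2 FLOPs per FMA x clock (MHz) / 1e6."""
    return sms * CORES_PER_SM * 2 * clock_mhz / 1e6


# (SMs, reference boost MHz, average MHz measured in the reviews above)
cards = {"RTX 2080": (46, 1710, 1897), "RTX 2080 Ti": (68, 1545, 1824)}

paper = {k: tflops(sm, boost) for k, (sm, boost, _) in cards.items()}
real = {k: tflops(sm, avg) for k, (sm, _, avg) in cards.items()}

if __name__ == "__main__":
    print(f"paper: {paper['RTX 2080 Ti']:.2f} / {paper['RTX 2080']:.2f} = "
          f"{paper['RTX 2080 Ti'] / paper['RTX 2080']:.2f}")
    print(f"measured clocks: {real['RTX 2080 Ti']:.2f} / {real['RTX 2080']:.2f} = "
          f"{real['RTX 2080 Ti'] / real['RTX 2080']:.2f}")
```

On paper the 2080 Ti is about 34% ahead, but with the measured clocks it is about 42% ahead, which is the point being made: paper TF can understate the real gap.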

I own both the MSI RTX 2080 Ti Gaming X Trio and the ASUS RTX 2080 Dual EVO GPUs.

They literally say they don’t believe it; it’s not a compliment, it’s actually a lack of trust, which is the opposite of a compliment.

And it’s pretty normal; I’m skeptical too about any of these consoles reaching a graphical level that damn good.

I hope so, but right now the normal reaction to something like that being possible in-game is skepticism.

You guys realize you're wasting your time with an obviously biased PSVR dev right?

He knows nothing beyond the VR stuff and has no XSX devkit. And let's not forget he's one of the "insiders" telling people the PS5 was in "puberty", which then led to the 13.3 TF BS.

Pretty much just another OsirisBlack.

I get that you're an industry professional, but that's no indication of even-handedness. A lot of professionals are also biased toward one manufacturer over another. It's pretty clear from your posts that you lean heavily toward Sony and view everything through that filter.

Dear brother, don't waste your time here, with all due respect to the respectful posters with similar/opposite opinions. Time will answer all these, and PS5 has shown things unseen on any other gaming device so far. Let the games do the talking.

RTX 2080 has variable clocks?

RTX 2080 Ti - 13.4 TF

RTX 2080 - 10.1 TF and VRAM limited

So at 30% more TF, do you see 30% more performance at 4K?