Bo_Hazem

Banned

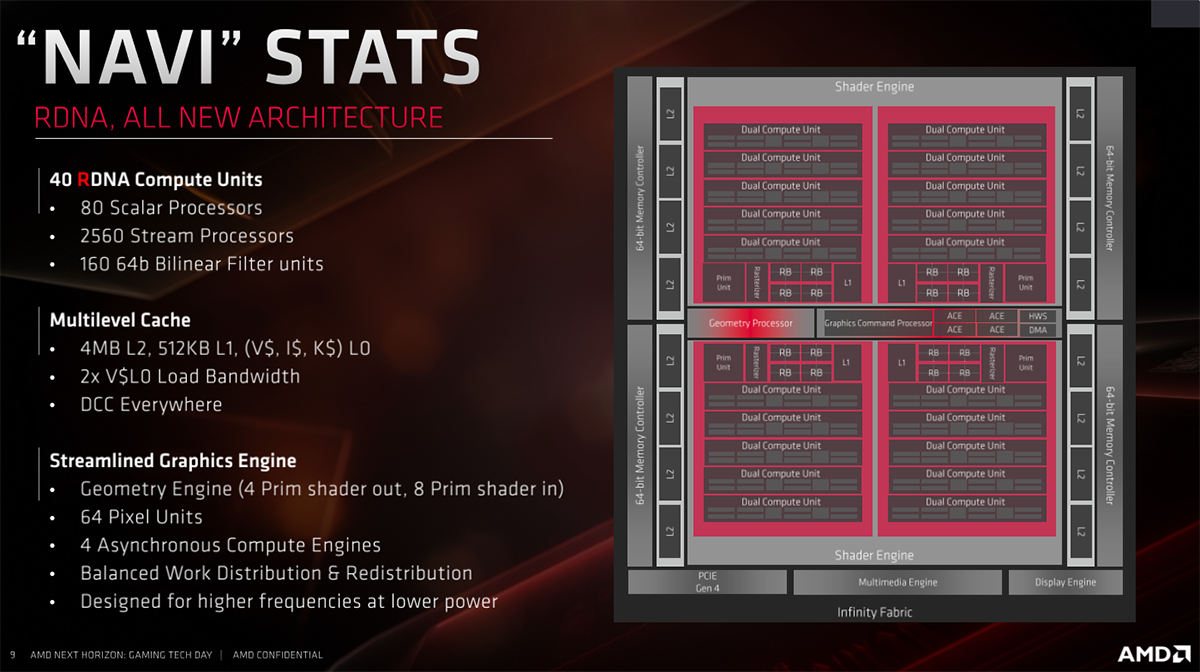

I'm just saying that, going by the GE and PS stuff on RDNA1, both of those were already present in the first generation. So while Primitive Shaders work a lot like Mesh Shaders, they aren't quite the same thing and have some very key differences. If you're insisting Sony's Primitive Shaders aren't customized with at least some of the improvements from Mesh Shaders, and their Geometry Engine isn't too different from what AMD already referred to as Geometry Engines in RDNA1 then...

...well, I dunno. It would mean they chose to optimize/fine-tune aspects of older technology kind of like @Elog is suggesting, maybe with inspirations from newer roadmap features, rather than taking those newer features wholesale. The question is though, why would they do that? It would kind of lend credence to an idea that they did have an earlier launch planned, and an earlier launch would have meant going with an RDNA2 base GPU would not have been possible. So why not use RDNA1 as the base and bring some RDNA2 features from the roadmap forward while customizing parts of the RDNA1 to reflect potential performance metrics of RDNA2 GPUs?

Sony using AMD's nomenclature for the GE and PS seems to basically stealth-confirm that for me, and again if you go back to the Ariel and Oberon testing data, you do see a progression from RDNA1 (Ariel) to RDNA2 (Oberon), but how and what type of transition that actually was between them is extremely curious. I think Sony deciding on a 36 CU GPU from the outset also betrays the intention of using a custom RDNA2 GPU, in their case possibly meaning RDNA1 as the base blueprint and bringing forward RDNA2 features in a way to fit that base blueprint. And then things on Sony's end like the cache scrubbers possibly influencing AMD with future RDNA3 features. That seems like it would be different from MS, who seemingly have gone with RDNA2 as their base blueprint and may be doing their own customizations that could've also influenced some RDNA3 features in the near future.

Also this is just speculation on my end, but since Sony's BC is hardware-based, that might've also influenced the decision to go with a custom RDNA2 GPU that has base RDNA1 and other RDNA2 features integrated in (even if how the hardware for those features works is a bit different from standard RDNA2). RDNA1 still has a good deal of GCN hardware support, while RDNA2 got rid of any base GCN hardware architecture in its design (or the vast majority of it; IIRC it can still support GCN microcode but basically via emulating it?).

You might be on to something. I am starting to think now they've gone with a custom RDNA2 GPU with RDNA1 architecture at the base, but bringing forward some RDNA2 features (even if they are implemented differently on Sony's end) to customize aspects of the RDNA1 feature set and hardware architecture, basically using those RDNA2 features as inspiration to address things in their chosen route that would've caused performance issues.

The question is what exactly those things are, and to what extent those changes may've gone. I think if you look at the timeline of PS5 development and the information we have been getting for the past two years or so, this idea of their custom RDNA2 GPU being an RDNA1 base with alterations bringing RDNA2 roadmap features onboard and implemented as-needed starts to stick. It'd explain the GPU leaks and testing data, the rumors of an earlier launch, etc. It'd explain the decision to go with a 36 CU GPU from the outset and focus on clocks, and some GPU customizations we know about such as the cache scrubbers. It'd explain why the BC is hardware-dependent, etc.

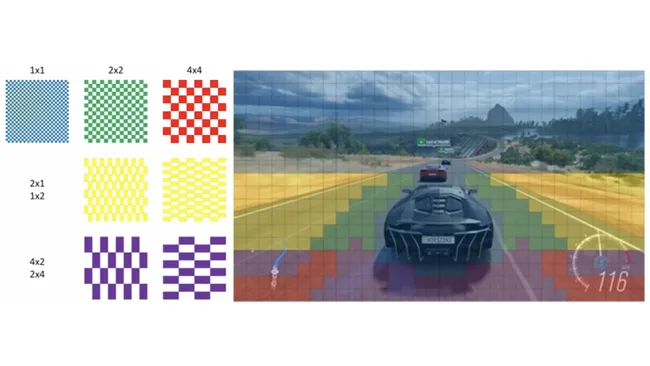

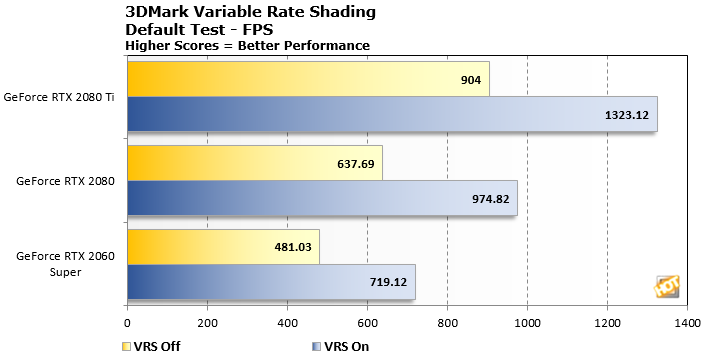

I think the benefit of Tier 2 VRS isn't so much in visual differences you can see in screens or in motion, but in the fact that the actual hardware is having a good chunk of performance resources freed up, which can be applied to other areas, graphics or not.

PS4/Pro IIRC uses foveated rendering, which I think is the Cerny patent that MS and Intel's stuff refers to. However, foveated rendering was at least primarily designed with the PSVR in mind, which is somewhat different from a similar technique on a non-VR display output. In principle there are some similarities, but in actual practice and implementation there are key differences.

Man, I missed your walls of text

And about VRS, the only game suspected of using LODs and VRS is GT7, as you can notice pop-in in that tunnel gameplay and some noticeable aliasing in some footage that's more than likely caused by VRS, just like Nvidia's $5,500 RTX8000 gameplay demo that showed many severe signs of VRS ruining the final image.

VRS will be critical for VR with eye tracking, as you're aiming for double the output at 4K@120Hz on each eye. That's why GT7 producer Kazunori Yamauchi said that they're aiming for 4K@240fps instead of 8K resolution:

“I think, display resolution-wise, 4K resolution is enough.”

“Rather than a spatial resolution that you’re talking about, I’m more interested in the advancements we can make in terms of the time resolution. In terms of frames per second, rather than staying at 60 fps, I’m more interested in raising it to 120 fps or even 240 fps. I think that’s what’s going to be changing the experience from here on forward.”

Gran Turismo's Future: "4K Resolution is Enough", But 240fps is the Target

Kazunori Yamauchi has made some interesting comments about the future direction of Gran Turismo's graphics. The Polyphony Digital studio boss has suggested that frame rate, rather than display resolution, may be the area in which the next titles in the series look to improve. Yamauchi was partici
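To put rough numbers on the "4K@120Hz on each eye" versus "4K@240fps" comparison, here's a quick back-of-the-envelope sketch. It assumes "4K" means 3840x2160 and "8K" means 7680x4320, and it uses raw pixels per second as a crude stand-in for rendering load. The function name is just made up for illustration, and none of this accounts for VRS, foveation, or shared work between the two eyes.

```python
# Rough pixel-throughput comparison (assumption: raw pixels/second as a load proxy).
# Resolutions are assumed: "4K" = 3840x2160, "8K" = 7680x4320.

def pixels_per_second(width, height, fps, views=1):
    """Pixels that must be produced each second for `views` displays/eyes."""
    return width * height * fps * views

UHD_4K = (3840, 2160)
UHD_8K = (7680, 4320)

vr_4k120_two_eyes = pixels_per_second(*UHD_4K, fps=120, views=2)
flat_4k240 = pixels_per_second(*UHD_4K, fps=240)
flat_8k60 = pixels_per_second(*UHD_8K, fps=60)

print(f"4K@120 x 2 eyes: {vr_4k120_two_eyes:,} px/s")
print(f"4K@240 flat:     {flat_4k240:,} px/s")
print(f"8K@60 flat:      {flat_8k60:,} px/s")
```

By this crude measure all three targets come out to the same raw pixel rate, so the choice between them is more about where you spend that budget (time resolution vs. spatial resolution) than about the total.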
