Bojji

Member

50 Real® TF might be plausible.

They weren't able to double the PS5's power in 4 years (the PS4 Pro did that in 3). I doubt they will be able to double the Pro's power in the next 3 or 4 years (that's 33TF), let alone go to 50TF...
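The doubling argument is simple compound-growth arithmetic. A rough sketch, assuming commonly cited FP32 figures (PS4 1.84 TF, PS4 Pro 4.2 TF, PS5 10.28 TF) and the widely reported, not officially confirmed, ~16.7 TF for the PS5 Pro. These numbers are assumptions, not from the post:

```python
# FP32 TFLOPS figures. Assumption: commonly cited numbers; the PS5 Pro value
# is the widely reported ~16.7 TF, not an official Sony figure.
PS4, PS4_PRO = 1.84, 4.2     # Pro launched ~3 years after the base console
PS5, PS5_PRO = 10.28, 16.7   # Pro launched ~4 years after the base console


def multiplier(old: float, new: float) -> float:
    """How many times more compute the newer console has."""
    return new / old


def annual_growth(old: float, new: float, years: float) -> float:
    """Compound yearly growth rate needed to go from `old` to `new` in `years`."""
    return (new / old) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"PS4 -> PS4 Pro: x{multiplier(PS4, PS4_PRO):.2f} in 3 years")
    print(f"PS5 -> PS5 Pro: x{multiplier(PS5, PS5_PRO):.2f} in 4 years")
    print(f"Doubling the Pro: {2 * PS5_PRO:.1f} TF")
    for target in (2 * PS5_PRO, 50.0):
        rate = annual_growth(PS5_PRO, target, 4)
        print(f"{target:.1f} TF in 4 years needs ~{rate:.0%} per year")
```

By this rough measure, the PS5 to PS5 Pro step grew about 13% per year, so reaching 50 TF four years after the Pro would need more than twice that yearly rate.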

People want their cake and to eat it too. There is just no money in spending all this time on maximising hardware usage and amazing graphics only for it to flop like The Order: 1886. The AAA games market has contracted, and there are people who are even gunning for its demise by not appreciating any of it. Then they turn around and say that the leaps aren't big enough. The way the industry has tried to deal with this decline and the increased risk/cost, while still delivering those leaps, is to make development cheaper by offloading the burden onto the hardware and middleware. UE5, AI and RT are the main drivers of that now.

Has anyone worried about this stuff considered that the real limitation is the sheer amount of work and complexity involved in creating software that maximizes the potential of the hardware?

The reason middleware engines like UE have become dominant is that rolling your own engine is such a monumental task that very few are willing to entertain the idea, and that's just creating the toolset, not a finished product.

I hate to point this out, but has movie CG improved that much over the past decade or so? No, it hasn't. If anything, it's declined due to teams being given inadequate time for polish passes.

This is way less complex work than games because there's no interactive aspect to consider, and the creators have absolute control over what is and isn't presented and essentially have unlimited compute capacity to create their vision offline.

Crazy thought; maybe you should spend more time appreciating WHAT IS, and how much work has gone into it, rather than belly-aching over the "failure" to attain some fantasy level that nobody ever seems to quantify outside of metrics that are dubiously relevant.

Why do that when you can just troll?

Dying? Open your eyes and do some research.

The odds of a $500 PS6 in 2028 are almost nil. $600 is more likely. Same price as the PS5 7 years later? I doubt it. Maybe if they have a cheaper Digital Edition and a disc version… even then, the DE could be $600 and the disc version $700.

PS6 will 100% disappoint in raw numbers and visuals. We are at the limits of $499 and a 230W TDP. Nvidia-powered PCs will leave consoles further in the distance.

PS4, masterfully designed. A tablet CPU with a 5400 RPM HDD over a USB 2.0 interface. Then you got the PS4 Pro, a massively unbalanced system.

Bolded is quite an understatement; Sony's HW design team led by Cerny are legendary. Vita, PS4/Pro and PS5/Pro are all masterfully designed. I'm sure PS6 will surprise everyone.

Exactly. I would love to see the "power" of modern consoles and PC tech applied to actual advances in AI and gameplay (systems). Good graphics are boring.

None of this is a problem for me. The development has to catch up to the tech. Sure, we have better geometry, textures and shinier surfaces, but gameplay hasn’t evolved the way I expected it to.

Half-Life 2 came out in 2004 and had the Gravity Gun, using physics to solve puzzles, battle enemies and navigate the environment. Fast forward two decades, and there hasn’t been much evolution on that front. No amount of raster is going to fix that.

Give me Bloodborne 2 at the current level of graphics and I’ll be happy as can be.

Oh, it's the edgy neo account that's jealous of people spending money again.

No thanks.

Enjoy being PC cucked.

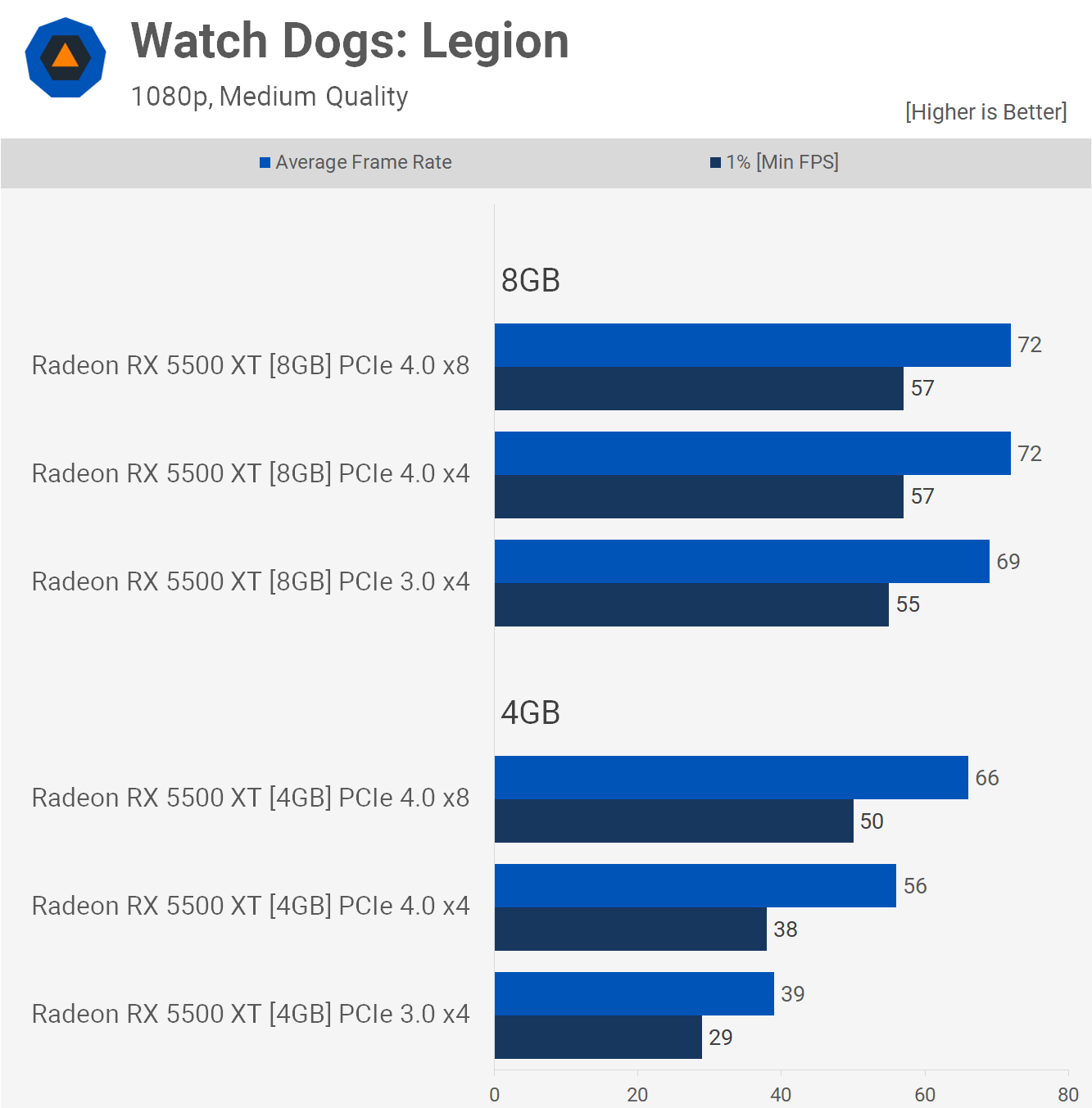

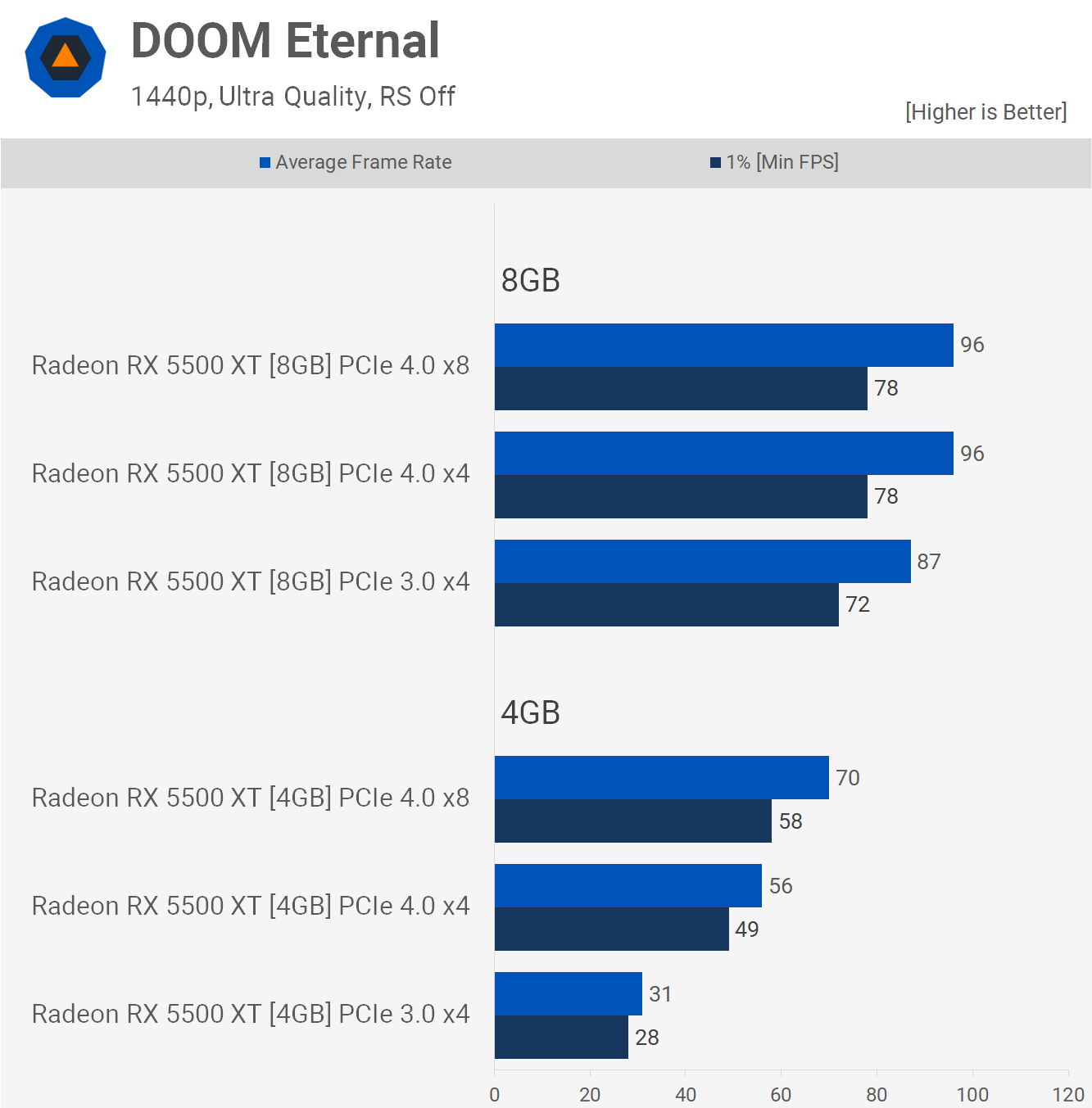

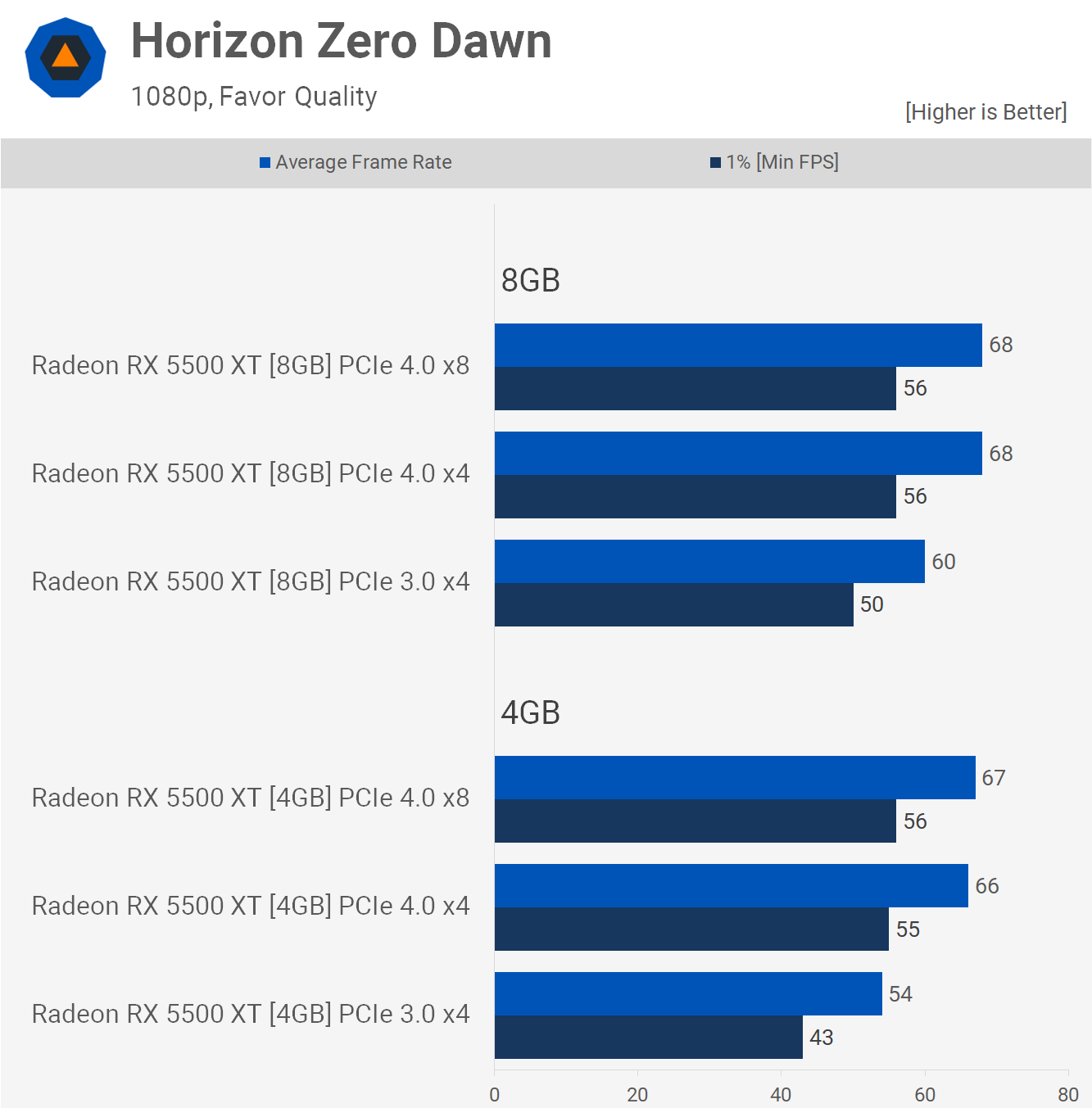

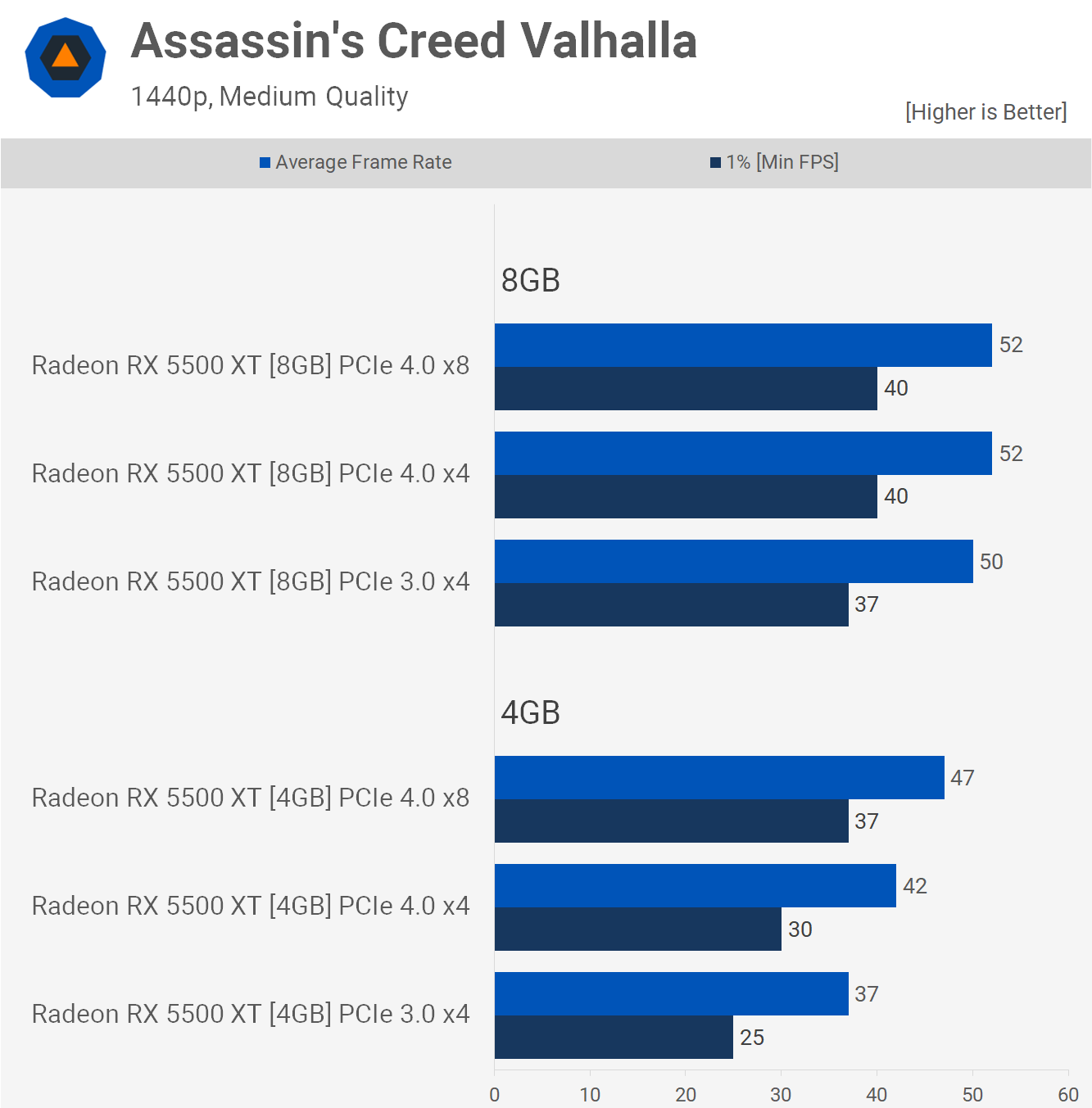

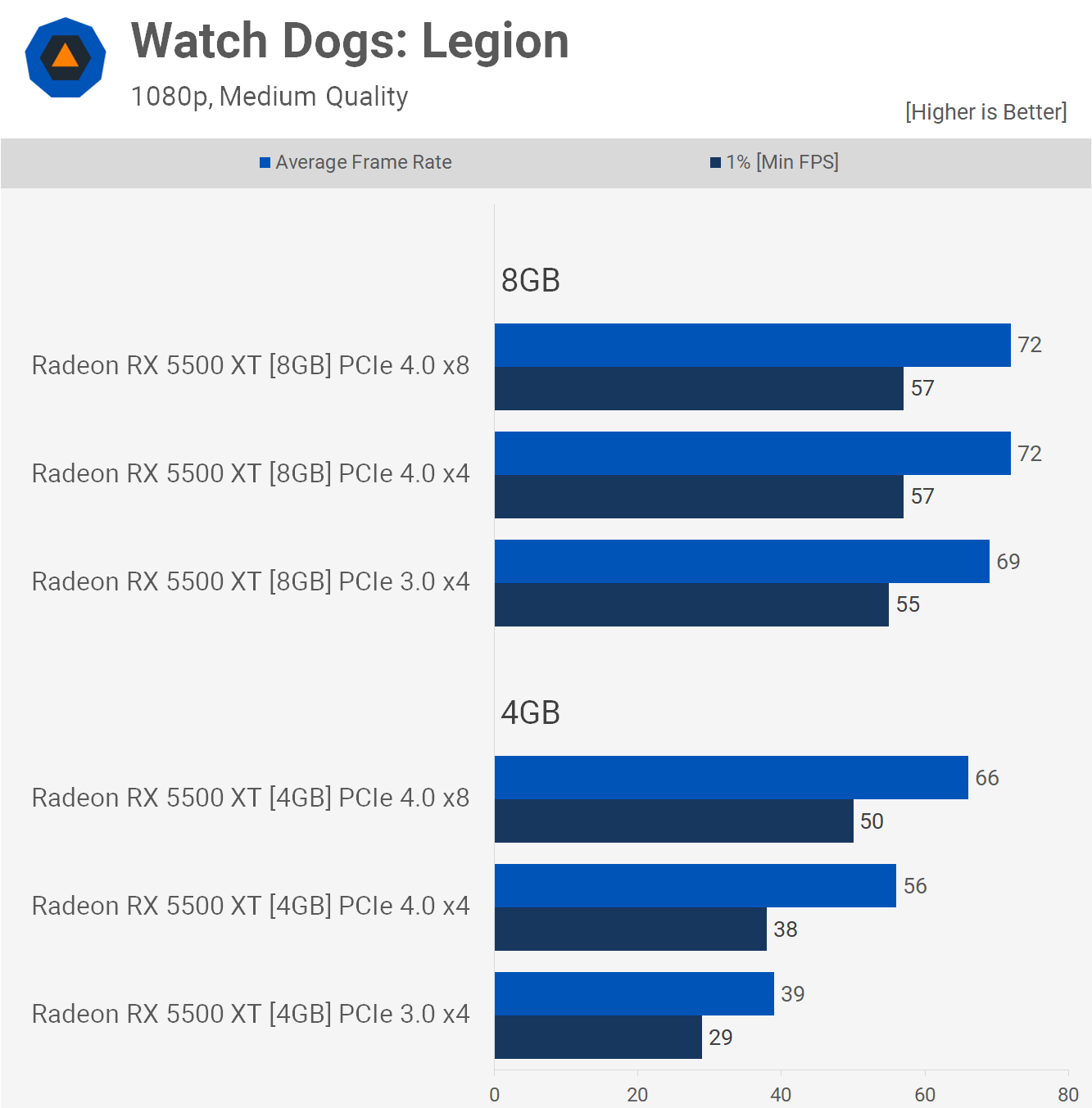

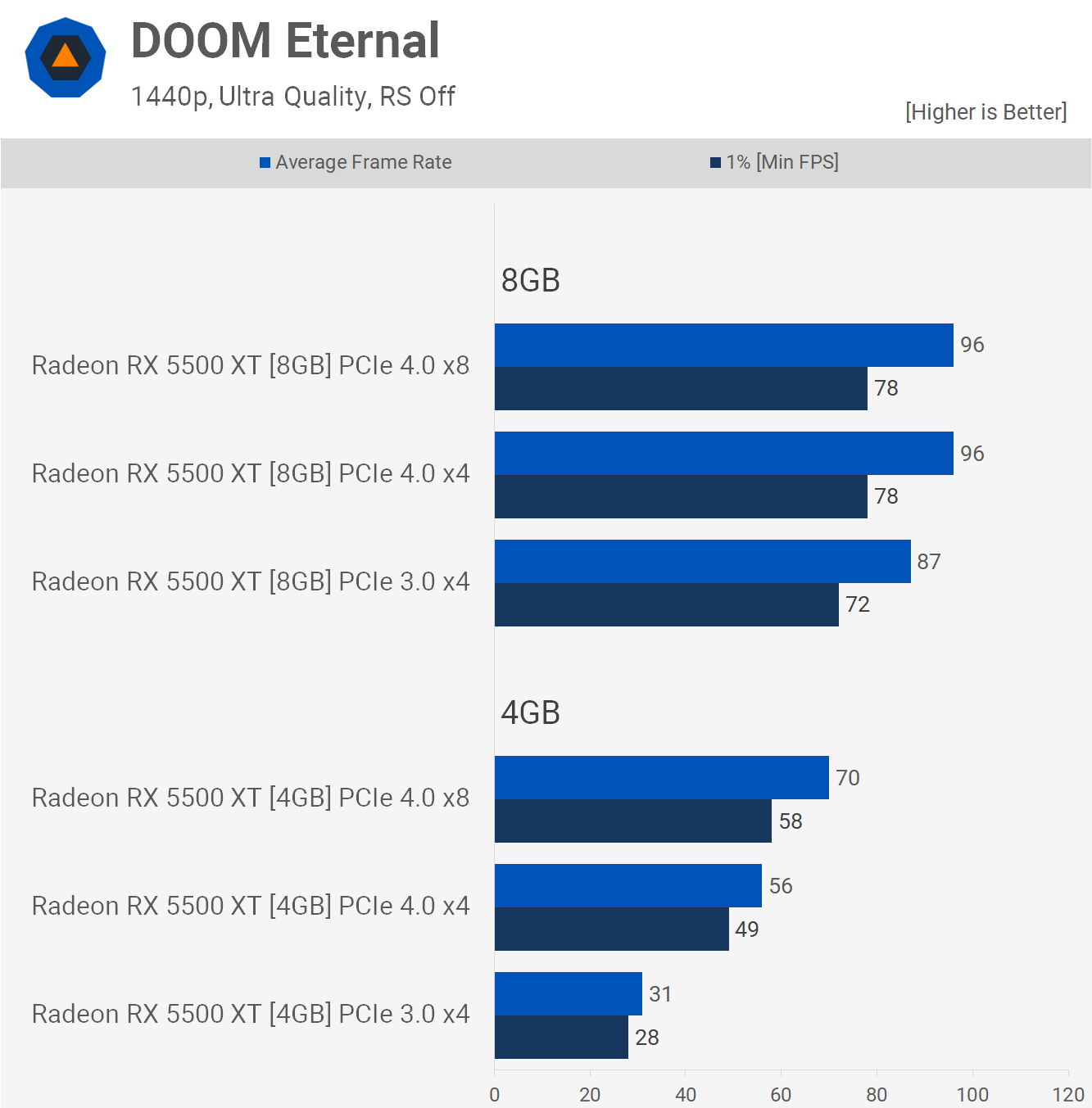

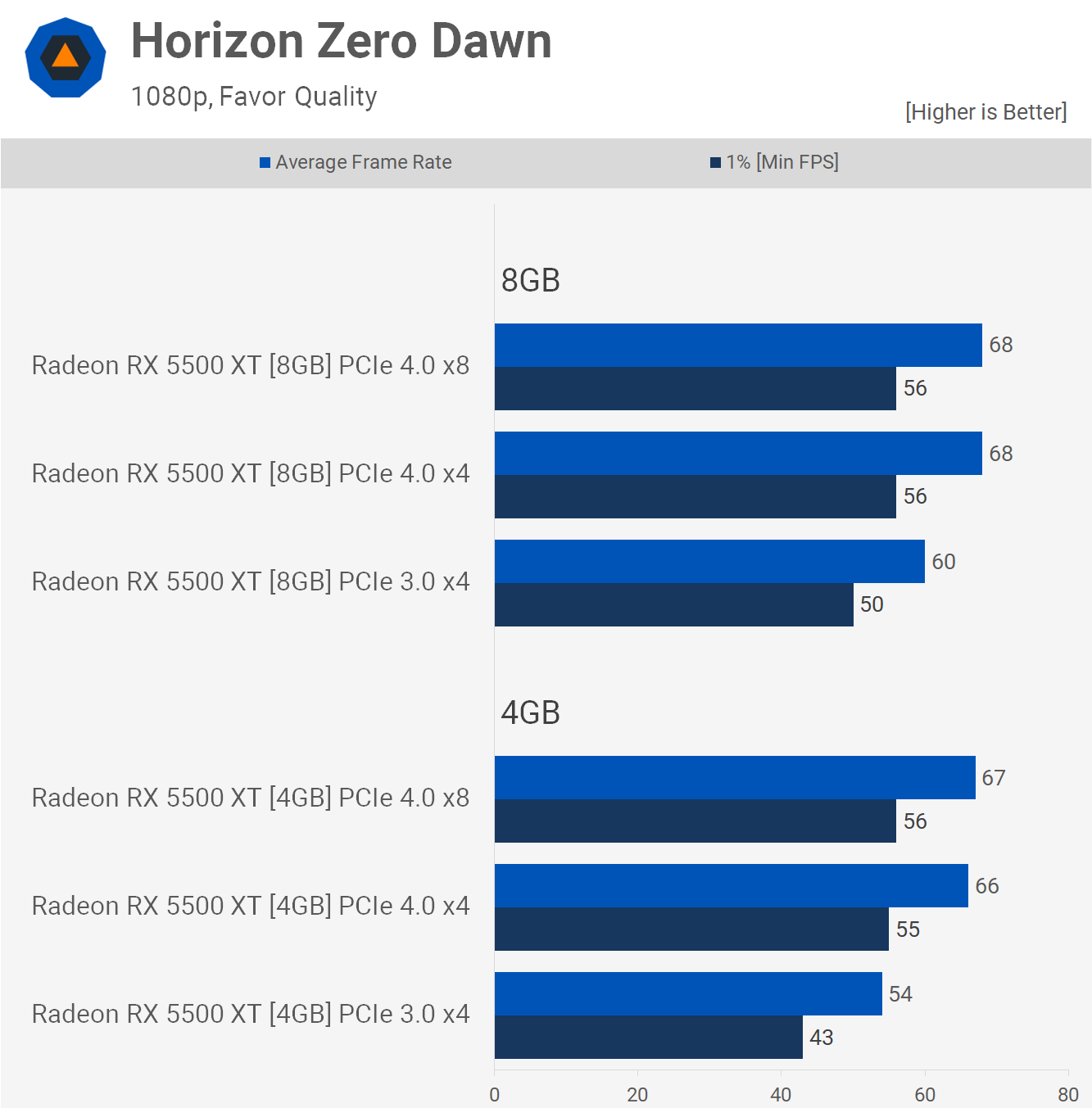

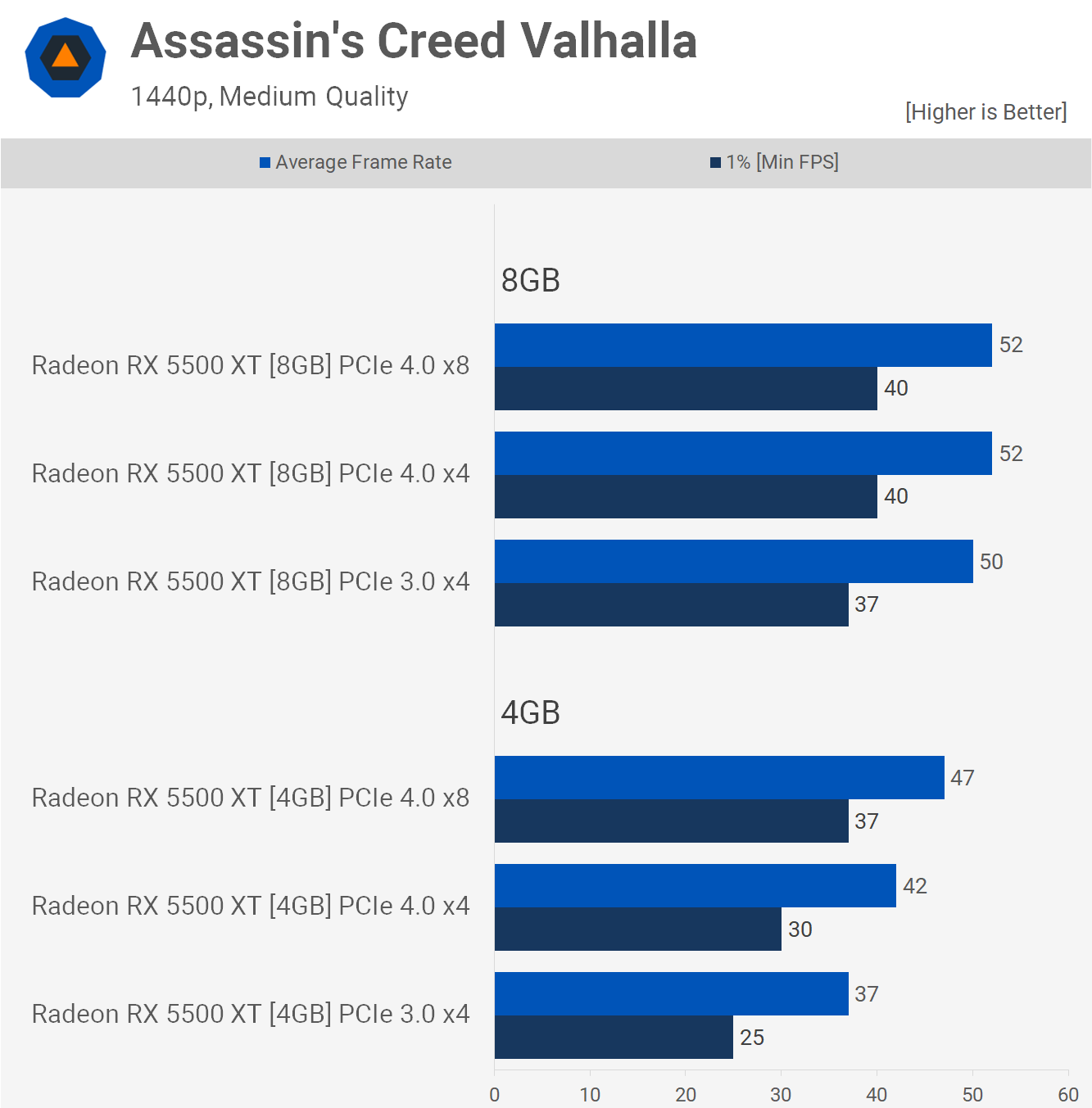

Speed differences are too low to be really relevant. Once a game runs out of VRAM and spills into system RAM, you are fucked:

This is precisely what I’m talking about. You can have all the VRAM in the world, but bandwidth will always be the bottleneck.

It's not always the case. I downloaded a texture pack for W40K Space Marine 2 that required around 18GB of VRAM, and that's real VRAM usage, not just allocation. Some people had problems on 16GB VRAM cards because performance tanked with this texture pack, or the game stuttered, but on my PC the game still ran smoothly because PCIe transfers were fast enough.

This test shows how drastically a fast PCIe link can improve performance in VRAM-limited situations.

What's funny is that the 4GB card in a PCIe 4.0 slot was faster in some cases than the 8GB model on PCIe 3.0 (in both minimum and average fps).
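Why the PCIe generation matters once data spills out of VRAM comes down to link bandwidth. A rough sketch, assuming an x8 link (common on cards in this class, but an assumption here) and a hypothetical 128-bit card with 18 Gbps GDDR6 as the local-memory reference:

```python
# Transfer rates per lane in GT/s; PCIe 3.0 and later use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction PCIe link bandwidth in GB/s (ignores protocol overhead)."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


def vram_gb_per_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak local memory bandwidth: bus width in bytes times per-pin data rate."""
    return bus_bits / 8 * gbps_per_pin


if __name__ == "__main__":
    local = vram_gb_per_s(128, 18.0)  # hypothetical 128-bit card, 18 Gbps GDDR6
    for gen in (3, 4):
        link = pcie_gb_per_s(gen, 8)  # assumption: x8 link
        print(f"PCIe {gen}.0 x8: {link:.1f} GB/s vs VRAM {local:.0f} GB/s "
              f"({local / link:.0f}x less than VRAM)")
```

PCIe 4.0 doubles the spill-over bandwidth of 3.0, yet both stay more than an order of magnitude below local VRAM, so a faster slot can soften the hit without replacing the missing memory.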

Getting an 8GB card in 2025 is a terrible idea, though. Games are getting heavier and heavier on memory.

This is where the future is. All the rest is a waste of time.

As per Cerny’s presentation too, this is where the future of graphics rendering is heading: sparse rendering with AI inferring the rest of the pixels.
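To put a number on "sparse rendering": with upscaling, the GPU natively renders only a fraction of the output pixels and the model infers the rest. A minimal sketch, assuming standard 16:9 resolutions; it counts pixels only and says nothing about how any particular upscaler works internally:

```python
# Standard 16:9 resolutions (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def rendered_fraction(internal: str, output: str) -> float:
    """Share of output pixels actually rendered at the internal resolution."""
    wi, hi = RESOLUTIONS[internal]
    wo, ho = RESOLUTIONS[output]
    return (wi * hi) / (wo * ho)


if __name__ == "__main__":
    for internal in ("1080p", "1440p"):
        share = rendered_fraction(internal, "4K")
        print(f"{internal} -> 4K: {share:.0%} rendered, {1 - share:.0%} inferred")
```

Upscaling 1080p to 4K means only a quarter of the output pixels are rendered natively.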

Very high and ultra settings with ray tracing on? The 4060 is a low-end card; why would anyone do this? These cards are getting stellar fps with all those features enabled, so what’s the complaint here?

It's not true at all. This FC example is an anomaly.

Reality looks like this:

What does "might" mean?

Stop setting the game to very high and ultra at a tier where it’s not acceptable. The textures alone will fill the VRAM. You shouldn’t be using 4K assets at 1080p. The low-tier GPU isn’t tuned for that.

It's a 4060 Ti...

Newer games make 8GB cards chug even below the highest settings. But you can keep denying reality.

It also shows what happens when a card runs out of VRAM. It's nothing like the FC example, is it? You have exactly the same GPU, but the one with more memory performs better.

Makes sense. The extra $100 goes a long way in that regard. Pay budget prices, get budget performance.

Fuck me...

It's very realistic when you have enough VRAM. Ratchet runs at 60fps with very high settings and RT on a 16GB card.

Yeah, but it's a very unrealistic "might".

The 4060 is entry-level, though.

Neither the 4060 nor the 4060 Ti should have had 8GB in the first place: 10GB minimum for the 4060 and 12GB for the 4060 Ti.

8GB is an entry-level memory amount, for cards like the xx50 series.

But Nvidia is gonna Nvidia...

You said that once "game goes out of vram and spills into system RAM you are fucked", and my example showed that's not always the case.

"Not always the case" means there will still be games where even fast PCIe won't be enough to fix performance in VRAM-limited situations. RT games are especially sensitive to memory bandwidth and can crash to desktop as soon as you run out of VRAM (especially RE4 Remake). TechSpot tested games without RT, and PCIe 4.0 bandwidth was enough to improve performance in every single raster game they tested, although in most games the card with more VRAM was still faster.

I'm not saying that a fast PCIe slot can save GPUs with a low amount of VRAM, but it certainly can help in some cases. That's why some people with 16GB VRAM cards have problems in W40K Space Marine 2 with the 100GB texture pack, while I have no problems on my card despite having the same 16GB of VRAM. Faster PCIe clearly made a difference in my case.

I don’t disagree with you.

For some reason they didn't make a 4050 (yet), but the 4060 shouldn't have less memory than the 3060. It should AT LEAST have that 10GB.

Fast PCIe might help somewhat with the performance loss, but it's not the solution.

You have to kill texture quality/resolution or switch to a GPU with more memory.

10GB doesn't work with a 128-bit bus.

Inb4 the 5060 ends up being 9 GB (96-bit)

160-bit is possible, but Nvidia isn't going to do that.
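The bus-width point follows from how GDDR memory is wired: each chip normally sits on its own 32-bit channel, so capacity is the number of channels times the chip density (doubled in clamshell mode, where two chips share one channel). A minimal sketch, assuming 2GB (16Gb) and 3GB (24Gb) chip densities; which chips a given card would actually use is outside what this arithmetic can say:

```python
def vram_capacity_gb(bus_bits: int, chip_gb: int, clamshell: bool = False) -> int:
    """VRAM capacity for a GDDR bus: one chip per 32-bit channel, two in clamshell mode."""
    if bus_bits % 32:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = (bus_bits // 32) * (2 if clamshell else 1)
    return chips * chip_gb


if __name__ == "__main__":
    configs = [
        (128, 2, False),  # 4 x 2GB chips
        (128, 2, True),   # clamshell: 8 x 2GB chips
        (160, 2, False),  # 5 x 2GB chips
        (96, 3, False),   # 3 x 3GB chips
        (192, 2, False),  # 6 x 2GB chips
    ]
    for bus, chip, clam in configs:
        tag = " (clamshell)" if clam else ""
        print(f"{bus}-bit, {chip}GB chips{tag}: {vram_capacity_gb(bus, chip, clam)}GB")
```

With 2GB chips, a 128-bit bus lands on 8GB or 16GB, never 10GB; 10GB needs a 160-bit bus, and 9GB on 96-bit needs 3GB chips.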

They don't "have to" do shit. They will either:

This is better for the video game industry (not the GPU makers): now the devs have to squeeze what we have. Time for the talented devs to shine and the mediocre ones to git good.

Those cards should have been designed with the right VRAM amounts.

Nvidia was super lazy with the 4060/Ti.

I waited three weeks and it seems the RTX 50 series is disappointing. Without node shrinks, we cannot expect massive performance gains and lower prices.

You should wait at least 2 more days; I don't think this is gonna age well.

I was expecting some architectural change; it looks like a minor incremental revision. Ada was already on 4nm, so there's no room for node improvement.