This is still a speculation and rumor thread, but I suspect some people have committed themselves to diminishing every aspect of PS5 as much as they can. They are not discussing in good faith, because their arguments don't come from logic or from any reference point (mainly history), that is, from what has happened before or from the trends in Sony's hardware design.

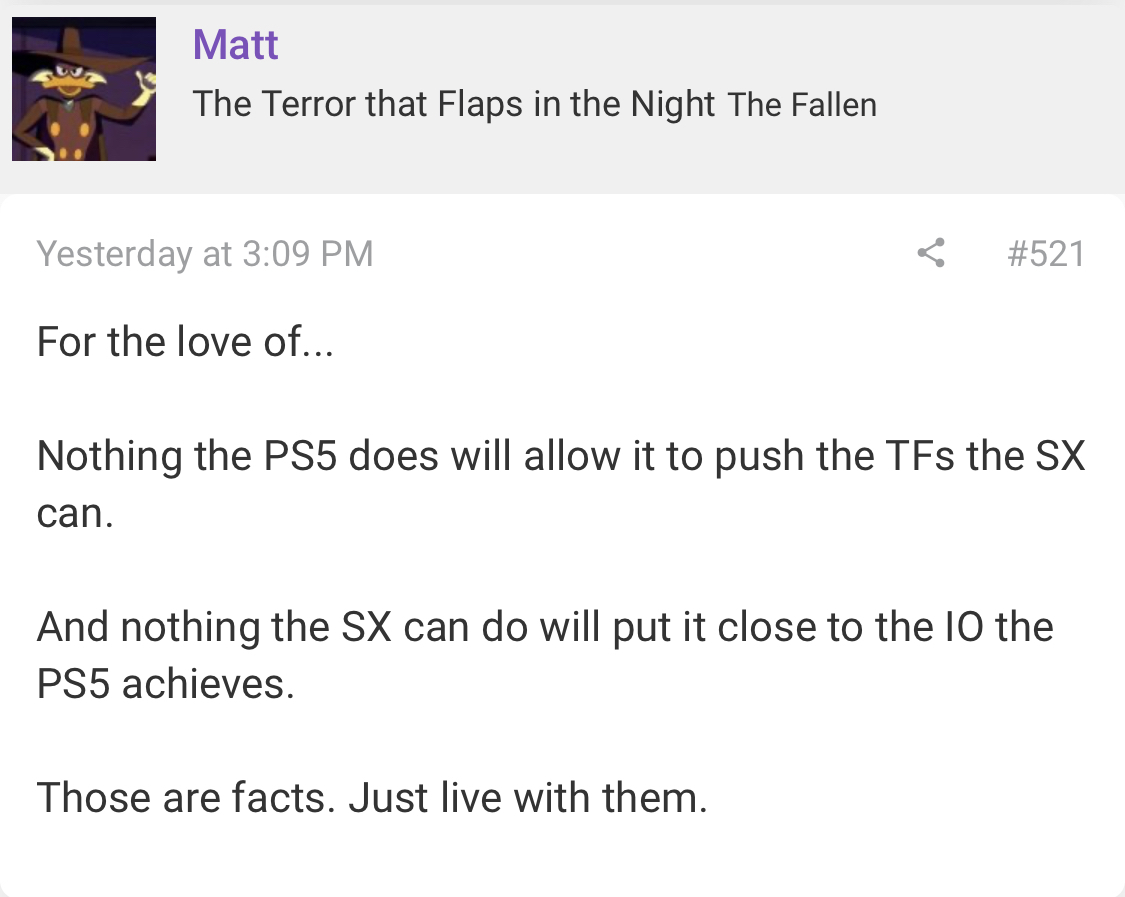

1) How could PS5 be RDNA 1 when it's doing raytracing and has a more advanced method of VRS than RDNA 2, since it has an entire geometry engine with cache scrubbers? If PS5 is RDNA 1, why can it do raytracing? Can I be linked to one Navi 1 card that has built-in RT capabilities like RDNA 2 or the next-gen consoles, including PS5? You see, this whole RDNA 1 bit from certain posters is only used to suggest that PS5 is not as advanced as Series X, and that has been happening for a while now. Cerny has confirmed it, Lisa Su has confirmed it, but people need to get an edge somehow. Apparently the 16% TF divide and the 3% CPU divide are not enough.
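For anyone who wants to check the TF divide rather than take my word for it, here is a rough sketch of the usual peak-FLOPS arithmetic (CUs x 64 lanes x 2 ops per clock x clock speed). The CU counts (36 and 52) and the Series X clock (1.825 GHz) are assumptions taken from the public spec sheets, not from anything in this post, and the PS5 figure uses its peak clock of 2.23 GHz. The function name is just something I made up for illustration.

```python
def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS: CUs x 64 lanes x 2 ops per clock (FMA) x clock in GHz."""
    lanes_per_cu = 64            # shader lanes per compute unit
    ops_per_lane_per_clock = 2   # a fused multiply-add counts as two operations
    gflops = compute_units * lanes_per_cu * ops_per_lane_per_clock * clock_ghz
    return gflops / 1000.0

# Assumed public specs (not stated in this post apart from the 2.23 GHz clock).
ps5 = peak_tflops(36, 2.23)
series_x = peak_tflops(52, 1.825)

# The "divide" depends on which console you measure against.
gap_vs_series_x = (series_x - ps5) / series_x
gap_vs_ps5 = (series_x - ps5) / ps5

print(f"PS5: {ps5:.2f} TF, Series X: {series_x:.2f} TF")
print(f"Gap relative to Series X: {gap_vs_series_x:.1%}")
print(f"Gap relative to PS5: {gap_vs_ps5:.1%}")
```

Under those assumptions the gap lands at roughly 15% when measured against Series X and roughly 18% when measured against PS5, so the 16% I quoted is the right ballpark. Keep in mind these are peak paper numbers only and say nothing about the customizations discussed in the rest of this post.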

2) Sony has a history of going cutting edge in every generation; they use the latest features available. When other consoles brought proprietary discs, like Dreamcast's GD-ROM and GC's 1.5 GB discs, Sony went with 4.7 GB DVDs, had a ton of USB ports, FireWire, you name it, and went gung-ho with the CPU and GPU design in-house. PS3 was the only console to come with a ton of features like Wi-Fi, top-tier sound and media features including Blu-ray (which was actually a new format, unlike this gen), and again there was lots of innovation with the in-house Cell CPU. PS4 had 8 GB of GDDR5 when most people were saying 4 GB would be overkill, just like the same people are trying to undersell the fast SSD this time. It came with lots of day-one features which built up a lot of popularity on consoles, like screenshots, game recording and streaming with Live from PlayStation, the Share button, a speaker on the controller, the touchpad, more CUs for GPGPU and, of course, extra memory internally for media features and an extra 20 GB/s bus to streamline the CPU/GPU/memory. Then came the Pro with its butterfly setup, 64 ROPs, Vega features before Vega released, FP16/RPM, the ID buffer, a Primitive Discard Accelerator that dealt with triangle load and efficiency, and DCC for better compression in the pipeline. Even the work distributor had features ahead of Polaris, improved tessellation for one. So you see, Sony has a history of pushing its customization work in its consoles with the latest technology every time.

So how should we be looking at the PS5? If Cerny has a more advanced, customized system to offload triangles and details that are not in immediate camera view, or not in view of what the player is focusing on in-game, why should it be called less than RDNA 2? It's better: primitive shading, a geometry engine, cache scrubbing, then the insane speeds of their SSD and an insanely fast and versatile DMAC. PS5 will be able to draw things on screen incredibly fast, especially when certain details and geometry are culled from view. It also helps that the GPU is incredibly fast thanks to its high 2.23 GHz clock, so there will be less lag and frame hitching at run-time. Everything works in tandem, a very well-oiled system.

The point is, in every generation Sony engineers have given us features which never existed on consoles before. They are the pioneers of cutting-edge tech and new features. They are using RDNA 2's RT but felt their Geometry Engine would be better suited to doing VRS, and according to rumor they are probably taking hooks from the RDNA 3 feature set, which has not even been discussed or revealed yet. They will use what they need for their console based on their vision for it, which is no bottlenecks, a well-oiled system, developed for devs, lowest time to triangle, etc. So can we really call an advanced VRS system, RT, 7nm EUV, and clocks hitting 2.23 GHz (and possibly even higher) simply RDNA 1.5? Based on these advancements it's nothing short of RDNA 2+, because it's based on RDNA 2 with some methods improved specifically for the PS5.