3liteDragon

Member

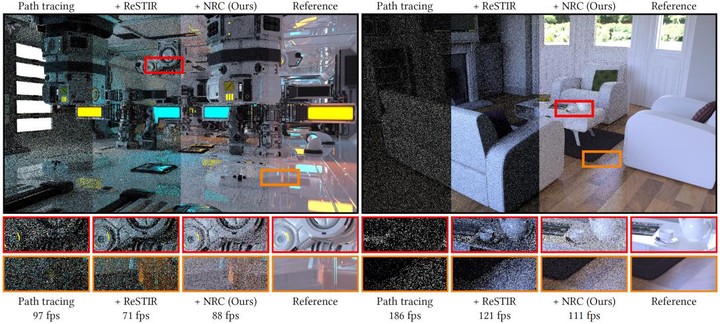

It sounds like DLSS 4 might bring neural rendering/upscaling to textures, but based on what I'm reading, it MIGHT not be exclusive to the 50 series? If anyone else has been looking into this, feel free to correct me. If this works on all RTX cards, that'll be huge. All DLSS features are a form of neural rendering, but when Jensen was asked about DLSS's future, he also talked about being able to generate textures/NPCs on the fly.

videocardz.com


research.nvidia.com


Inno3D teases "Neural Rendering" and "Advanced DLSS" for GeForce RTX 50 GPUs at CES 2025 - VideoCardz.com

NVIDIA preparing Neural Rendering revolution. One of the board partners speaks about new AI-features, spotted by HardwareLuxx. The AI revolution has not happened yet, it seems. At least, that’s what Inno3D’s CES 2025 press release seems to claim. The company talks about everything that will be...

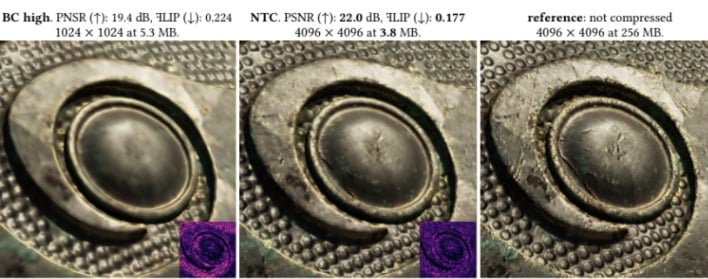

Random-Access Neural Compression of Material Textures | Research

The continuous advancement of photorealism in rendering is accompanied by a growth in texture data and, consequently, increasing storage and memory demands. To address this issue, we propose a novel neural compression technique specifically designed for material textures. We unlock two more...