AMD: FSR 2.0 will run on Xbox and these Nvidia graphics cards

- Thread starter adamsapple

Thirty7ven

Banned

Some of you must be living under a rock. A bunch of games run with temporal upscaling solutions already, the advantage here is for the developers that don't have a good solution of their own.

If what these FSR 2.0 comparisons and performance figures show is true... the news of its arrival on consoles is one of the best since its launch.

You have to be cautious until you see the real results, but it could be a great boost to the potential of the consoles to show more impressive things in the future.

A developer from The Coalition (I think) already told John Linneman from DF about the promising future of consoles, even when it comes to running full RT... and he mentioned this kind of advance in image upscaling as a means to achieve it, together with future optimizations of the consoles' RT hardware.

I am sure that FSR 2.0 will not be the last to arrive on consoles.

adamsapple

Or is it just one of Phil's balls in my throat?

Some of you must be living under a rock. A bunch of games run with temporal upscaling solutions already, the advantage here is for the developers that don't have a good solution of their own.

Not all temporal solutions are alike, this seems to be one of the better ones that doesn't require machine learning.

The GTX 1080 is only 20-25% more powerful than the GTX 1070 yet it enables them to double the suggested resolution?

AMD FidelityFX Super Resolution 2.0 GDC 2022 Announcements

Last week was a big week for our AMD FidelityFX™ Super Resolution (FSR) (1) upscaling technology – after FSR launched in 2021 and became the fastest adopted software technology in AMD history (2), we announced FidelityFX Super Resolution 2.0, the next generation of our open-source upscaling... (community.amd.com)

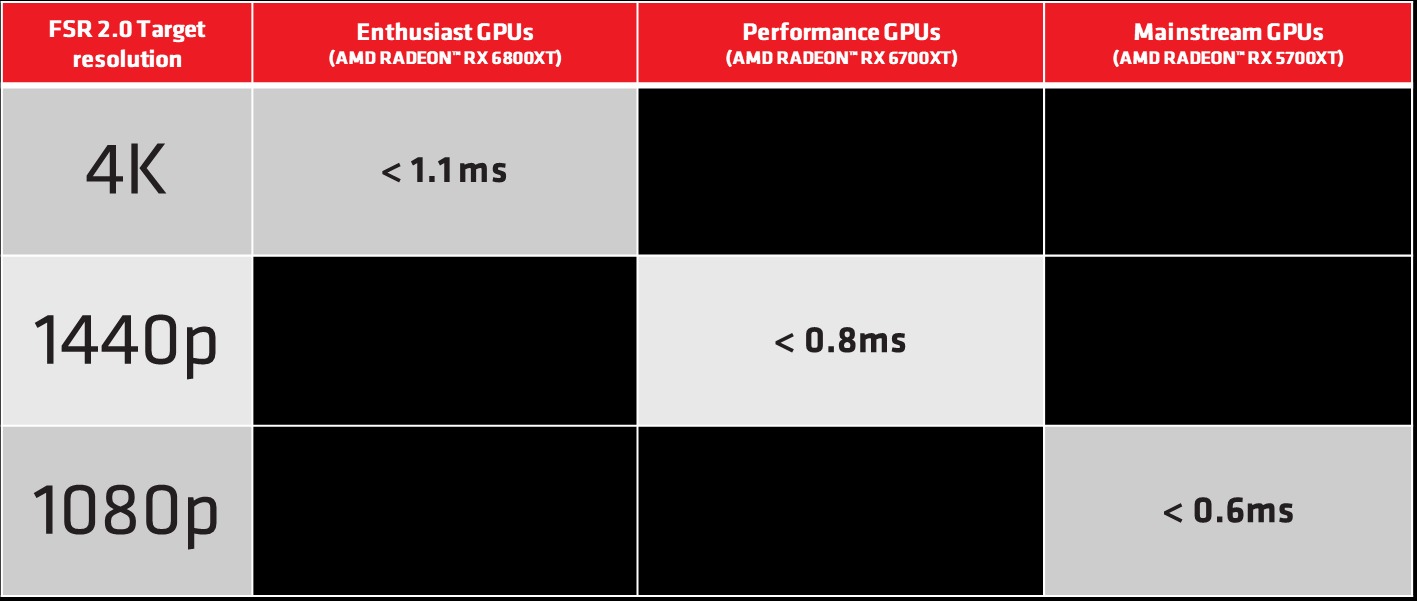

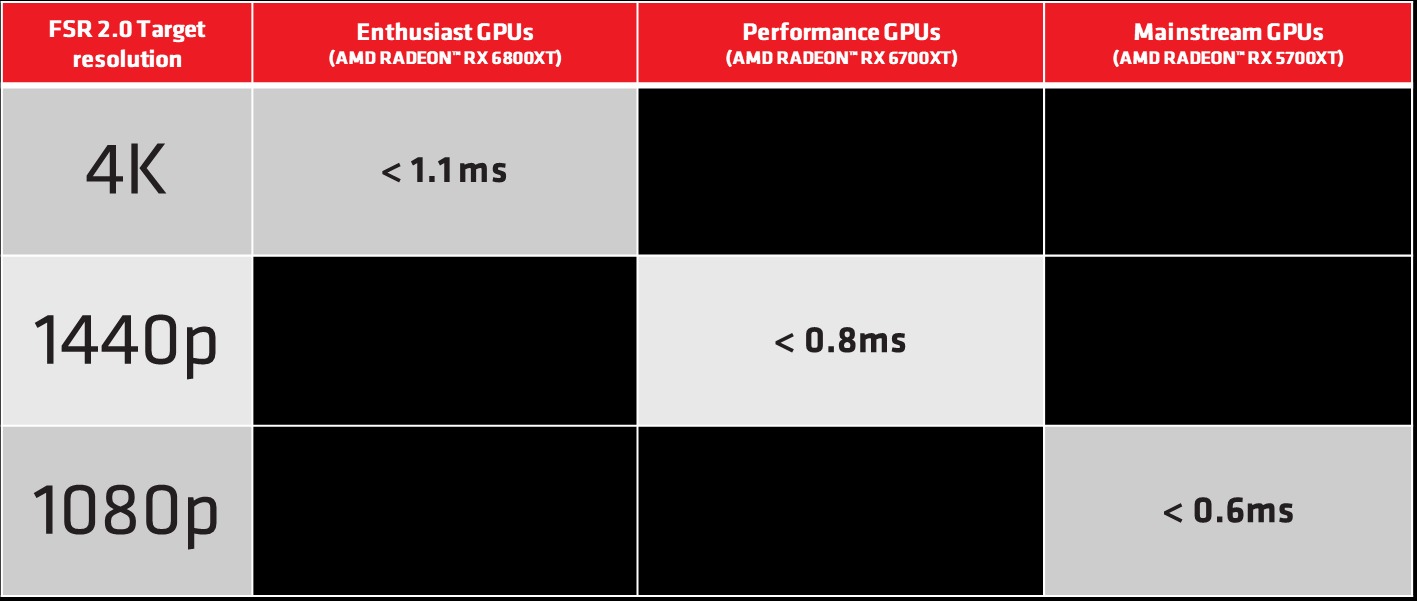

Here's a table

I wonder what enables that. More cores?

DJ12

Member

Still, it supports RDNA 1 and 2 cards, so we're good, yeah..... They're literally reiterating what AMD said directly lol. No one asked about anything, AMD announced this themselves.

The GTX 1080 is only 20-25% more powerful than the GTX 1070 yet it enables them to double the suggest resolution?

I wonder what enables that. More cores?

Target output resolution. The internal upscaled amount isn’t that significant

Bernd Lauert

Banned

Pixel counters gonna be obsolete soon

T-Cake

Member

You still on 1070 ? Jesus lol.

Time to upgrade broda. A 3060 at least will do you wonders and they are everywhere now

Fraid so, good man. I bought a prebuilt which is now hampered by too low of a wattage power supply and insufficient PCI Express lanes to make full use of the newer GPUs. I need to build a whole new system.

oldergamer

Member

Good to see that Xbox can support this. I wonder if that is due to the additional machine learning modifications MS made to the hardware?

Bernd Lauert

Banned

Good to see that Xbox can support this. I wonder if that is due to the additional machine learning modifications MS made to the hardware?

PS5 supports this too, just needs a port

DJ12

Member

Good to see that Xbox can support this. I wonder if that is due to the additional machine learning modifications MS made to the hardware?

Interesting take, considering it specifically mentions nothing like that is used.

It's currently on Xbox, as Xbox uses DirectX, which is the only API AMD has created FSR 2.0 for at this time. Not even Vulkan is supported yet.

GreatnessRD

Member

If AMD has a real solution to DLSS with FSR 2.0, and their MCM GPUs are really like that...

Nvidia might be starting to sweat.

Love to see it. Keep the comp coming.

Target output resolution. The internal upscaled amount isn’t that significant

AMD lists the native (internal render) resolutions on that page.

Performance mode 1080p has native resolution 960x540 (518,400 pixels).

Performance mode 1440p has native resolution 1280x720 (921,600 pixels).
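As a rough check on those numbers, here is a minimal Python sketch that derives the internal render resolution from the output resolution. The per-axis scale factors are assumptions inferred from figures quoted in this thread (1080p output from 960x540, 4K output from 1440p, 4K output from 720p), not values read from AMD's SDK, and the names `SCALE_FACTORS` and `render_resolution` are made up for illustration.

```python
# Per-axis scale factors implied by the figures quoted in this thread.
# These are assumptions for illustration, not values taken from AMD's SDK.
SCALE_FACTORS = {
    "quality": 1.5,            # 4K output rendered at 1440p
    "performance": 2.0,        # 1080p output rendered at 960x540
    "ultra_performance": 3.0,  # 4K output rendered at 720p
}


def render_resolution(out_w, out_h, mode):
    """Return (width, height, pixel_count) of the internal render target."""
    scale = SCALE_FACTORS[mode]
    w = round(out_w / scale)
    h = round(out_h / scale)
    return w, h, w * h


if __name__ == "__main__":
    for out_w, out_h in [(1920, 1080), (2560, 1440), (3840, 2160)]:
        print((out_w, out_h), render_resolution(out_w, out_h, "performance"))
```

Since the factor applies to each axis, Performance mode renders a quarter of the output pixels, which matches the 518,400 and 921,600 figures above.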

vpance

Member

![FSR 2.0 performance mode timing](cdn.vox-cdn.com/uploads/chorus_asset/file/23340231/fsr_performance_mode_timing.jpg)

This sounds good

In that under 1.5ms period, FSR 2.0 does all sorts of things, though — AMD says it replaces a full temporal anti-aliasing pass (getting rid of a bunch of your game’s jagged edges) by calculating motion vectors; reprojecting frames to cancel out jitter; creating “disocclusion masks” that compare one frame to the next to see what did and didn’t move so it can cancel out ghosting effects; locking thin features in place like the barely-visible edges of staircases and thin wires; keeping colors from drifting; and sharpening the whole image, among other techniques.

Bernd Lauert

Banned

There's even an ultra performance mode, where 720p becomes 4k. Wanna see how these extremes hold up.

kyliethicc

Member

As expected but good to see confirmation. As with FSR 1, this should also run on PS5 as well.

So what are the framerates (and thus the differences) for these 4 modes on a system?

Mr Moose

Member

You would think so, it's weird that AMD went out of their way to name drop Xbox but not PS5, they're both running on AMD hardware.

Unless this is a case of full RDNA 2

![FSR 2.0 supported hardware](cdn.vox-cdn.com/uploads/chorus_asset/file/23340218/fsr_hardware.jpg)

That RDNA 2 RX 590.

It's because they are showcasing it with an Xbox studios game.

![Deathloop at 4K: FSR off, FSR 1, and FSR 2 Quality mode](cdn.vox-cdn.com/uploads/chorus_asset/file/23323101/7_DEATHLOOP_AMD_FSR_OFF_FSR1_FSR2_4K_Quality_Mode_Press_Image_B.png)

Sega Orphan

Banned

From the stills it looks really impressive. However, I will wait to see it in motion before saying its the second coming of DLSS.

It's a bit of a head scratcher about why it's available on XSX/S and not PS5. AMD would have announced it on PS5 if it was.

So it either requires a bit of programming work to bring it to PS5, which hasn't been done yet, or, MS played a part in its development so it's not going to be available on PS5, or, for some unknown reason it won't be able to run on the PS5.

Mr Moose

Member

From the stills it looks really impressive. However, I will wait to see it in motion before saying its the second coming of DLSS.

It's a bit of a head scratcher about why it's available on XSX/S and not PS5. AMD would have announced it on PS5 if it was.

So it either requires a bit of programming work to bring it to PS5, which hasn't been done yet, or, MS played a part in its development so it's not going to be available on PS5, or, for some unknown reason it won't be able to run on the PS5.

Remember FSR 1.0 announced for Xbox Series and not PS5? PS5 had a game using it first.

Tripolygon

Banned

So what are the framerates (and thus the differences) for these 4 modes on a system?

That we haven't seen yet.

That RDNA 2 RX 590.

It's because they are showcasing it with an Xbox studios game.

No, it is because it was created using HLSL and Xbox supports HLSL. Vulkan support is not there yet but any developer can port it to Vulkan or PSSL if they want as it is open source.

mckmas8808

Mckmaster uses MasterCard to buy Slave drives

This looks really impressive.

You can compare native to the different FSR modes here.

FSR 2.0 - IMG 1 - Imgsli

imgsli.com

FSR 2.0 - IMG 2 - Imgsli

imgsli.com

This is VERY impressive! Does it have to be done per game like DLSS 2.0? Or is it more free? If that makes any sense.

mckmas8808

Mckmaster uses MasterCard to buy Slave drives

So Performance mode is basically 1080p -> 4K ?

kyliethicc

Member

That we haven't seen yet.

No, it is because it was created using HLSL and Xbox supports HLSL. Vulkan support is not there yet but any developer can port it to Vulkan or PSSL if they want as it is open source.

lol then its useless info so far. Only point of reducing IQ is gaining frames. Need the real info.

twilo99

Member

Good news for the Series S.

I think they designed it with this tech in mind actually...

twilo99

Member

Who needs native anymore?

Narrative:

"Between that and VRR the devs can now just sleep at their desks and its all good."

Sega Orphan

Banned

Remember FSR 1.0 announced for Xbox Series and not PS5? PS5 had a game using it first.

You have to understand that FSR isn't some crazy new technology, it's just Temporal reconstruction with some AMD algorithms and sharpening filters.

If this was only available on XSX/S and not PS5, Sony could develop their own version anyway. Insomniac have their own Temporal solution, and it may well be as good as FSR, but we haven't seen any comparisons of it against native, so we don't know. Sony is quite well known for having their own versions of things. They have their own APIs, they have their own Ray Tracing software etc. It would not surprise me in the slightest if Sony didn't jump on FSR and had their own version, which is 99.9% the same, which can be converted from FSR in a quick minute.

Maybe they intend to roll out Insomniac tech, maybe they have been working on their own solution which they think is better. There are a heap of Temporal solutions now, from FSR to UE5 to Insomniac's one, to even DLSS, which relies on Temporal and vector info to do the majority of the work.

Even if Sony didn't adopt it, they could 100% have something as good.

Tripolygon

Banned

lol then its useless info so far. Only point of reducing IQ is gaining frames. Need the real info.

This information is coming out of GDC where developers are being briefed on the technology, there are a few more months before the first game to use it is released to the general public. The point is to reduce render load to gain more FPS but still retain a similar IQ which is what they are showing here. This is not useless information. It is a given that it will lead to increased FPS, what has not been shown is how much but the technology itself costs less than 1ms to run at the highest quality level on a high-end GPU.

kyliethicc

Member

This information is coming out of GDC where developers are being briefed on the technology, there are a few more months before the first game to use it is released to the general public. The point is to reduce render load to gain more FPS but still retain a similar IQ which is what they are showing here. This is not useless information. It is a given that it will lead to increased FPS, what has not been shown is how much but the technology itself costs less than 1ms to run at the highest quality level on a high-end GPU.

If I tell you I can save you money by using these 3 coupons, but I never tell you how much you'll save, then which coupon is best?

vpance

Member

This information is coming out of GDC where developers are being briefed on the technology, there are a few more months before the first game to use it is released to the general public. The point is to reduce render load to gain more FPS but still retain a similar IQ which is what they are showing here. This is not useless information. It is a given that it will lead to increased FPS, what has not been shown is how much but the technology itself costs less than 1ms to run at the highest quality level on a high-end GPU.

According to that table above, < 1ms on a high end GPU in performance mode, not quality mode.

Would it be terrible if quality mode was 3ms or more on a 6800XT?

adamsapple

Or is it just one of Phil's balls in my throat?

If I tell you I can save you money by using these 3 coupons, but I never tell you how much you'll save, then which coupon is best?

It's still a WIP thing and the update isn't out on Deathloop yet.

Once the update is out, everyone should be able to see the gains.

They're just briefing the tech, come on man lol.

Tripolygon

Banned

According to that table above, < 1ms on a high end GPU in performance mode, not quality mode.

Would it be terrible if quality mode was 3ms or more on a 6800XT?

I misread. The cost would more than likely pay for itself otherwise it would be pointless. As far as I remember DLSS can cost up to 10ms on some hardware so 3ms wouldn’t be bad at all.

If I tell you I can save you money by using these 3 coupons, but I never tell you how much you'll save, then which coupon is best?

1. It’s on a game by game basis so if you are told it provides X% performance uplift on Y game it won’t be the same for game Z.

2. Download the sdk and implement it otherwise wait for actual games to release so you can see how much performance uplift it provides. It is one more optional feature you can use or not use.

3. Give me the fucking coupon, I’ll try all 3 for myself and find out.

SenjutsuSage

Banned

M1chl

Currently Gif and Meme Champion

I'd like to add that MS is using drivers in the Xbox OS directly from AMD, whereas Sony is using just a basic BIOS and building on top of that themselves (they use a different business model than MS, even when it comes to architecture IP licensing: AMD supplies everything for MS, but Sony licenses their IP for usage/modifying/etc).

So that's why there's the difference between "True RDNA 2" and "RDNA 2 based technology".

And it's also why AMD can state the functionality on Xbox in the marketing materials, but not on PS5, because ultimately that's not theirs to say.

Hope that clears up some confusion.

YOU PC BRO?!

Gold Member

1440p + 60fps + RT + FSR2.0 = 4K60 fps with RT

//DEVIL//

Member

Fraid so, good man. I bought a prebuilt which is now hampered by too low of a wattage power supply and insufficient PCI Express lanes to make full use of the newer GPUs. I need to build a whole new system.

Sadly, that's what happens when you leave your PC without the usual upgrades every now and then. For example, if I were to upgrade my GPU this year to a 4000 series, I might have to upgrade my power supply to a new one that has this weird new connector the new GPUs require.

Unless the partner GPUs will use the same old power

kyliethicc

Member

I misread. The cost would more than likely pay for itself otherwise it would be pointless. As far as I remember DLSS can cost up to 10ms on some hardware so 3ms wouldn’t be bad at all.

1. It’s on a game by game basis so if you are told it provides X% performance uplift on Y game it won’t be the same for game Z.

2. Download the sdk and implement it otherwise wait for actual games to release so you can see how much performance uplift it provides. It is one more optional feature you can use or not use.

3. Give me the fucking coupon, I’ll try all 3 for myself and find out.

The example was Deathloop. Yet no FPS listed. Useless example.

SenjutsuSage

Banned

Would like to add, that MS is using drivers in the Xbox OS directly from AMD, where Sony is using just basic bios and they are building on top of that themselves (they are using different business model than MS, even when it comes to architecture IP licensing, AMD supply everything for MS, but Sony is licensing their IP for usage/modifying/etc).

So that's why the difference between "True RDNA 2" and "RDNA 2 based technology".

And also why it's in the marketing materials AMD can state the functionality on Xbox, but not on PS5, because ultimately that's not their word to say it.

Hope that clear up some confusion.

This is wildly incorrect. Sony gets semi-custom parts from AMD the same exact way Microsoft does. Both are customers of AMD's semi-custom business where they can pick and choose any feature or roadmap feature and then integrate their very own IP into the mix.

And of course it doesn't mean PS5 won't support FSR 2.0. Of course it will, but what you just said is inaccurate. Microsoft is also licensing AMD tech and modifying a great deal of it. This is why Series X|S have machine learning built in and PC RDNA 2 does not. It's also why Series X GPU has custom texture filters built into the GPU for Sampler Feedback Streaming that's not present in PC RDNA 2. Not just that, Series X GPU goes beyond the RDNA 2 specs for Tier 2 VRS, as well as having larger group size support for Mesh Shaders beyond what the PC hardware has.

The spec max for Mesh Shader group size is 128. Series X can go up to 256. AMD also confirmed with RDNA 2 on their official youtube page that the group size support for Mesh Shaders is still below what Series X possesses.

ToTTenTranz

Banned

According to that table above, < 1ms on a high end GPU in performance mode, not quality mode.

Would it be terrible if quality mode was 3ms or more on a 6800XT?

Quality mode on a 6800XT takes less than 1.1ms @4K.

The FSR 2.0 overhead difference between quality modes is seemingly negligible. What changes is mostly performance and output IQ.

The example was Deathloop. Yet no FPS listed. Useless example.

All you need to do is take Deathloop's performance and add the overhead.

For example, to get FSR 2.0 Quality mode with 4K output the game will render at 1440p natively.

Then you just get a Deathloop benchmark for 1440p:

And add 1.1ms to that frame time.

For example on a RX 6800 you get 97.7 FPS, that's 1/97.7 = 10.2ms per frame.

With FSR 2.0 Quality it takes 1.1ms more, so it's 11.3ms.

1/0.0113 = 88.5 FPS for a 4K output with better than native IQ.
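The arithmetic above can be sketched in a few lines of Python. The 97.7 FPS and 1.1 ms figures are the ones quoted in the post; the function name `fps_with_overhead` is made up for illustration, and treating the upscaler as a fixed cost added to every frame is a simplifying assumption.

```python
def fps_with_overhead(base_fps, overhead_ms):
    """FPS after adding a fixed per-frame cost (in ms) to the base frame time."""
    frame_ms = 1000.0 / base_fps           # frame time at the render resolution
    return 1000.0 / (frame_ms + overhead_ms)


if __name__ == "__main__":
    # 1440p Deathloop figure from the post, plus the quoted 1.1 ms upscaler cost.
    print(round(fps_with_overhead(97.7, 1.1), 1))
```

Without rounding the intermediate frame time, this comes out at roughly 88.2 FPS, slightly under the 88.5 FPS above, which rounds 10.24 ms down to 10.2 ms first.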

oldergamer

Member

PS5 supports this too, just needs a port

And you know this how?

Quality mode on a 6800XT takes less than 1.1ms @4K.

The FSR 2.0 overhead difference between quality modes is seemingly negligible. What changes is mostly performance and output IQ.

All you need to do is take Deathloop's performance and add the overhead.

For example, to get FSR 2.0 Quality mode with 4K output the game will render at 1440p natively.

Then you just get a Deathloop benchmark for 1440p:

And add 1.1ms to that frame time.

For example on a RX 6800 you get 97.7 FPS, that's 1/97.7 = 10.2ms per frame.

With FSR 2.0 Quality it takes 1.1ms more, so it's 11.3ms.

1/0.0113 = 88.5 FPS for a 4K output with better than native IQ.

Nice framerate boost! Native 4k on 6800 is 56.8 FPS.
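To put a number on that boost, a small Python sketch comparing the two figures quoted here (56.8 FPS at native 4K and the estimated 88.5 FPS with FSR 2.0 Quality). The helper name `uplift_percent` is made up for illustration, and the 88.5 FPS value is the poster's estimate, not a measured result.

```python
def uplift_percent(native_fps, upscaled_fps):
    """Relative FPS gain of the upscaled result over native, in percent."""
    return (upscaled_fps - native_fps) / native_fps * 100.0


if __name__ == "__main__":
    # Figures quoted in the thread; the upscaled value is an estimate.
    print(round(uplift_percent(56.8, 88.5), 1))
```

On those figures the estimated gain is a little under 56%.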

ToTTenTranz

Banned

And you know this how?

It runs on Polaris and Pascal GPUs from 2016. Insomniac has been using similar tech since the PS4.

It would take a special kind of imagination to assume it can't run on the PS5.

vpance

Member

Quality mode on a 6800XT takes less than 1.1ms @4K.

The FSR 2.0 overhead difference between quality modes is seemingly negligible. What changes is mostly performance and output IQ.

Wtf? That seems very impressive. There's little reason to use anything but quality then.

ArtHands

Thinks buying more servers can fix a bad patch

This is HUGE news, these consoles are in desperate need of this kind of tech. I hope it works well on them.

Was this at some Microsoft / AMD event or something? Why wouldn't AMD just mention both Xbox and PS5? The tech supports loads of GPUs, so surely they could just mention the current consoles?

Likely because the tech was developed with active help from Microsoft, since AMD developed it mainly for PC; thus, by proxy, AMD prioritizes Xbox for consoles.