HeisenbergFX4

Member

Yeah, their 5090 prebuilts are not cheap, though I think their 5080s are decently priced.

B&H lists that as using a B850 chipset. Not horrible, but definitely not enthusiast level for a $4,600 PC. It's also PCIe Gen 4, which doesn't matter in practice but is still kind of lame for the brand-new Gen 5 5090.

> Is DLSS 4 coming to 40 series cards the same day when the 50 series launches?

It’s already out, isn’t it?

I thought there's supposed to be a driver update? I haven't seen anything yet.

Yeah, I'm waiting for the driver update instead of using that method of replacing files from CP77.

It came out with Cyberpunk's latest update. Use the latest DLSS Swapper to get it into other games that have DLSS. You could also wait until the 30th for the Nvidia app update that does this for you.

That is almost downright reasonable. They probably cheap out on the PSU, RAM and motherboard to cut some corners, though. And the SSD is likely a less expensive model without DRAM cache.

Being Cyberpower, it's going to cut some corners; just how much that translates to lost performance versus the decent price is hard to tell.

Still, that’s a good overall price.

Probably not much for the average user who just wants to buy it and use it for, say, 3-4 years.

All future NVIDIA GPUs will be bundled with https://downloadmoreram.com/ and will have MVG:

Multi VRAM Generation, 2x, 3x, 4x.

12GB of VRAM, but compressed 2x, 3x, 4x to download more VRAM with AI.

This was very interesting. Lower the power limit by 10% and you only lose a couple % of performance. Drop it 30% and you only lose like 8% performance, and it consumes less power than a 4090.

Yes, RTX 40 series undervolts differently.

In my case I saw 50-60W less power usage with a UV, and that's not a big difference to me. Also, in certain games like Metro Exodus (at 4K with RT), not even the UV reduced my GPU power usage (for some strange reason). Only a 70% power limit did it, but then my performance went from 85fps to 63fps, so I said to myself fu** that, I'm not going to limit performance on my PC that much for just 50-60W.

What's interesting is that my power bills have not even increased compared to my previous PC (GTX1080), and I was sure they would. The RTX4080S can draw up to 315W, but it's usually well under 300W. Some games draw as little as 230W even at 99% usage (GTA4). I often play older games, so my RTX4080S sometimes draws only 50-60W when running games with the same settings as my old GTX1080 OC at 220W. The RTX4080S is more power-hungry, but also much faster than my old GTX1080, so it doesn't need to use its full power budget as often.
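The power-limit numbers above can be compared as performance per watt relative to stock: the fraction of stock performance kept divided by the fraction of stock power drawn. A minimal sketch using only the figures quoted in this thread, assuming the card actually draws its full limit in each case (a limit caps draw rather than setting it, so this is an approximation):

```python
def relative_perf_per_watt(perf_fraction: float, power_fraction: float) -> float:
    """Performance per watt relative to stock (1.0 = same efficiency as stock).

    perf_fraction: performance at the reduced limit / stock performance.
    power_fraction: power drawn at the reduced limit / stock power.
    Assumption: the card draws its full power limit in both cases.
    """
    if power_fraction <= 0:
        raise ValueError("power_fraction must be positive")
    return perf_fraction / power_fraction


# 30% lower power limit, about 8% performance lost (figures quoted in the thread)
print(f"{relative_perf_per_watt(0.92, 0.70):.2f}")  # 1.31
# 70% power limit in Metro Exodus: 85 fps -> 63 fps
print(f"{relative_perf_per_watt(63 / 85, 0.70):.2f}")  # 1.06
```

By the same measure, an undervolt that keeps 99.1% of the performance at 80% of the power works out to about 1.24x stock efficiency.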

> 5070 has almost 700GB/s of memory BW. Memory BW is not the problem for this card, memory amount it...

I just got a 12GB card and I’ve got several games that saturate it at 1080p. Anyone buying a 12GB 5000 card is in for a rough go. I don’t think they will age well. 12GB is insane for a 5070.
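The point that bandwidth and capacity are separate limits follows from standard GDDR arithmetic: peak bandwidth is the bus width in bytes times the per-pin data rate, while capacity is the number of 32-bit memory chips times the chip density. A minimal sketch; the 192-bit bus and 28 Gbps data rate used for the 5070 below are assumptions not stated in the thread (they reproduce its "almost 700GB/s" figure), and clamshell layouts with two chips per 32-bit channel are ignored:

```python
def memory_config(bus_width_bits: int, gbps_per_pin: float, module_gb: int) -> tuple[float, int]:
    """Return (peak bandwidth in GB/s, capacity in GB) for a GDDR setup.

    Assumption: one memory chip per 32 bits of bus (no clamshell mode).
    """
    if bus_width_bits % 32:
        raise ValueError("bus width must be a multiple of 32 bits")
    bandwidth_gb_s = bus_width_bits / 8 * gbps_per_pin  # bytes per transfer x data rate
    modules = bus_width_bits // 32                      # one 32-bit chip per channel
    return bandwidth_gb_s, modules * module_gb


# Assumed 5070-style config: 192-bit bus, 28 Gbps GDDR7, 2GB chips
bw, cap = memory_config(192, 28, 2)
print(bw, cap)  # 672.0 12
```

Swapping in 3GB chips on the same bus would give 18GB, and a 256-bit bus with 3GB chips gives the 24GB figure mentioned for the hypothetical GDDR7 9070XT later in the thread (assuming a 256-bit bus for that card).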

You can see the strategy for Nvidia.

> I suggest playing with your settings/curve. I am at 80% power output. My card's temps dropped to the point where I generally do not hear it (fans run low due to better temps). Using benchmark apps, I average about 99.1% of the performance for 80% of the power usage. I did OC the memory a bit. I might have a better card though...

Here's my UV results.

Hence why, after owning every xx80 between the 280 and the 1080, I just bought a used 6750xt.

Smash the rendering resolution to lower frame buffer memory, then use NTC to squash the textures, compressing the VRAM footprint further. Snake oil. Pushback needs to start now.
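The first half of that argument is plain pixel arithmetic: a render target's size is width × height × bytes per pixel, so rendering internally at 1080p instead of native 4K (the internal resolution DLSS Performance mode uses for 4K output) cuts each full-resolution buffer to a quarter. A minimal sketch, assuming a single 4-byte-per-pixel (RGBA8) target; real games keep many render targets in various formats and still need the upscaled 4K output, so this only illustrates the scaling, not any game's actual VRAM use:

```python
def render_target_mib(width: int, height: int, bytes_per_pixel: int = 4) -> float:
    """Size of one render target in MiB (default 4 bytes/pixel assumes RGBA8)."""
    return width * height * bytes_per_pixel / 2**20


native_4k = render_target_mib(3840, 2160)       # 31.640625 MiB
internal_1080p = render_target_mib(1920, 1080)  # 7.91015625 MiB
print(f"{native_4k:.2f} MiB vs {internal_1080p:.2f} MiB ({native_4k / internal_1080p:.0f}x)")
# 31.64 MiB vs 7.91 MiB (4x)
```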

Cyberpunk 2077 already has DLSS 4 support. Works quite well on my 4090. Performance mode is legit good, too.

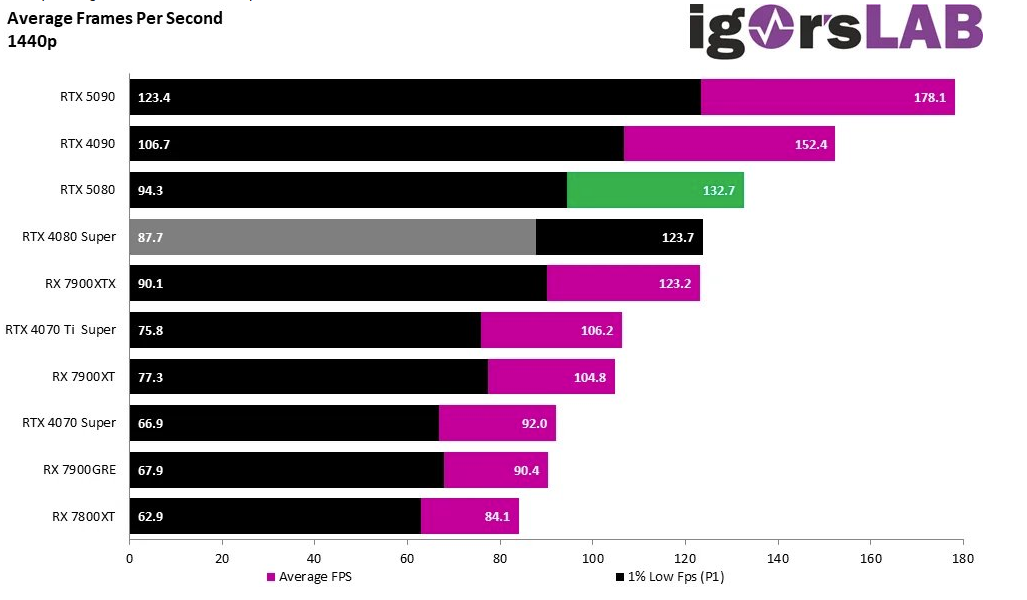

People who saw it say that it was only 7% over the 4080S at 4K.

I thought it would be bad, but this has “exceeded” my expectations.

> Here's my thing. How many games that demand a 5 series are even going to drop before the 6 series?

Depending on your target resolution and fps, every single one or none.

> This looks abysmal:

For people with a 4xxx gen card, yes. For people with 3xxx cards or lower, no.

You waited 2 more years for 8% better performance at the same price?

A lot of us don't actively wait for GPUs. If it wasn't for my monitor setup, I'd not even look at the 5xxx series now.

> Personally, I was too busy waging console war to bother with PC gaming. I've retired now, so I'm back in the game.

You did what you had to do, brother. I don't believe you have retired; it's just a break. The PS6 will eventually come out, and you must prove the green rats wrong. I will also invite you to go into the Switch 2 threads now and then to show them who the home console boss really is.

I get your point, but I disagree. The value of this card is still awful. If you have to choose between a 4080 and a 5080 now for the same price, sure, the 5080 seems marginally better. But objectively, it's a bad deal for people coming from the 3000 series, too.

I am not following that logic.

If we had a real performance bump, you'd be able to get 4090 performance for the same price. You're getting, more or less, the same as 2 years ago for more or less the same price.

Oof, let's not go down this rabbit hole. Since the crypto boom and the AI boom after it, the word "value" in regard to GPUs is something best avoided.

> It's just a shame the 7900XTX turns to dogshit when asked to do anything other than raster, as it would still make a good card at a (relatively) decent price with plenty of VRAM.

AMD could just do a GDDR7 version of the 9070XT with 24GB (3GB modules), add 12-15% performance, tie with the 5080, and sell it for around $800. The only question is whether Lisa wants to do a GDDR7 version...

Ampere's GPUs are still fast and offer at least console-like quality (much better if we take DLSS technology into account). The biggest problem with RTX 30 series cards is VRAM. The RTX3090 has plenty of VRAM, but most RTX30 GPUs are VRAM-limited in a lot of games, and that's a real problem, so upgrading even to a 4070tiSuper would make sense for a lot of RTX30 owners, let alone something like an RTX5070ti or RTX5080.

Is the 5080 a bad card? Fuck no, it still offers excellent performance. But it's still disappointing as a next-generation card if it's the same performance as a 4080, which was released in 2022, not last year. You may be thinking of the Super, which also had the same performance; it was just a price cut.

Yes, I agree the gen-to-gen improvement is not high. But it does have new technology, it's still faster than the original 4080, and it's cheaper than the original 4080.

Sure, it’s the same price as the 4080S. But that card was released last year. I am not sure how much of a gap in terms of power you are expecting when it’s been known for a year now that this gen would still use the same node as the 4000 series.

You don’t have to buy the 5000 series if you are happy with your current GPU. I am using a spare 3080ti because I sold the 4090 and want a 5090. But the truth is, if I don’t get one, guess what? My 3080ti still plays my games fine lol. Beauty of PC, man.

I hear you, and I still think it's probably the best card to buy at around $1000. I might get one since I need a second card, and I'm not buying a 5090 at that price. But I was expecting a 5080 to be at least faster than a 4090 and to have more than 16GB of VRAM. I might even get a discounted 4080 if I can find one.

We're talking about a company with 70+% margins after all, so no matter how you look at it, the consumer is always getting fucked here.

I'm pragmatically looking at a ~50% uplift from my 3080 with the 5080 for ~1k, and that's simply what I have to live with, since there is no competition and no node shrink from TSMC.

Would more be better? Ofc, but no one can offer it atm, so it's not really worth discussing.

That's bad.

I thought/hoped, for Nvidia's sake, it would at least clear the 4090, easy work.

But this is embarrassing.

I imagine a 4080Ti would trump this card, easy work.

My decision to skip the RTX 50s is looking better and better.

I've got no real upgrade path.

Well, might as well wait for the RTX60s, or the Super node shrink if they manage it.

This looks abysmal:

> Many reviewers refer to the 5090 as the 4090ti, but the RTX4090ti was rumoured to only offer a 10% performance boost compared to the RTX4090. The RTX5090 offers 35% better performance on average (TechPowerUp results), and there are games that show much higher scaling.

They call it the 4090ti because there seem to be no architectural gains: 30% bigger, 30ish% more performance (we've seen it go from 25 to 35, so I'm averaging all of that), for 25% more money.

> Was hoping for this to be an easy decision, but what are people's thoughts on upgrading from a 3080 to a 5090? Also, what would be the best processor to go with it?

Disregarding money:

In raster games, Nvidia has not been able to improve performance without increasing power consumption and cost, but perhaps upcoming games that use neural features will benefit from the new architecture.

The 4090 offered 70% better performance for a 5% price increase. It offered a significantly better cost per frame. It was also much faster and cheaper than the 3090ti.

This doesn't mean the 5090 is a bad card. It's still the most powerful card you can get today. It reminds me a lot of the 2080ti at launch (which also felt crazy expensive back then, go figure).
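The cost-per-frame comparison reduces to one division: relative cost per frame is the price ratio divided by the performance ratio. A minimal sketch using only the rounded figures posted in this thread (70% more performance for 5% more money; roughly 30% more performance for 25% more money), not measured prices or benchmarks:

```python
def relative_cost_per_frame(price_ratio: float, perf_ratio: float) -> float:
    """New card's cost per frame relative to the old card (below 1.0 = cheaper per frame)."""
    if perf_ratio <= 0:
        raise ValueError("perf_ratio must be positive")
    return price_ratio / perf_ratio


# 3090 -> 4090: +70% performance for +5% price (figures from the thread)
print(f"{relative_cost_per_frame(1.05, 1.70):.2f}")  # 0.62
# 4090 -> 5090: ~+30% performance for +25% price (figures from the thread)
print(f"{relative_cost_per_frame(1.25, 1.30):.2f}")  # 0.96
```

By these numbers the 4090 cut cost per frame by roughly 38% versus the 3090, while the 5090 cuts it by only about 4% versus the 4090.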