Starting with Ampere, Nvidia lets either an int/float pair or a float/float pair be executed concurrently. Turing was just int/float. The theoretical FLOPS figure listed is a best-case scenario where only floats are being used. In the real world, when you throw an integer down the pipeline (one that's not matrix math heading for the tensor cores), you have just cut your float performance in half. Ada is doing the same thing. It doesn't get twice as many FP16 operations in place of a single FP32; it gets double the floats in place of an integer calculation. Doom Eternal is probably the poster child for this: it was about the only thing that got close to the performance the theoretical stats implied, which is probably why it was in every Nvidia Ampere performance slide.

As for the double compute on both AMD and Nvidia, where on these specs is the 4090 getting twice as many (theoretical) FP16 (half) FLOPS per clock as FP32? Unless I'm reading it wrong, the tech specs show no gain on the Nvidia cards.
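To put rough numbers on the int/float point, here is a minimal Python sketch. It assumes a simplified model of one Ampere-style SM with 64 lanes that can only issue FP32 and 64 lanes that can issue either FP32 or INT32 each clock. The function names, the instruction mixes, and the 68 SM / 1.71 GHz inputs (RTX 3080-like) are illustrative assumptions, and real scheduling is more complicated than this.

```python
# Simplified, assumed model of one Ampere-style SM. Not vendor-documented scheduling.
FP_ONLY_LANES = 64   # lanes that can issue FP32 only
SHARED_LANES = 64    # lanes that can issue either FP32 or INT32 in a given clock


def effective_fp32_per_clock(fp_ops: float, int_ops: float) -> float:
    """FP32 operations retired per clock per SM for a given instruction mix."""
    if fp_ops <= 0:
        return 0.0
    total_lanes = FP_ONLY_LANES + SHARED_LANES
    # INT work can only use the shared lanes; FP work can use either set.
    cycles = max(int_ops / SHARED_LANES, (fp_ops + int_ops) / total_lanes)
    return fp_ops / cycles


def peak_tflops(sm_count: int, boost_ghz: float) -> float:
    """Spec-sheet style peak: every lane does one FMA (2 FLOPs) every clock."""
    lanes = FP_ONLY_LANES + SHARED_LANES
    return sm_count * lanes * 2 * boost_ghz / 1000.0


if __name__ == "__main__":
    # Hypothetical instruction mixes: pure float, some integer, equal parts.
    for fp, integer in [(100, 0), (100, 36), (100, 100)]:
        rate = effective_fp32_per_clock(fp, integer)
        print(f"{fp} FP : {integer} INT -> {rate:.1f} FP32 ops/clock/SM")
    print(f"peak: {peak_tflops(68, 1.71):.2f} TFLOPS")
```

Under this model a pure-float workload reaches the full 128 FP32 operations per clock per SM, while an even int/float mix drops to 64, which is the "cut in half" case described in the post.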


PS5 Pro devkits arrive at third-party studios, Sony expects Pro specs to leak

- Thread starter Bernoulli


Mr.Phoenix

Member

Technically, yes: the OG PS5 drew 220-230W, while the revised 6nm PS5 and the PS5 refresh both drew 208W. It's possible that they can make a 250W system and cool it as you suggested, given that the OG cooling solution was found to be overkill and would likely have been enough to cool a 250W system.

Fat PS5 consumes 230W. With what they learned with their cooling solution on the Slim, I think Sony could make a 250W Pro model and cool it in the same size as the launch model.

I still don't see why they wouldn't use 4nm in 2024. Maybe 5nm will be used on the devkits and 4nm on the consumer model.

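As for the 4nm thing, the only answer to that would be cost. 5nm was on the market and Sony still chose to use 6nm (which in truth is just a very mature 7nm node), and I feel the same would happen with 4nm. The thing is that 4nm is not a real node, just as SmokSmog pointed out. 5nm, however, is. And it would be more expensive to use 4nm than 5nm, especially when you are trying to make a chip whose size is over 300mm2.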

I could be wrong though, if the cost-to-die-size equation ends up working in favor of using the more expensive process. But if that were the case, why didn't they use 5nm with the PS5slim? They have been on the 6nm used in the PS5slim since mid 2022. That should give you an idea of how eager Sony is to jump on new nodes.

Then that would put us at a 250W PS5pro... at best.

Didn't TH allude to the Pro being the same size as the vanilla PS5? That might give us some insight into TDP and such.

Gaiff

SBI’s Resident Gaslighter

Try to follow the discussion, ronbot.

6800 XT (72 CU RDNA 2) is not RDNA 3. The RX 7900 XT has 84 RDNA 3 CUs, which makes it the closest 1:1 replacement for the RX 6900 XT's 80 CU RDNA 2 configuration.

You never will because this guy seems to be disconnected from reality. It's just word salad after word salad with him and he never actually addresses your points. He just rambles and links a bunch of pie charts and you're just scratching your head trying to understand what he's trying to tell you. He was banned from the Gamespot forums or run out of them for being a console warrior always downplaying the Playstation and propping up Xbox. He does the same on PC but there, he shills for AMD and downplays NVIDIA. He's so transparent with it too.

Maybe it's that I am not understanding you. Or maybe it's that I just don't agree with you. But I am not getting the point you are making.

Most definitely not what happened. Hell, we even heard the opposite at the time. It was said that the PS4 Pro was a panicked reaction to the Xbox One X and that's why it was half-assed but how could Sony just shit out a new console in a year? Project Scorpio was known to the public before the PS4 Pro. The truth is, neither one was to counter the other. They just had similar (and not very creative) ideas at around the same time.

Well, it looks as if Sony might have learned a lesson or two with the PS4 Pro, because it was the Minecraft studio that spilled the beans before and allowed Microsoft to counter with the One X.


BlackTron

Member

Try to follow the discussion, ronbot.

You never will because this guy seems to be disconnected from reality. It's just word salad after word salad with him and he never actually addresses your points. He just rambles and links a bunch of pie charts and you're just scratching your head trying to understand what he's trying to tell you. He was banned from the Gamespot forums or run out of them for being a console warrior always downplaying the Playstation and propping up Xbox. He does the same on PC but there, he shills for AMD and downplays NVIDIA. He's so transparent with it too.

Most definitely not what happened. Hell, we even heard the opposite at the time. It was said that the PS4 Pro was a panicked reaction to the Xbox One X and that's why it was half-assed but how could Sony just shit out a new console in a year? Project Scorpio was known to the public before the PS4 Pro. The truth is, neither one was to counter the other. They just had similar (and not very creative) ideas at around the same time.

Honestly, who knows. Tight and kinda unlikely, but then again it's not really a new console. It was just a triple decker PS4, like they added a layer to a sandwich.

The gpu was really held back bad by that cpu. Because it wasn't really a new console it was a PS4 with a new graphics card.

I'm not saying it definitely was a panicked reaction just that it's plausible. If they did have the same idea at the same time MS did a much better job. Even though Sony turned around and beat them in next gen engineering on the same timetable as each other.

Mr.Phoenix

Member

Can you really say MS did a much better job? They basically did the same thing that Sony did: strap a bigger GPU to a whack CPU. The only difference is that, coming from the XB1, MS had more ground to cover. They had to do something about their RAM as they couldn't use DDR again.

Honestly, who knows. Tight and kinda unlikely, but then again it's not really a new console. It was just a triple decker PS4, like they added a layer to a sandwich.

The gpu was really held back bad by that cpu. Because it wasn't really a new console it was a PS4 with a new graphics card.

I'm not saying it definitely was a panicked reaction just that it's plausible. If they did have the same idea at the same time MS did a much better job. Even though Sony turned around and beat them in next gen engineering on the same timetable as each other.

It says a lot when the jump from the XB1 to the XB1X (roughly 5x) within the same gen is bigger than the jump from the XB1X to their next-gen XSX (2x). That speaks more to the low floor of the XB1 that they were starting from than anything else. (Yeah yeah, I know architectural improvements matter and all that, but you get my point.)
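For anyone who wants to check the multipliers, a quick Python sketch follows. The TFLOPS values are the commonly quoted peak FP32 figures for each console and are assumed inputs here, not something stated in the post; the helper name is made up for illustration.

```python
# Commonly quoted peak FP32 figures in TFLOPS (assumed inputs for illustration).
PEAK_TFLOPS = {
    "Xbox One": 1.31,
    "Xbox One X": 6.0,
    "Xbox Series X": 12.15,
}


def generational_ratio(newer: str, older: str) -> float:
    """How many times more peak FP32 throughput the newer console has."""
    return PEAK_TFLOPS[newer] / PEAK_TFLOPS[older]


if __name__ == "__main__":
    mid_gen = generational_ratio("Xbox One X", "Xbox One")
    next_gen = generational_ratio("Xbox Series X", "Xbox One X")
    print(f"XB1 -> XB1X: {mid_gen:.2f}x")
    print(f"XB1X -> XSX: {next_gen:.2f}x")
```

On these figures the mid-gen jump comes out a bit under 5x and the next-gen jump at about 2x, so the point stands on paper specs alone, before any architectural differences are considered.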

This makes so much sense now. I was honestly beginning to question my sanity.

You never will because this guy seems to be disconnected from reality. It's just word salad after word salad with him and he never actually addresses your points. He just rambles and links a bunch of pie charts and you're just scratching your head trying to understand what he's trying to tell you. He was banned from the Gamespot forums or run out of them for being a console warrior always downplaying the Playstation and propping up Xbox. He does the same on PC but there, he shills for AMD and downplays NVIDIA. He's so transparent with it too.

Gaiff

SBI’s Resident Gaslighter

Yep, they even nicknamed him ronbot back on Gamespot. Like, it's clear he understands computer hardware engineering, it's just that no one knows what the fuck he's trying to communicate. He's like ChatGPT without the novelty charm or social skills.

This makes so much sense now. I was honestly beginning to question my sanity.


Crispy Gamer

Member

The leaked emails last year showed Jim Ryan complaining about the PS4 Pro leaks in, I think, 2015. Bro, you are the first person to ever say Sony copied Microsoft with the One X.

Most definitely not what happened. Hell, we even heard the opposite at the time. It was said that the PS4 Pro was a panicked reaction to the Xbox One X and that's why it was half-assed but how could Sony just shit out a new console in a year? Project Scorpio was known to the public before the PS4 Pro. The truth is, neither one was to counter the other. They just had similar (and not very creative) ideas at around the same time.

asdasdasdbb

Member

PS4 Pro was (IMO) originally intended to help out the PSVR.

They did increase the CPU clock speed by 40%, which is something you can't say about the PS5 Pro.


Clear

CliffyB's Cock Holster

PS4 Pro was (IMO) originally intended to help out the PSVR.

They did increase the CPU clock speed by 40%, which is something you can't say about the PS5 Pro.

Well it kinda depends on the power sharing on the PS5pro's APU.

If load reduces on the GPU components then the CPU side gets more power headroom and thus performance increases.

asdasdasdbb

Member

Well it kinda depends on the power sharing on the PS5pro's APU.

If load reduces on the GPU components then the CPU side gets more power headroom and thus performance increases.

Sony has claimed (anyway) that the base PS5 should not drop its clocks much or at all during normal gameplay. That may not end up being the case on the Pro... perhaps that's where the 2.0 GHz typical GPU clock talk comes from.

S0ULZB0URNE

Member

Up to a couple of percent, which isn't much.

Sony has claimed (anyway) that the base PS5 should not drop its clocks much or at all during normal gameplay. That may not end up being the case on the Pro... perhaps that's where the 2.0 GHz typical GPU clock talk comes from.


DJ12

Member

Well, the lack of a PS5 version of Minecraft probably reduces the chances of Mojang getting a PS5 Pro before anyone else.

Well, it looks as if Sony might have learned a lesson or two with the PS4 Pro, because it was the Minecraft studio that spilled the beans before and allowed Microsoft to counter with the One X. I wouldn't be surprised if Sony only sent kits to a handful of studios to control leaks. I don't expect a ton of Pro patches this time around, because for the most part the games are ready to scale to stronger hardware. GDC could be the earliest we hear anything about the Pro, and by then Sony would've probably shipped dev kits to everyone. I don't for a second believe that the Pro doesn't exist; I just think they learned from the last rollout.

JerryinSoCal

Member

Pro leaks happened way before Scorpio was announced, and MS pushed the announcement out sooner. This isn't news, and nobody thought PS4 Pro was a rushed response to the One X.

Try to follow the discussion, ronbot.

You never will because this guy seems to be disconnected from reality. It's just word salad after word salad with him and he never actually addresses your points. He just rambles and links a bunch of pie charts and you're just scratching your head trying to understand what he's trying to tell you. He was banned from the Gamespot forums or run out of them for being a console warrior always downplaying the Playstation and propping up Xbox. He does the same on PC but there, he shills for AMD and downplays NVIDIA. He's so transparent with it too.

Most definitely not what happened. Hell, we even heard the opposite at the time. It was said that the PS4 Pro was a panicked reaction to the Xbox One X and that's why it was half-assed but how could Sony just shit out a new console in a year? Project Scorpio was known to the public before the PS4 Pro. The truth is, neither one was to counter the other. They just had similar (and not very creative) ideas at around the same time.

playsaves3

Banned

Oh, really?

97 average vs 84 in favor of the 3080 at 1440p. 52 vs 47 average in favor of the 3080 at 4K. The 3080 wins by 10 to 15% over the 6800. We've been through this already. Stop lying and claiming falsehoods. The absolute best the PS5's GPU can do is getting within 20-25% of the 3080, not 2%, let alone outperform it. And AMD does run better than NVIDIA in this game, which is why the 6800 XT beats the 3080, y'know, the actual card it's supposed to rival, not the lower tier 6800.
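The percentages quoted above can be checked directly from the frame rates in the post. A small Python sketch, with a hypothetical helper name:

```python
def percent_lead(faster_fps: float, slower_fps: float) -> float:
    """Percentage by which the faster card leads the slower one."""
    return (faster_fps / slower_fps - 1.0) * 100.0


if __name__ == "__main__":
    # Average frame rates as quoted in the post: 3080 vs 6800.
    print(f"1440p: {percent_lead(97, 84):.1f}% lead for the 3080")
    print(f"4K:    {percent_lead(52, 47):.1f}% lead for the 3080")
```

The two results land at roughly 15% and 11%, which matches the "10 to 15%" range given in the post.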

Right? Like, how the fuck is it complicated to understand? The PS5 Pro will very likely be in the ballpark of a 4070/3080/7800 XT in rasterization performance in general. The 4080 is still about 50% faster than those guys. Assuming a very well-optimized title on the Pro that doesn't run all that well on PC, then the Pro could reach 4070 Ti level of performance, which is generally around 25% faster than its class of GPU.

The PS5 Pro would need to be as fast as the 4070 Ti out of the gate and then hope for another 25% boost in optimized games to get on the level of a 4080. What are the odds? And that's in raster alone.
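The scaling argument is just compounding percentages. A minimal Python sketch, treating the 4070/3080/7800 XT class as a baseline of 1.0 and taking the 25% and 50% figures from the post as given (the names below are made up for illustration):

```python
from math import prod

BASELINE = 1.00                 # 4070 / 3080 / 7800 XT class, per the post
RTX_4070_TI = BASELINE * 1.25   # "around 25% faster", per the post
RTX_4080 = BASELINE * 1.50      # "about 50% faster", per the post


def compound(*gains: float) -> float:
    """Combine successive fractional gains, e.g. 0.25 then 0.25."""
    return prod(1.0 + g for g in gains)


if __name__ == "__main__":
    # Starting at baseline, one 25% boost only reaches 4070 Ti territory.
    print(f"one 25% boost:  {compound(0.25):.4f}")
    # Two stacked 25% boosts are needed to clear the 4080's 1.5x.
    print(f"two 25% boosts: {compound(0.25, 0.25):.4f}")
    print(f"4080 target:    {RTX_4080:.4f}")
```

One 25% gain over the baseline stops at 1.25x, and it takes a second 25% gain on top of that to get past 1.5x, which is the double requirement the post describes.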

How is it so hard for this kid to understand simple maths and scaling? Jesus Christ.

Why are you linking Cyberpunk and other games when we are discussing The Last of Us?

Gaiff

SBI’s Resident Gaslighter

It's the first benchmark in the video and I even summarized the results for you. The stupidity of your posts is seriously tiring.

Why are you linking Cyberpunk and other games when we are discussing The Last of Us?

PaintTinJr

Member

Okay, but most things in compute/shaders are either floating point calculations or can be manipulated to use floats instead, and 3D accelerators back in the late 90s were, by their very genesis, built to provide commodity floating point acceleration beyond a maths co-processor (now AVX). So unless a game was specifically engineered to lean more towards INT, and failed to do the optimisation of using an FP16 or FP32 instead of an INT on AMD hardware, I fail to see how that would be discussed in the same way as the huge benefits of Rapid Packed Math (half floats doubling theoretical throughput).

Starting with Ampere, Nvidia lets either an int/float pair or a float/float pair be executed concurrently. Turing was just int/float. The theoretical FLOPS figure listed is a best-case scenario where only floats are being used. In the real world, when you throw an integer down the pipeline (one that's not matrix math heading for the tensor cores), you have just cut your float performance in half. Ada is doing the same thing. It doesn't get twice as many FP16 operations in place of a single FP32; it gets double the floats in place of an integer calculation. Doom Eternal is probably the poster child for this: it was about the only thing that got close to the performance the theoretical stats implied, which is probably why it was in every Nvidia Ampere performance slide.

RPM FP16, when used, is a big gain for utilisation efficiency of AMD GPUs in modern HDR rasterization pipelines, and even more so in deep and complex shaders or compute algorithms like RT and AI (where regression equation solving and inference from polynomial expansions are done) that use floats for virtually every calculation and tend to use them in matrix or vector calculations, meaning they are doing multiple floats per clock to be able to get that gain. Unless the INT calculations are being done in the ASICs (RT unit) in parallel, I'm failing to see how this is more than a tiny gain when software is optimised for both Nvidia and AMD hardware. Or am I missing another piece of the puzzle in my understanding?


Physiognomonics

Member

Doesn't work like that. What's expensive (hundreds of millions?) is designing an APU on either the 7nm or the 5nm compatible family. Then using 6nm or, respectively, 4nm is super cheap compared to that, as the initial costly design has already been done. Sony used 6nm as soon as it was available to them. They have no reason to make millions of PS5 Pros on 5nm when they have 4nm (for a similar price) available to them.

Technically, yes: the OG PS5 drew 220-230W, while the revised 6nm PS5 and the PS5 refresh both drew 208W. It's possible that they can make a 250W system and cool it as you suggested, given that the OG cooling solution was found to be overkill and would likely have been enough to cool a 250W system.

As for the 4nm thing, the only answer to that would be cost. 5nm was on the market and Sony still chose to use 6nm (which in truth is just a very mature 7nm node), and I feel the same would happen with 4nm. The thing is that 4nm is not a real node, just as SmokSmog pointed out. 5nm, however, is. And it would be more expensive to use 4nm than 5nm, especially when you are trying to make a chip whose size is over 300mm2.

I could be wrong though, if the cost-to-die-size equation ends up working in favor of using the more expensive process. But if that were the case, why didn't they use 5nm with the PS5slim? They have been on the 6nm used in the PS5slim since mid 2022. That should give you an idea of how eager Sony is to jump on new nodes.

then that would put us at a 250W PS5pro... at best.


Mr.Phoenix

Member

First off, you are making it sound like 4nm and 5nm cost the same.

Doesn't work like that. What's expensive (hundreds of millions?) is designing an APU on either the 7nm or the 5nm compatible family. Then using 6nm or, respectively, 4nm is super cheap compared to that, as the initial costly design has already been done. Sony used 6nm as soon as it was available to them. They have no reason to make millions of PS5 Pros on 5nm when they have 4nm (for a similar price) available to them.

Second, this whole hundreds of millions to design chip thing is just not true. This isn't like them making a cell processor. The R&D has already been done by AMD for the most part and what sony/MS are doing is picking from already existing technology. Sony is like a customer in a restaurant picking stuff off the menu. Sony is buying each chip from AMD. Sony is not paying AMD $100M to "design" a chip then paying them for each chip sold on top of that. This Sony spending hundreds of millions on designing chips is just nonsense.

E.g. in 2022, Sony was AMD's biggest driver of revenue, accounting for 16% of AMD revenue due to sales of PlayStations. And yet people like you think that Sony shelled out some hundreds of millions to design an APU lol.

AMD sends Sony/MS some white paper of what they have and what they have coming and expected timelines, sony literally just picks features it wants. AMD tells them how much it would cost for each chip, and that's it.

playsaves3

Banned

You don't see anything wrong in the benchmark you posted, yet you are calling others stupid? The one who expects insane RT performance???

It's the first benchmark in the video and I even summarized the results for you. The stupidity of your posts is seriously tiring.

adamsapple

Or is it just one of Phil's balls in my throat?

Tassi

www.forbes.com


A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.


MasterCornholio

Member

Tassi

A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.

www.forbes.com

That's pretty sad.

HeisenbergFX4

Gold Member

Marching orders coming down the pipeline

Tassi

A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.

www.forbes.com

Just wait, the leaked price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

But the Pro would be a whole system and not one component of a PC, and relative to the base PS5, the Pro GPU has newer tech. Also, the Pro being a side-grade is only $100 more expensive than the base model, if that really is the price of the Pro.

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

TheRedRiders

Member

I think the Pro and PC price and value comparisons will die down fairly quickly.

rofif

Can’t Git Gud

600? I would've guessed higher, since they are going to milk suckers this time.

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

Crispy Gamer

Member

"I genuinely think that GTA 6 may end up being the most relevant upgrade factor here. It’s destined to be one of the biggest games of all time, barring some serious ball-dropping by Rockstar, and it’s easy to imagine many players, myself included, will want the best hardware to run the once in a generation game for the first time. And if Xbox does not get an upgrade, and the PC version isn’t out for at least a year, that will be the PS5 Pro. Though GTA is not out until some time in 2025, or beyond, if delayed."

Talk about mixed messages. You'd think an article calling a PS5 Pro "extra" unnecessary wouldn't find ways to justify one. It's a pretty weak article. It's really strange to see so-called games journalists/gamers not excited to see new hardware; it's not a damn new generation. I don't know what it is about Sony that gets people so riled up when they release new hardware. The discourse is just a waste of time, because these moves usually prove to be popular with the general public.


Imtjnotu

Member

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

We ready. Drop the leaks. Drop a pre-order. We ain't broke in this bitch

MikeM

Member

Day 1. Let me preorder now Sony!!!

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

Man, not sure what it is, but I'm always more pumped for new console hardware than PC hardware.

PaintTinJr

Member

As much as PlayStation can charge that and more for a Pro with just DLSS/frame gen, I don't think they would, even if they prime the rumour channels with that pricing. The only way I see them charging that amount is if it is to set a new base price for launching the PS6, with the Pro being a free trial to see how a $599 price lands with key PlayStation consumers (early adopters).

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

The main reason why they won't do that IMO, is that it would give Xbox enough margin to do a X Pro at higher spec - while eating $300 or less in subsidizing the hardware - with a 1year delay, whereas at $499 for a Pro the only way Xbox could match up would be with a 2year delay and eating $300 or less in subsidizing, and I don't think a highly profitable PS5 Pro against the backdrop of a successful PS5 is necessary, and they'd rather sell nearer cost to show a market washout against Xbox; especially given the publishers they've bought. As it is cheaper for PlayStation to force Xbox into multiplatform publishing alongside a very weak Xbox console presence going forward IMO.


Quantum253

Gold Member

With the fluctuation in GPU pricing, and with a highly customized chipset made specifically for a platform all systems will have, could you get an equivalent GPU on the marketplace for the same price? And that doesn't include the rest of the necessary components. It seems that the mid-range GPUs are hitting the $300-700 range already.

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

SonGoku

Member

That's different though. 6nm was a "free" upgrade from 7nm, since porting 7nm designs was straightforward and no additional investment was required to port a 7nm SoC design to 6nm. Cost-wise a SoC on 6nm was equal or cheaper; by the time Sony switched to it, yields were probably good enough to produce cheaper SoCs compared to 7nm.

As for the 4nm thing, the only answer to that would be cost. 5nm was on the market and Sony still chose to use 6nm (which in truth is just a very mature 7nm node), and I feel the same would happen with 4nm.

For 5nm they need to invest in porting the PS5 SoC to it plus the added wafer costs of 5nm itself probably didn't make financial sense to do so

Just to make sure, by "4nm" you mean N4 and N4P, which are just 5nm with efficiency, performance and slight density improvements?

First off, you are making it sound like 4nm and 5nm cost the same.

If so, cost shouldn't be much different: they are still 5nm and the design cost is similar. By 2024 yields should have matured to the point that it's a no-brainer, considering its modest improvements in performance (11%), efficiency (22%) and density (6%).
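To get a feel for what a 6% density gain buys, here is a rough Python sketch. It assumes the whole die shrinks by the full 6% (optimistic, since SRAM and analog blocks usually scale less), uses the 300mm2 figure mentioned earlier in the thread as a starting die size, and applies the widely used dies-per-wafer approximation on a 300mm wafer. The function names are hypothetical, and yield and wafer price are left out entirely.

```python
import math

WAFER_DIAMETER_MM = 300.0  # standard wafer size, assumed here


def scaled_area(area_mm2: float, density_gain: float) -> float:
    """Die area after a density gain, assuming every block scales equally."""
    return area_mm2 / (1.0 + density_gain)


def dies_per_wafer(die_area_mm2: float,
                   wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Common approximation: gross dies minus an edge-loss term."""
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)


if __name__ == "__main__":
    n5_area = 300.0                       # mm2, figure taken from the thread
    n4_area = scaled_area(n5_area, 0.06)  # 6% density gain quoted in the post
    print(f"N5-class die: {n5_area:.0f} mm2, {dies_per_wafer(n5_area)} candidates/wafer")
    print(f"N4-class die: {n4_area:.0f} mm2, {dies_per_wafer(n4_area)} candidates/wafer")
```

Even under these generous assumptions the shrink only yields a handful of extra candidate dies per wafer, so whether N4 beats N5 on cost comes down mostly to the per-wafer price difference, which nobody in the thread has hard numbers for.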

3nm, on the other hand, seems very unlikely for 2024, even though TSMC are targeting 80% production capacity by late 2024. I would be pleasantly surprised if they do it, but I'd rather not get my hopes up.

While it's true that R&D is mostly* done by AMD, the cost of said R&D is passed on in the cost of the SoCs AMD sells to Sony, so if the R&D is higher, the cost of the SoC would be higher for Sony. AMD doesn't sell its chips at wafer cost; they have to recoup R&D investments and make some profit as well.

Second, this whole hundreds of millions to design chip thing is just not true. This isn't like them making a cell processor. The R&D has already been done by AMD for the most part and what sony/MS are doing is picking from already existing technology.

*We know from previous Cerny interviews for PS4, PS4Pro and PS5 that Sony works with AMD in the design of the custom SoC to add features and remove some that aren't needed and some of those features make it to AMD off the shelf products and some don't

That being said, considering RDNA 4 is rumored to be used only for midrange, I suspect the RDNA 4 card we see on the market will be a by-product of their work with Sony for the PS5 Pro, much like the RX 480 and the RX 6700 (non-XT). If RDNA 4 releases next year, I assume we'll see a similar situation, with the Pro using a cut-down version (i.e. 60 CUs vs 64) of the off-the-shelf card.

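That's why I find the RDNA 3.5 rumors odd, unless there's no RDNA 4 next year and AMD just launches an improved (RDNA 3.5) mid-range card based on the work (RT HW) they did with Sony and with the RDNA 3 bugs fixed.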


GermanZepp

Member

Oohh

That's different though. 6nm was a "free" upgrade from 7nm, since porting 7nm designs was straightforward and no additional investment was required to port a 7nm SoC design to 6nm. Cost-wise a SoC on 6nm was equal or cheaper; by the time Sony switched to it, yields were probably good enough to produce cheaper SoCs compared to 7nm.

For 5nm they need to invest in porting the PS5 SoC to it plus the added wafer costs of 5nm itself probably didn't make financial sense to do so

Just to make sure by "4nm" you mean N4 and N4P which are just 5nm with efficiency, performance and slight density improvement?

If so cost shouldn't be much different, they are 5nm still and the design cost similar, by 2024 yields should mature to the point its a no brainer considering its modest improvements in performance (11%), efficiency (22%) and slight density (6%) increase.

3nm on the other hand seems very unlikely for 2024 even though TSMC are targeting 80% production capacity late 2024 would be pleasantly surprised if they do but rather not get hopes up

While true R&D is mostly* done by AMD the cost of said R&D is passed on to the cost of SoCs AMD sells to Sony, so if the R&D is higher the cost of the SoC would be higher for Sony. AMD doesn't sell its chips at wafer costs they have to recoup R&D investments and make some profits as well.

*We know from previous Cerny interviews for PS4, PS4Pro and PS5 that Sony works with AMD in the design of the custom SoC to add features and remove some that aren't needed and some of those features make it to AMD off the shelf products and some don't

That being said considering RDN4 is rumored to be used only for midrange i suspect the RDNA4 card we see on the market will be a by product of their work with Sony for PS5 Pro much like the RX 480 and the RX 6700 (non XT). IF RDNA 4 releases next year I assume we'll see a similar situation with Pro using a cut down version (i.e 60CUs vs 64) of the off the shelf card.

That why I find the RDNA 3.5 rumors odd unless there's no RDNA 4 next year and AMD just launches a improved (RDNA 3.5) mid range card based on the work (RT HW) they did with Sony and with the RDNA3 bugs fixed

Fun times.

SonGoku

Member

If they do this ($599), I would hope the PS5 Pro matches the RTX 4070 in raster and RT performance while also upgrading the CPU to Zen 4c or something like that. Though I fear it will be Zen 2, judging by Cerny's PS4 Pro comments about not upgrading the CPU unless it's a new gen, unless that was just a cop-out because there weren't any good CPU options from AMD at the time.

Marching orders coming down the pipeline

Just wait the leak price of the Pro is going to be $599 (which I tend to believe and have said all along) and people are going to say something like "If you are willing to spend that just buy a budget gaming PC"

Certainly do.

Oohh SonGoku, how's it going, che? *mate slurping noise* Remember when you thought the PS5 would have 32GB of RAM?

Fun times.

Are you from the Southern Cone btw?


Mr.Phoenix

Member

I can honestly see them price it at $599, even if just as a test. I am in the $499/$549 camp, but it wouldn't surprise me one bit if it's at $599, more so given that I am expecting it to have a BOM ranging from $450-$550. If, however, we see a discontinuation of the $500 Spiderman 2 and COD bundles (as I am expecting), no replacement for the $499 SKU, and in turn a subsequent drop in price of the $449 SKU to $399 before June, then no: the PS5pro comes in at $499. It's just not going to be anything more than $100 more than the base SKU.

As much as PlayStation can charge that and more for a Pro with just DLSS/frame gen, I don't think they would, even if they prime the rumour channels with that pricing. The only way I see them charging that amount is if it is to set a new base price for launching the PS6, with the Pro being a free trial to see how a $599 price lands with key PlayStation consumers (early adopters).

The main reason why they won't do that IMO, is that it would give Xbox enough margin to do a X Pro at higher spec - while eating $300 or less in subsidizing the hardware - with a 1year delay, whereas at $499 for a Pro the only way Xbox could match up would be with a 2year delay and eating $300 or less in subsidizing, and I don't think a highly profitable PS5 Pro against the backdrop of a successful PS5 is necessary, and they'd rather sell nearer cost to show a market washout against Xbox; especially given the publishers they've bought. As it is cheaper for PlayStation to force Xbox into multiplatform publishing alongside a very weak Xbox console presence going forward IMO.

Oh, I strongly doubt at this point Sony cares whatever MS is doing or going to do. And in truth, MS is so far behind, and would ultimately still be sharing the entirety of its third-party library with Sony, that Sony wouldn't even have to care.

Fair point, being that 4nm is just a more "mature" 5nm node, if the price is about the same then obviously, Sony uses that. My argument however is based on it being more expensive; if it costs less to go with 5nm, then that's what they do.

That's different though, 6nm was a "free" upgrade from 7nm since the porting of 7nm designs was straightforward, with no additional investments required to port a 7nm SoC design to 6nm. Cost-wise a SoC on 6nm was equal or cheaper; by the time Sony switched to it, yields were probably good enough to produce cheaper SoCs compared to 7nm.

For 5nm they need to invest in porting the PS5 SoC to it, and with the added wafer costs of 5nm itself it probably didn't make financial sense to do so.

Still feeds into what I am saying. Sony is buying the chip from AMD. That's it. Obviously, the more complex that chip is, the more expensive it would be for Sony, but at the end of the day, it's still them just buying chips from AMD. It's not them paying 100s of millions in upfront costs to develop anything.

While it's true R&D is mostly* done by AMD, the cost of said R&D is passed on to the cost of SoCs AMD sells to Sony, so if the R&D is higher the cost of the SoC would be higher for Sony. AMD doesn't sell its chips at wafer cost; they have to recoup R&D investments and make some profit as well.

While this is true, I feel it is one of those things that is PR'ed to hell and back. Both Sony and MS always talk up how they worked closely with AMD and made some custom shit. It's true, but not in the way that it's made out to be.

*We know from previous Cerny interviews for PS4, PS4 Pro and PS5 that Sony works with AMD in the design of the custom SoC to add features and remove some that aren't needed, and some of those features make it to AMD off-the-shelf products and some don't.

As I said, AMD hands out what I am calling some sort of white paper to both Sony and MS listing out every single technology they have. And their respective timelines i.e, what's available and when what isn't will be. Sony will then literally pick what they want to go into their SOC. And by custom, that would usually just mean granular repurposing of specific components that are already there. Eg. repurpose what would have otherwise been a CPU core to a dedicated decompressor and call it a different name. Or what would have been a CU and make it a tempest engine. Or what would have been extra TMUs and make it a CBR ID buffer and accelerator. Or take what would have been a scheduler and turn it into a cache scrubber.

The point is, Sony and AMD are not actually making anything new. Sony is just taking what AMD has, and having AMD design a chip blueprint incorporating the things Sony has chosen. Yes, the custom part is the fact that there will be nothing else from AMD that is "configured" the way Sony's chip is, but not that Sony's chip somehow has some new/exclusive or super high-end tech in its SoC.

Agreed, though by all accounts, the PS5pro is based on RDNA3, not 4. So its base is going to be RDNA3 with certain components or tech from RDNA4. The only thing that will likely come from RDNA4 would be the RT cores. Everything else will probably be RDNA3-based.

That being said, considering RDNA4 is rumored to be used only for midrange, I suspect the RDNA4 card we see on the market will be a by-product of their work with Sony for PS5 Pro, much like the RX 480 and the RX 6700 (non-XT). If RDNA 4 releases next year I assume we'll see a similar situation, with the Pro using a cut-down version (i.e. 60 CUs vs 64) of the off-the-shelf card.

I feel matching the 4070/7800XT/7700XT is pretty much a lock. It would at least perform in that ballpark. In raster for certain, and if using RDNA4-based RT cores, then it could match the 4070 in RT too.

If they do this ($599) I would hope the PS5 Pro matches the RTX 4070 in raster and RT performance while also upgrading the CPU to Zen 4c or something like that. Though I fear Zen 2, judging by Cerny's PS4 Pro comments about not upgrading the CPU unless it's a new gen, unless that was just a cop-out because there weren't any good CPU options from AMD at the time.

SonGoku

Member

Yes, it's one of the benefits of working with AMD instead of designing it themselves and fronting the whole cost, as they did prior to PS4. But the chip R&D cost is still factored into the SoC cost, which yes, gets cheaper the bigger the order Sony makes, but is still one of the determining factors for the cost of the SoC beyond wafer cost, so it's not something that can be ignored when deciding on node shrinks.

It's not them paying 100s of millions in upfront costs to develop anything.

I can agree with this and include the caveat that the work AMD does for Sony is "recycled", so to speak, to launch a similar off-the-shelf GPU. For example, we could say Polaris cards existed because of the work AMD did for Sony/MS; that way they can maximize return on investment.

The point is, Sony and AMD are not actually making anything new. Sony is just taking what AMD has, and having AMD design a chip blueprint incorporating the things Sony has chosen.

How can we be 100% sure of this?

Agreed, though by all accounts, the PS5pro is based on RDNA3, not 4. So its base is going to be RDNA3 with certain components or tech from RDNA4. The only thing that will likely come from RDNA4 would be the RT cores. Everything else will probably be RDNA3-based.

From my understanding and past history I would expect whatever architecture PS5 Pro uses will be based on the current Radeon architecture at the time of release. So if there's no RDNA4 card at the time of PS5 Pro release then yes it would make sense to be based on RDNA3 with RT/AI improvements

Another point is the difference between RDNA3.5 and 4. If the former adds RT improvements and fixes RDNA3 bugs, there might not be that much of a difference between the two. Take RDNA2 for example: aren't its CUs nearly identical to RDNA1's except for the addition of RT hw on the TUs, and obviously higher clocks?

At the end of the day this is all that matters: if they can get substantial RT improvements to match a 4070 and add AI hardware for AI image reconstruction, it will be a banger. I'm surprised how well the 4070 can max out settings, including path tracing, on AW2 and CP at 1440p with DLSS Balanced with a console-like fps experience.

RDNA4-based RT cores, then it could match the 4070 in RT too.


Mr.Phoenix

Member

Technically they have done it before. The PS5. The PS5 has more in common with RDNA1 than it does with RDNA2. If you look at the die floorplans of RDNA1 and the PS5, you would be forgiven for thinking you were looking at the same thing. The front end, CUs, TMUs, ROPs, L2 cache, layout... everything is identical. The only real difference is the presence of RT cores. RDNA2 is much different, having L3 cache (Infinity Cache) to begin with, a different front end, etc.

How can we be 100% sure of this?

From my understanding and past history I would expect whatever architecture PS5 Pro uses will be based on the current Radeon architecture at the time of release. So if there's no RDNA4 card at the time of PS5 Pro release then yes it would make sense to be based on RDNA3 with RT/AI improvements

This is partly why I am convinced PS5pro would be based on RDNA3, not 4. The other reason is that the PS5pro design was probably finalized in early 2023. And at that time, RDNA4 was probably too far away. But we never really know I guess.

This is my reasoning too, I think the difference between RDNA3 and 4 will not be that much. More like an evolution. Because everything that is supposed to make RDNA4 what it is already exists in RDNA3. It just needs polish and/or improvement.

Another point is the difference between RDNA3.5 and 4. If the former adds RT improvements and fixes RDNA3 bugs, there might not be that much of a difference between the two. Take RDNA2 for example: aren't its CUs nearly identical to RDNA1's except for the addition of RT hw on the TUs, and obviously higher clocks?

The hardware for AI acceleration is already there... well, kinda. It's one of the things that would need some polish and/or improvement, but there are 2 AI units in each RDNA3 CU right now.

At the end of the day this is all that matters: if they can get substantial RT improvements to match a 4070 and add AI hardware for AI image reconstruction, it will be a banger. I'm surprised how well the 4070 can max out settings, including path tracing, on AW2 and CP at 1440p with DLSS Balanced with a console-like fps experience.

playsaves3

Banned

I think 700 would be good if they are actually going to upgrade the CPU.

600? I would've guessed higher since they are going to milk suckers this time.

playsaves3

Banned

I think if they actually make the specs I’m envisioning they would still be selling at a small loss for 599 (and possibly even at 699).

As much as PlayStation can charge that and more for a Pro with just DLSS/frame gen, I don't think they would, even if they prime the rumours channel with that pricing. The only way I see them charging that amount is if it is to set a new base price for launching the PS6, with the Pro being a free trial to see how a $599 price lands with key PlayStation consumers (early adopters).

The main reason why they won't do that IMO, is that it would give Xbox enough margin to do a X Pro at higher spec - while eating $300 or less in subsidizing the hardware - with a 1year delay, whereas at $499 for a Pro the only way Xbox could match up would be with a 2year delay and eating $300 or less in subsidizing, and I don't think a highly profitable PS5 Pro against the backdrop of a successful PS5 is necessary, and they'd rather sell nearer cost to show a market washout against Xbox; especially given the publishers they've bought. As it is cheaper for PlayStation to force Xbox into multiplatform publishing alongside a very weak Xbox console presence going forward IMO.

playsaves3

Banned

They can’t get away with a 599+ price point if they don’t upgrade the CPU. Some are even expecting 699 and that would be ludicrous without a CPU upgrade. To add further sting, this would be 599 or even 699 discless, so you still have to buy the disc drive on top. Either these specs are wrong or the console really is somehow going to be 499.

If they do this ($599) I would hope the PS5 Pro matches the RTX 4070 in raster and RT performance while also upgrading the CPU to Zen 4c or something like that. Though I fear Zen 2, judging by Cerny's PS4 Pro comments about not upgrading the CPU unless it's a new gen, unless that was just a cop-out because there weren't any good CPU options from AMD at the time.

Certainly do. I wasn't expecting SSD asset streaming to be that much of a paradigm shift. I'm much more conservative with my hopes and dreams nowadays; for next gen, for example, I now only expect 24GB to 32GB instead of 64GB.

Are you from the Southern Cone btw?


HawarMiran

Banned

STFU TASSI

Tassi

A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.

www.forbes.com

it is

HawarMiran

Banned

It would spark ridicule and anger, but it would still sell. They are selling a fucking controller for a third of that.

They can’t get away with a 599+ price point if they don’t upgrade the CPU. Some are even expecting 699 and that would be ludicrous without a CPU upgrade. To add further sting, this would be 599 or even 699 discless, so you still have to buy the disc drive on top. Either these specs are wrong or the console really is somehow going to be 499.

DJ12

Member

It does kind of confirm the leak that the Series X was this generation's "X to the power of 10" for Microsoft all along. I think the PS5 being so close to the X, and in many ways better, really caught MS with their pants down.

STFU TASSI

it is

They truly expected to be far ahead in all head-to-heads this time round.


HawarMiran

Banned

MS needs to learn that it is about the games. Switch proves that.

It does kind of confirm the leak that the Series X was this generation's "X to the power of 10" for Microsoft all along. I think the PS5 being so close to the X, and in many ways better, really caught MS with their pants down.

They truly expected to be far ahead in all head-to-heads this time round.

rnlval

Member

Prove it.

Mark Cerny in the Road to PS5 explicitly says otherwise about the PS5 custom geometry engine BVH tests blocking, and as he is the system architect I'll take that as a solid source on the matter. He also talked about us being in the early days of RT with many changes/evolution in techniques, and he has patents on the subject himself, so I'd argue he was never going the Nvidia route with fixed turnkey solutions for PlayStation, as it is the exact opposite of everything he has talked about, and AMD's engineering choices - compared to Intel and Nvidia - contradict your point too.

rnlval

Member

Maybe it's that I am not understanding you. Or maybe it's that I just don't agree with you. But I am not getting the point you are making.

Are you trying to say that for graphical workloads the reason we aren't seeing benefits of dual-issue compute is that there isn't a relative increase in TMUs and ROPs? Cause if that's what you are saying, then I don't agree.

Take the non-dual-issue-capable 2080 Ti for instance vs the 3080 Ti. Regardless of the increase in TMUs and ROPs on the 3080 Ti, it's still only about 50% better than the 2080 Ti, which tracks with the actual non-dual-issue TF difference between the two cards, vs being roughly 2.5x better as the claimed TF number would suggest.

And I am fully aware of what TMUs and ROPs do, and I am just not buying that they are somehow the bottleneck preventing us from seeing the claimed FP32 performance gains. If what is being said is that in in-game applications dual-issue compute adds only like 10% of relative performance, then fine, I can't argue with that, mainly because I can't prove it wrong, but that thing is marketed as a literal doubling in GPU compute. That's disingenuous.

NVIDIA GeForce RTX 3080 Ti Founders Edition Review

NVIDIA's GeForce RTX 3080 Ti is the green team's answer to AMD's recent Radeon launches. In our testing, this 12 GB card basically matches the much more expensive RTX 3090 24 GB in performance. The compact dual-slot Founders Edition design looks gorgeous and is of amazing build quality.

The average performance gap between RTX 3080 Ti and RTX 2080 Ti is less at lower resolution.

At 1080p, RTX 3080 Ti has a 25% average performance advantage over RTX 2080 Ti.

At 1440p, RTX 3080 Ti has a 35% average performance advantage over RTX 2080 Ti.

NVIDIA GeForce RTX 3080 Ti Specs

NVIDIA GA102, 1665 MHz, 10240 Cores, 320 TMUs, 112 ROPs, 12288 MB GDDR6X, 1188 MHz, 384 bit

NVIDIA GeForce RTX 2080 Ti Specs

NVIDIA TU102, 1545 MHz, 4352 Cores, 272 TMUs, 88 ROPs, 11264 MB GDDR6, 1750 MHz, 352 bit
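To put numbers on the TF argument, here is a rough sketch of the paper FP32 throughput implied by the two spec lines above. It assumes the usual convention of 2 FLOPs per core per clock (one fused multiply-add). Halving the 3080 Ti's core count to model "no dual-issue FP32" is my own simplification for illustration, not a vendor figure.

```python
# Paper FP32 throughput from the listed specs: cores x 2 FLOPs (one FMA) x clock.
# The "single issue" entry halves the 3080 Ti core count; that is an assumption
# made only to illustrate the argument, not an official number.

def fp32_tflops(cores: int, clock_mhz: float) -> float:
    """Theoretical FP32 TFLOPS, counting one fused multiply-add as 2 FLOPs."""
    return cores * 2 * clock_mhz * 1e6 / 1e12

rtx_2080_ti = fp32_tflops(4352, 1545)
rtx_3080_ti = fp32_tflops(10240, 1665)
rtx_3080_ti_single_issue = fp32_tflops(10240 // 2, 1665)

print(f"RTX 2080 Ti:                {rtx_2080_ti:.2f} TFLOPS")
print(f"RTX 3080 Ti (claimed):      {rtx_3080_ti:.2f} TFLOPS")
print(f"RTX 3080 Ti (single issue): {rtx_3080_ti_single_issue:.2f} TFLOPS")
print(f"Claimed ratio:      {rtx_3080_ti / rtx_2080_ti:.2f}x")
print(f"Single-issue ratio: {rtx_3080_ti_single_issue / rtx_2080_ti:.2f}x")
```

On paper clocks the claimed figure works out to roughly 2.5x the 2080 Ti, and the halved figure to roughly 1.27x. Real cards boost above these clocks, so treat both as ballpark values.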

At 4K resolution,

with pixel rate, RTX 3080 Ti has about a 37% advantage over RTX 2080 Ti.

with texture rate, RTX 3080 Ti has about a 27% advantage over RTX 2080 Ti.

with memory bandwidth, RTX 3080 Ti has about a 48% advantage over RTX 2080 Ti. Focus on the memory bandwidth i.e. 912.4 GB/s vs 616 GB/s

with L1 size, RTX 3080 Ti has about a 100% advantage over RTX 2080 Ti.

These are the GPUs' paper clock speed specs. Actual average clock speed can be higher, e.g. RTX 3080 Ti FE's average clock speed is 1780 MHz.

with pixel rate, RTX 3080 Ti has about a 39.5% advantage over RTX 2080 Ti.

with texture rate, RTX 3080 Ti has about a 28.9% advantage over RTX 2080 Ti.

For proper 2X performance scaling when TFLOPS is increased by 2X, scale non-shader hardware by 2X.
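The non-shader ratios can be recomputed from the spec lines quoted earlier in this post. This is a minimal sketch that assumes pixel rate = ROPs x clock and texture rate = TMUs x clock at the paper clocks. The bandwidth figures are the ones quoted in the post, and the helper names are made up for illustration.

```python
# Paper-spec fill rates and bandwidth for the two cards discussed above.
# Assumed formulas: pixel rate = ROPs x clock, texture rate = TMUs x clock.
specs = {
    "RTX 3080 Ti": {"clock_mhz": 1665, "tmus": 320, "rops": 112, "bandwidth_gbs": 912.4},
    "RTX 2080 Ti": {"clock_mhz": 1545, "tmus": 272, "rops": 88, "bandwidth_gbs": 616.0},
}

def pixel_rate(card: dict) -> float:
    """Pixel fill rate in GPixel/s."""
    return card["rops"] * card["clock_mhz"] / 1000

def texture_rate(card: dict) -> float:
    """Texture fill rate in GTexel/s."""
    return card["tmus"] * card["clock_mhz"] / 1000

def advantage_pct(new: float, old: float) -> float:
    """How much larger `new` is than `old`, in percent."""
    return (new / old - 1) * 100

new, old = specs["RTX 3080 Ti"], specs["RTX 2080 Ti"]
pixel_adv = advantage_pct(pixel_rate(new), pixel_rate(old))
texture_adv = advantage_pct(texture_rate(new), texture_rate(old))
bandwidth_adv = advantage_pct(new["bandwidth_gbs"], old["bandwidth_gbs"])

print(f"Pixel rate advantage:   {pixel_adv:.1f}%")
print(f"Texture rate advantage: {texture_adv:.1f}%")
print(f"Bandwidth advantage:    {bandwidth_adv:.1f}%")
```

Because both rates scale with the same clock, the pixel-rate advantage stays larger than the texture-rate advantage at any clock speed: the ROP count grew by about 27% while the TMU count grew by about 18%. None of these ratios gets anywhere near the ~2.5x jump in paper TFLOPS.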


Physiognomonics

Member

In 1 or 2 years:

Tassi

A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.

www.forbes.com

Forbes - Xbox Series X Y is the real upgrade, better than PS5 Pro, this is the second coming of Jesus, Digital Foundry analysis confirms.

Darkkahn

Member

Is this written by a 5-year-old?

Tassi

A PS5 Pro Will Feel Extra Unnecessary This Time Around

A PS5 Pro feels odd in a generation of supply shortages, price inflation and few PS5-only titles so far. But that could change.

www.forbes.com

rnlval

Member

Oh, really?

97 average vs 84 in favor of the 3080 at 1440p. 52 vs 47 average in favor of the 3080 at 4K. The 3080 wins by 10 to 15% over the 6800. We've been through this already. Stop lying and claiming falsehoods. The absolute best the PS5's GPU can do is get within 20-25% of the 3080, not 2%, let alone outperform it. And AMD does run better than NVIDIA in this game, which is why the 6800 XT beats the 3080, y'know, the actual card it's supposed to rival, not the lower tier 6800.

Right? Like, how the fuck is it complicated to understand? The PS5 Pro will very likely be in the ballpark of a 4070/3080/7800 XT in rasterization performance in general. The 4080 is still about 50% faster than those guys. Assuming a very well-optimized title on the Pro that doesn't run all that well on PC, then the Pro could reach 4070 Ti level of performance, which is generally around 25% faster than its class of GPU.

The PS5 Pro would need to be as fast as the 4070 Ti out of the gate and then hope for another 25% boost in optimized games to get on the level of a 4080. What are the odds? And that's in raster alone.

How is it so hard for this kid to understand simple maths and scaling? Jesus Christ.
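The scaling argument in the quoted post can be checked with a few lines of arithmetic, since percentage gains compound multiplicatively. This is only a sketch. The 1.25x and 1.50x multipliers are the rough figures from the post, not measurements, and the variable names are illustrative.

```python
# Relative raster performance with the 4070/3080/7800 XT class normalized to 1.0.
# The tier multipliers are the approximate figures quoted in the post above.
tier_4070 = 1.00
tier_4070_ti = tier_4070 * 1.25   # "around 25% faster"
tier_4080 = tier_4070 * 1.50      # "about 50% faster"

def required_uplift_pct(current: float, target: float) -> float:
    """Extra performance, in percent, needed to get from `current` to `target`."""
    return (target / current - 1) * 100

# Gap that remains once a console already performs at 4070 Ti level.
gap_from_4070_ti = required_uplift_pct(tier_4070_ti, tier_4080)

# Two stacked 25% gains compound rather than add.
two_stacked_gains = tier_4070 * 1.25 * 1.25

print(f"Uplift needed from 4070 Ti level to 4080 level: {gap_from_4070_ti:.0f}%")
print(f"Two stacked 25% gains: {two_stacked_gains:.4f}x the 4070 tier")
```

By these rough figures, a Pro already at 4070 Ti level would still need about a 20% uplift to reach the 4080. Two stacked 25% gains come to about 1.56x, slightly past the 1.5x mark, so the post's overall point holds either way.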

Your video used a Ryzen 5 7600X CPU.

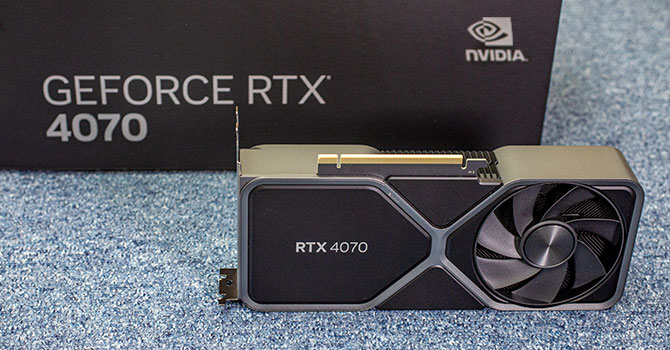

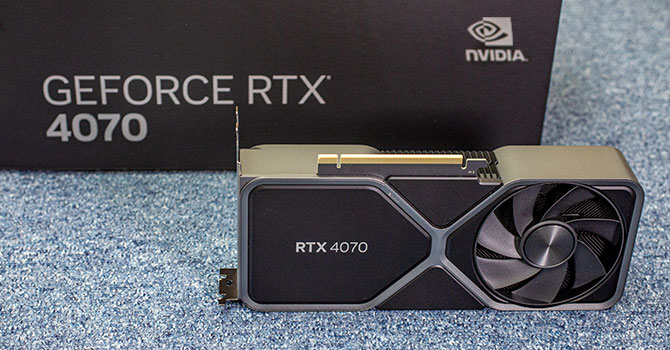

NVIDIA GeForce RTX 4070 Founders Edition Review

NVIDIA's GeForce RTX 4070 launches today. In this review we're taking a look at the Founders Edition, which sells at the baseline MSRP of $600. NVIDIA's new FE is a beauty, and its dual-slot design is compact enough to fit into all cases. In terms of performance, the card can match RTX 3080...

At 4K average raster performance, RTX 3080 10 GB slightly beats RX 6800 XT.

-----


RTX 3080 10 GB murdered RX 6800 XT in average raytracing performance.

Techpowerup's RTX 4070 review used the Intel Core i9-13900K Z790 platform. A Ryzen 5 7600X doesn't minimize the CPU influence, i.e. use a Ryzen 7 7800X3D or Ryzen 9 7950X3D for the AMD camp.


rnlval

Member

RTX 3080 10 GB can run out of VRAM at 4K.

The game favors AMD cards so the 6800 outperforms the 3080 there…

The Last of Us Part I Benchmark Test & Performance Analysis Review

The Last of Us is finally available for PC. This was the reason many people bought a PlayStation, it's a masterpiece that you can't miss. In our performance review, we're taking a closer look at image quality, VRAM usage, and performance on a selection of modern graphics cards.

At 1080p, RTX 3080 (cutdown GA102) 10 GB is slightly superior over RX 6800 XT. The weakness of many Ampere SKUs is the low VRAM.

For 4K, its GA102 relative, the RTX 3090 with 24 GB VRAM, maintains its performance lead over the RX 6900 XT and RX 6800 XT.

Techpowerup didn't test RTX 3080 12 GB VRAM and RTX 3080 Ti 12 GB VRAM SKUs.

VRAM usage for The Last of Us Part I: https://www.techspot.com/review/2656-the-last-of-us-gpu-benchmark/

At 1440p Ultra Quality, RTX 3080 10 GB has similar average results to RX 6800 XT, but the RTX 3080 10 GB's 1% lows are inferior.

RTX 3080 Ti 12 GB and RX 6900 XT 16 GB have similar average FPS and 1% low results from 1080p to 4K.


rnlval

Member

My point with the RDNA 3 84 CU vs RDNA 2 80 CU comparison is the performance improvement between GPUs with similar CU counts.

Try to follow the discussion, ronbot.