Thebonehead

Gold Member

> it's based on AMD internal slides

He's been caught out on fake ones before though.

> He's been caught out on fake ones before though

It's real.

> Sorry, but MLID has been caught lying so many times, that he now has no credibility.

It's real, from AMD slides (December).

> It's real, from AMD slides (December).

So. You’re saying that a $650 card is supposed to compete with what until last week was a $1,000 card, plus having more space taken up by AI cores to run an acceptable upscaler…

Navi 48 XTX was projected to compete with the XTX (±5%).

Navi 48 XT with the XT (±5%).

The rest of the story is just drivers to reach that performance.

> I'm sorry, but who the fuck is gonna buy a 9070XT without even considering or waiting for 5070 reviews first?
>
> The answer is nobody.
>
> If AMD launches first, the immediate response has historically always been "Wait for Nvidia". So what's the fucking point?

I prob will, and I don't think the 5070 is gonna make it for me... I'd be surprised if they end up being kinda at the same level; AMD has to fuck it up too much for that.

> You’re saying that a $650 card

We don't know the price. To compete with the 5080 they just need to slap GDDR7 onto Navi 48 XTX, but will they do it?

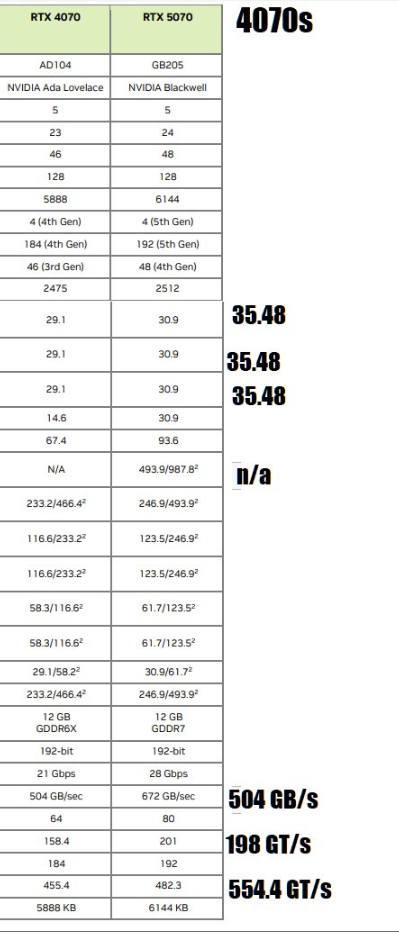

> I don't think 5070 is gonna make it for me.

The vanilla 5070 could be worse than the 4070S, or the same.

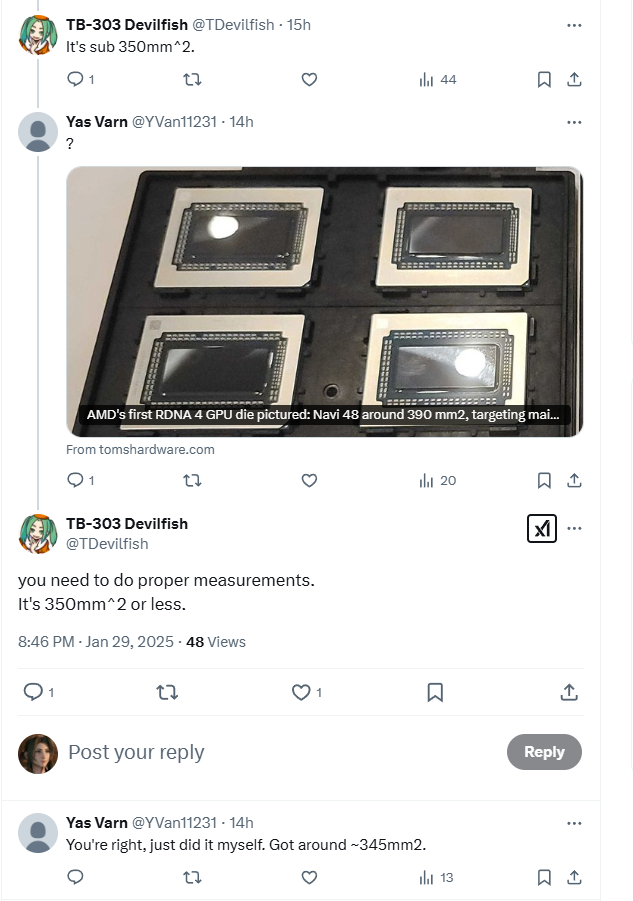

> So. You’re saying that a $650 card is supposed to compete with what until last week was a $1,000 card, plus having more space taken up by AI cores to run an acceptable upscaler…

This is usually the norm with a node shrink. AMD had one, Nvidia didn't. The die size of the 5080 is 378 mm², Navi 48 is 390 mm².

Does Lisa Su have photos of the TSMC CEO with a horse or something?

> Navi 48 is 390 mm².

Sub 350.

> sub 350

I could only find about 390 online.

> I could only find about 390 online

Because that was calculated from a screen. People who know are saying it's sub 350.

We don't know price

> So their 9070xt is now not a 5070ti competitor, but is close to the 5080.

Then they’d probably price it around $900 (10% lower price for 10% less performance).

> Then they’d probably price it around $900

Zero chance. It's a cheap SKU.

> Then they’d probably price it around $900 (10% lower price for 10% less performance).

No one would buy that card at that price. Nvidia still has superior upscaling and ray tracing. AMD has now positioned their card as an xx70 competitor, so it needs to compete on price as well. I think it will be $499 for the 9070 and $650 for the 9070xt.

> Nvidia still has superior upscaling and ray tracing

In the 5000 series, RT has regressed in some games.

> In the 5000 series, RT has regressed in some games.

I would be surprised if AMD now matches Lovelace, but you're right that Blackwell doesn't seem to have any significant advantage.

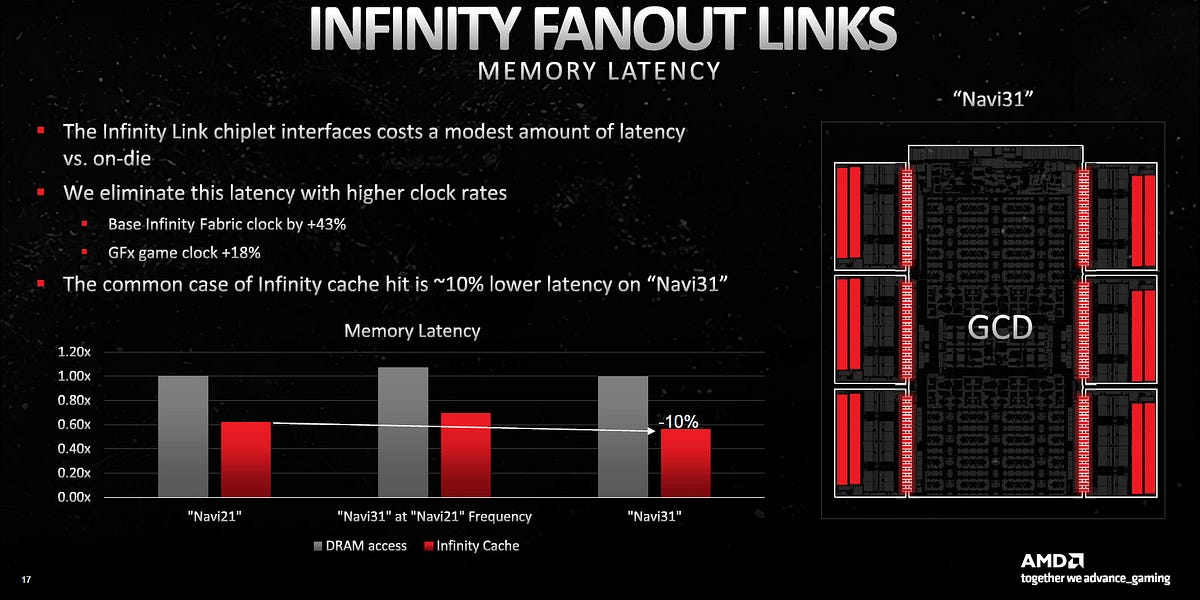

> Blackwell doesn't seem to have any significant advantage.

That's because Blackwell's caches are meh, even though the RT cores are improved, but they can fix the caches in the 6xxx series.

> Coreteks is almost as bad as MLID.

MLID at least gives us different slides; Coreteks is always just pure speculation.

After watching this vid it all makes sense now, actual performance of rdna4 amd cards, its delay, the naming etc.

> No one would buy that card at that price. Nvidia still has superior upscaling and ray tracing. AMD has now positioned their card as an xx70 competitor, so it needs to compete on price as well. I think it will be $499 for the 9070 and $650 for the 9070xt.

That price for a 4080 tier card would be bananas. I don’t see it happening just because nothing good ever happens.

They really fucked up with their naming, but that's great news for us

> That price for a 4080 tier card would be bananas. I don’t see it happening just because nothing good ever happens.

What I'm fearing is that AMD thinks they have way too good of a deal and are discussing whether they should price pair with Nvidia. I can see AMD execs being divided, probably due to AI data centers paying whatever anyway.

Add lower cache bandwidth and worse cache latency for Blackwell.

> I think this guy is full of shit, usually, but this explanation is the one that makes the most sense to me.

What that should tell you is that you just like what he's saying.

> What I'm fearing is that AMD thinks they have way too good of a deal and are discussing whether they should price pair with Nvidia. I can see AMD execs being divided, probably due to AI data centers paying whatever anyway.

It’ll be the logical thing to do from a business perspective. Everything else is distilled hopium.

> Why are they comparing 9070xt to 5080 without taking DLSS into account? Native rendering is basically theoretical - in practice everyone uses DLSS since it performs and usually even looks better (less shimmer etc)

FSR4 is allegedly very, very good, per a media outlet that tried it. Also, upscalers and other proprietary tech are not in every game, so you have to rely on other upscalers, mods, etc.

> What that should tell you is that you just like what he's saying.

You're focusing on ONE thing in that video. I agree that Nvidia did not rebrand anything.

Having watched this video, this is just pure speculation.

Jensen Huang rebranded the 5070Ti into the 5080? To force AMD's prices lower? Lmao. When the fuck would Nvidia ever sacrifice their 80 class GPU getting embarrassed by a cheaper "70" class GPU? That didn't happen with Ampere, where their costs were significantly smaller so they would have had way more room to clip their own margins, so why the hell would it happen now?

> Why are they comparing 9070xt to 5080 without taking DLSS into account? Native rendering is basically theoretical - in practice everyone uses DLSS since it performs and usually even looks better (less shimmer etc)

As good as DLSS seems to have become, not all games use DLSS.

Timestamped to the conclusion but the whole video is a sad reminder of how easily the PC gaming sphere has been manipulated.

Dude just stripped AMD buck naked and spanked them on the ass.

They finally have some decent hardware and are instead fucking up on simply getting it to market? What is this shit show? This is all Frank Azor's doing, goddamn.

So in conclusion, we know really nothing about pricing, but knowing AMD they are very likely to fuck it up.

> Quite the opposite, they delayed it to improve drivers and software stack.
>
> And to get more stock, not to do a paper launch, like Nvidia did with the RTX 5080 and 5090.
>
> The question now is if AMD is going to screw up with the price.

They delayed because Blackwell is much slower than expected and they saw an opportunity to raise prices if the 9070 XT ended up faster than the 5070Ti. But 5080 reviews were so negative they probably won't do it now.

> As good as DLSS seems to have become, not all games use DLSS.

So which upcoming games won't?

> So which upcoming games won't?

I don’t know?!? That's a pretty wide question. You can’t assume all future games will, though. Heck, even announced games don’t always get it lol. Destiny 2 was announced when RTX was first rolled out and never got it. You can’t assume anything, or that even older games will get DLSS 4 with frame gen options, therefore it’s not a static baseline for apples to apples.

The only big title in the last few years that I have seen is Helldivers 2, but that is more of a CPU-bound game.

> You can find my long list of replies in the other previous graphics thread that was from this guy's channel and modern lighting, where I mainly agree with him that most of the time they are not using Lumen/RT/path tracing correctly and that these make the most sense when a game has a very dynamic scene with building/destruction.
>
> But Nvidia / AMD / Intel gave a tool to make the above, including for the games where it makes sense. Blaming them for bad implementations just takes the cake lol. Worthless video this time imo. Devs are the ones holding the key.
>
> His crusade on any AA is getting tiring too. Sure bud, do make your solution which you need funding on.
>
> Keeping a 1060 as some benchmark for how games should run in modern days is completely ridiculous. It's 9 years old. Even pure rasterized games left that card behind. I know, I had one.

All I can say is that for $5 I got a program that is doing the same thing a $2000 GPU is being marketed as having (MFG). I feel bad for the people that buy a 5000 series card because it makes more frames. Just another in a long line of bullshit buzzwords people gobbled up with shit eating grins.

> All I can say is that for $5 I got a program that is doing the same thing a $2000 GPU is being marketed as having (MFG). I feel bad for the people that buy a 5000 series card because it makes more frames. Just another in a long line of bullshit buzzwords people gobbled up with shit eating grins.

Wait - that sounds affordable. How does something like that perform on something new, like KCD II?

> I don’t know?!? That's a pretty wide question. You can’t assume all future games will, though. Heck, even announced games don’t always get it lol. Destiny 2 was announced when RTX was first rolled out and never got it. You can’t assume anything, or that even older games will get DLSS 4 with frame gen options, therefore it’s not a static baseline for apples to apples.

Sure, but I bet 99-100% of demanding AAA games will support DLSS. Whether indies have DLSS hardly matters, since they are performant regardless.