llien

Banned

(that DLSS is nearly flawless and even better than native was never claimed by anyone)

Nvidia claimed the 3080 was 2 times faster than the 2080.

Nvidia claims that their 5070 has 4090 performance. Not bad.

Because Raytracing is going to become a requirement in almost all big games going forward.

I can use FSR1-3 on a GTX 10 series card, why do I need to pay $700?

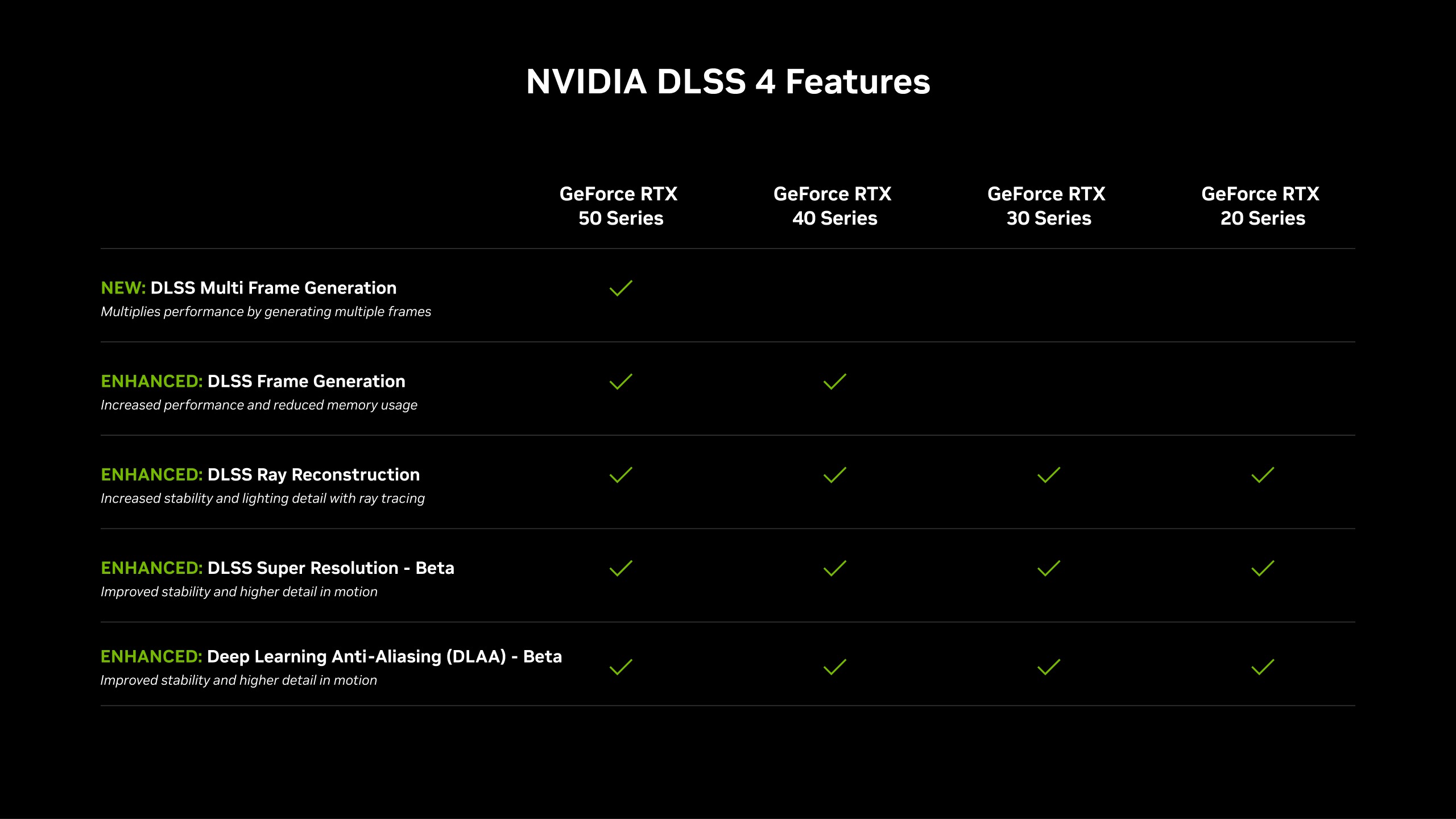

It will be backward compatible with all games that use DLSS and can be forced from the new Nvidia App in all games. It will be available with the release of the new cards.

*For now

DLSS4 isn’t multi-frame-gen. That’s a new feature unique to Blackwell.

Both DLSS and TAA will look soft without a sharpening mask. With my ReShade settings, TAA / DLSS quality improves dramatically, but maybe with this improved model the DLSS image will look sharp even without ReShade.

yup, it's already better in quality mode than native TAA in many games, but native TAA is also kinda shit... so being better than kinda shit was already very welcome and is why people love DLSS. and the more it improves over native TAA, the better.

like in Doom Eternal or Indiana Jones, not using DLSS quality mode and instead running native with TAA is literally costing you image quality while running worse. and while not looking perfect, the fact that it looks objectively better than native TAA while giving you a performance boost is great.

and now this great thing gets a massive quality boost.

Yep

Wait what. You mean in all games that already support DLSS, right?

Console is really a scam if you think about it... RTX20 is receiving the new features but to get the PiSSeR you need to burn $700 and the customers who bought a base Ps5 get the finger... and it isn't even worth it.

To be fair i do think DLSS looks better than native in a lot of games, with older games when the tech was new coming off a bit dodgy looking.

It's magic.

i do think DLSS looks better than native in a lot of games

When was it that the "hardwahr RT" gimmick was first hyped, mm, 6+ years ago, right?

Because Raytracing is going to become a requirement in almost all big games going forward.

Well, on my screen DLSS doesn't blur, in fact it often looks sharper than native res. Even FSR can look sharper than TAA.

It's magic.

Yeah, well, maybe.

I did have this sort of conversation in the early days of glorified TAA upscaling. (Nobody seriously pushed that with the AI upscaling, i.e. DLSS 1.)

I was challenged into "guess which of these is native" in all seriousness.

For some magical reason, the pics with more blur and less detail appeared to be universally the ones with glorified TAA upscaling applied.

So, that's where my skepticism comes from, cough.

On top of anything hyped by PF more likely than not being scheisse.

Fucking APP

Guess we have to thank AI sales for backwards compatibility, hopefully it runs the same or better on my 20 series.

Forcing things from an app/driver level, where have I heard that before and why do I remember some issues with it.... ;P

Under the hood stuff is rendered at lower resolution (definitely), TAAed (definitely), denoised using NN (most likely).

I mean a lot of people say this and I don't think it's some big conspiracy theory, or everyone is clouded by delusion or something.

AFMF, driver level hijacking/injecting from AMD, triggered anticheats.

DLDSR?

Guys, forget upscaling and frame gen a minute, there's huge megabombs

The bolded ones were not even presented at the conference and are huge game changers for path tracing

Neural radiance cache path tracing / RTX Mega Geometry / Neural Texture Compression / RTX Neural faces

RTX Mega Geometry accelerates BVH building, making it possible to ray trace up to 100x more triangles than today’s standard.

RTX Neural Radiance Cache uses AI to learn multi-bounce indirect lighting to infer an infinite amount of bounces after the initial one to two bounces from path traced rays. This offers better path traced indirect lighting and performance versus path traced lighting without a radiance cache. NRC is now available through the RTX Global Illumination SDK, and will be available soon through RTX Remix and Portal with RTX.

They've basically rebuilt EVERYTHING

The shader pipeline with neural shaders

The BVH structure with Mega geometry

The path tracing solution with neural radiance cache

The upscaling technology by switching to a transformer model

But is this also for the older cards?

This is what happens when a company is competing only with itself and it's still pretty early tech

I don't get folks who with every new DLSS version finally realize the last wasn't as flawless as they've been saying. It's SO awesome. No wait, NOW it's awesome. No wait, NOW it finally fixes the HUGE issue we didn't care for before (also omg @ the lame competition not having it fixed yet)! Etc.

Do you mean DLSS 3? There’s no way FSR will be a Nintendo solution on Nvidia hardware.

Switch won't have any frame gen from Nvidia (Ampere). Only FSR3.

Bro that's a lot of frames.

Might consider building a new machine sooner than expected lol.

DLSS is the game run at a lower resolution, plus TAA (antialiasing) + upscaling and some NN denoising.

TAA is trash, DLSS is better TAA, therefore better than native if properly implemented.

Oh, FFS...

Death Stranding and many more titles look objectively better with DLSS quality mode than running native

DLSS is the game run at a lower resolution, plus TAA (antialiasing) + upscaling and denoising.
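To put a rough number on the "lower resolution" part, here is a minimal sketch. The per-axis scale factors are the commonly cited values for each DLSS mode and are an assumption here (nobody in this thread posted them, and individual games can override them); the function names are made up for illustration.

```python
# Commonly cited per-axis render scales for DLSS modes.
# ASSUMPTION: these values do not come from this thread, and a given
# game may use different ones.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(out_w, out_h, mode):
    """Return the (width, height) the game renders at before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(out_w, out_h, mode):
    """Return the share of output pixels that are actually rendered."""
    w, h = internal_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        share = pixel_fraction(3840, 2160, mode)
        print(f"{mode}: {w}x{h} ({share:.0%} of 4K pixels)")
```

Under those assumed factors, quality mode at a 4K output renders 2560x1440, which is a bit under half of the output pixels; the rest is reconstructed by the temporal upscaler.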

"therefore better than native" my bottom.

have you ever used it or are you just talking out of your ass?

That means yes. I was here to look for this comment. Smart thinking

Could Switch 2 use any of this shit?

The Nvidia App is actually pretty good.

If you don't like it you could probably do all this with Nvidia Inspector or manually inject using DLSSTweak Tool or just copy/paste.

But i imagine for most people using the App just makes life easier.

kevboard

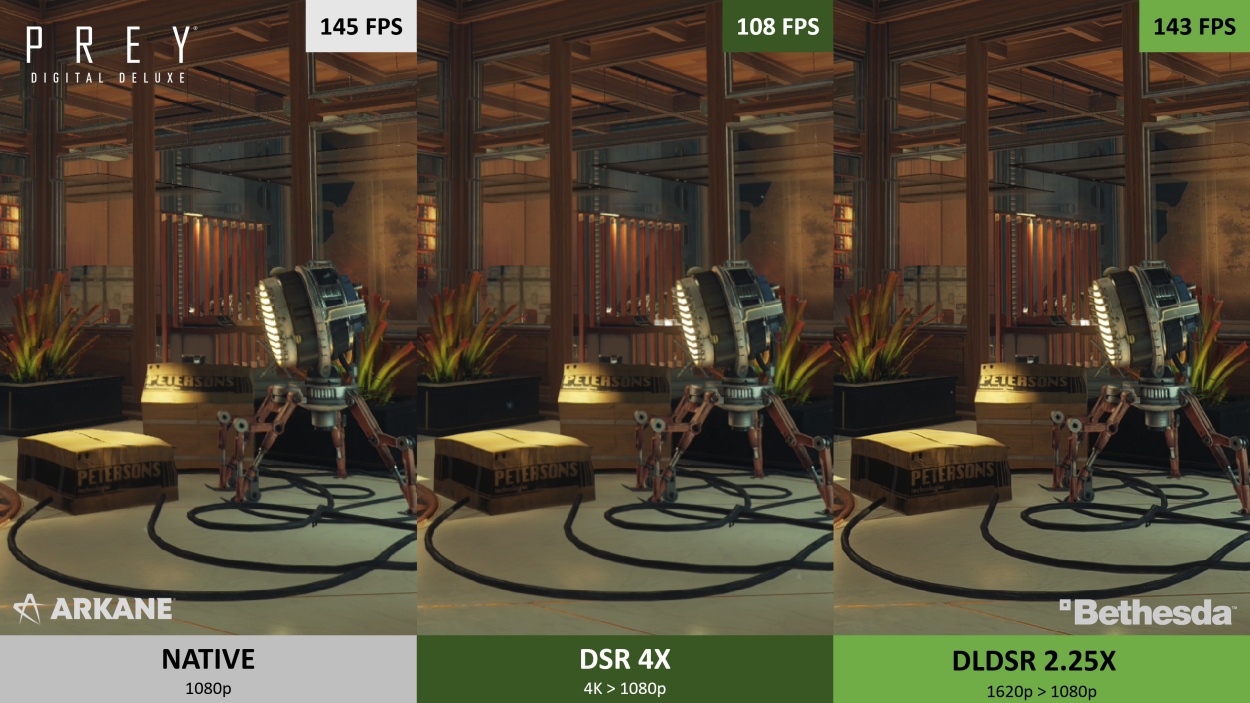

I don't know where those pics come from, but the second does look better than the above image, no noticeable loss of detail or blur either.

Just sad it's not in the Control Panel as well, in the convenient section where you could always change those kinds of settings.

Mark Cerny just made a presentation about making a CNN run on the PS5 Pro, meanwhile Nvidia has moved on to a transformer model.

Nvidia are always 2-3 steps ahead of everyone else. I can see why AMD chickened out last night. They probably got wind of Nvidia's announcement(s) and decided to play reactive as they've always done in the GPU market and waited for prices before coming up with their own strategy.

Cerny's boasting about tech that Nvidia was working on 2 generations ago.

I'm not sure that is clear at this point. I'd bet it is for future implementation. We have DirectStorage too, but you barely see it being widely used since its announcement.

Can someone clarify if RTX Neural Textures are supported in previous RTX GPUs too?

Interesting.

Probably, as this is a technique to be incorporated into Nvidia's SDK. AMD and Intel are also working on this.

Microsoft will incorporate it into DirectX, so it should even appear on the Xbox. I've seen some extensions being worked on for Vulkan as well.

Enabling Neural Rendering in DirectX: Cooperative Vector Support Coming Soon - DirectX Developer Blog (devblogs.microsoft.com)

Why wouldn't it be in the Control Panel?

The Control Panel has most if not all of the settings the Nvidia App has (sans filters and overlays). I'm pretty sure the Nvidia App driver settings simply change things in the Control Panel without you needing to actually open it, and if you want even more granularity, use Nvidia Inspector, which has even more settings.

The App is just quick access.

Well, hopefully it will be. I might have used Nvidia Inspector back in the day when the 770 would not clock down properly with 2 monitors, but I'll see how it goes, probably just copy stuff or whatever.

Interesting.

I'm curious to see if Nintendo (Switch 2) and Sony (PS6 most likely, not PS5 Pro) plan to follow suit...

I take it you haven't looked in the Nvidia App, Control Panel or Inspector in a long long long time?

The Nvidia App drivers section is literally.....literally shortcuts to Control Panel settings you are most likely to use.

Note: The Nvidia App literally came out like two months ago... were you thinking of GeForce Experience?

I think the part that isn't coming across to others in your observation is that without MFG or DLSS FG this isn't a free lunch on the older hardware.

why is it hard for some to get this?? it's in the picture... from their page. Multi Frame Gen is under DLSS 4. however, the only new thing they added to DLSS is MFG and it's 5000 series exclusive. did they enhance the old DLSS? sure. but the real full DLSS4 is for 5000 series only

As a fellow 3080 Ti owner: wait for independent reviews. After that, who knows, you might not even want to look at the 5080, since it's definitely below even the 4090 (not to mention 16 GB of VRAM will be a bottleneck in 2-3 years at 4K). With the 5090 you at least get an over 100% performance jump in raster vs the 3080 Ti, on top of all the new features, and of course 32 GB of VRAM, so it's 100% guaranteed never to be a bottleneck even at 4K max RT/path tracing in future games, even 5-6 years from now. Hell, the 5090 is stronger than the future PS6.

Yea

My 3080 Ti was begging to be put out behind the barn when I tried Full RT in that game, there was no combination that made it feasible unless I was aiming for 20-30 fps (unacceptable).

Went with Lumen for that one.

5080 looks mighty tempting